If you have already hardened the password security policies for local user accounts on Linux (set password expiry and password quality, limited repeated access attempts, added a dictionary check, increased the logged command history size), you will want the users on your Linux / UNIX / BSD system to be reminded to change their password in time, before it expires and they lose access to their account. A small script can do that: it checks the upcoming expiry for a predefined list of users and emails kept in an array, using the lslogins command, as you will learn in this article.

The script below was written by my colleague Lachezar Pramatarov (credit for the script goes to him) to solve an annoying expiry problem we had all the time: my colleagues and I often ended up with expired accounts and had to ask for a password reset, and sometimes even for account locks to be cleared. Hopefully this little script will help other admins of legacy Unix systems get rid of the account expiry problem.

For the script to work you need a properly configured SMTP (mail) server, with or without a relay, so that the script can send mail to the predefined email addresses that should be notified.

Here is an example of a user whose password is about to expire in a couple of days and who will benefit from getting the alert that he should hurry up and change his password before it is too late 🙂

[root@linux ~]# date

Thu Aug 24 17:28:18 CEST 2023

[root@server ~]# chage -l lachezar

Last password change : May 30, 2023

Password expires : Aug 28, 2023

Password inactive : never

Account expires : never

Minimum number of days between password change : 0

Maximum number of days between password change : 90

Number of days of warning before password expires : 14
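To see how many days remain for an account like the one above, the date arithmetic can be sketched as follows. This is only an illustration, not part of the original script: the helper name days_until is made up here, and it assumes GNU date, which accepts date strings in the format printed by chage.

```shell
#!/bin/bash
# days_until EXPIRY [TODAY]
# Prints the number of whole calendar days from TODAY (default: today's date)
# to EXPIRY. Both dates are parsed as UTC midnight so that DST changes
# do not skew the result. days_until is a hypothetical helper name.
days_until() {
    local expiry=$1 today=${2:-$(date +%F)}
    local exp_s now_s
    exp_s=$(date -u -d "$expiry" +%s) || return 1
    now_s=$(date -u -d "$today" +%s) || return 1
    echo $(( (exp_s - now_s) / 86400 ))
}

# Example with the dates from the chage output above
days_until "Aug 28, 2023" "2023-08-24"   # prints 4
```

The expiry date itself could be taken from chage, for example with chage -l lachezar | awk -F': ' '/^Password expires/ {print $2}', assuming the English output format shown above. If the field says "never", date fails to parse it and the function returns a non-zero status.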

Here is the user_passwd_expire.sh script that will notify the user:

# vim /usr/local/bin/user_passwd_expire.sh

#!/bin/bash

# This script sends warning emails about password expiration

# to the participants in the list below:

# 20, 15, 10 and 0-7 days before expiration

# ! The EXPIRED alert is sent only if the day is Wednesday: if (( $(date +%u)==3 )); !

# email address that receives the EXPIRED alerts

alert_email='alerts@pc-freak.net';

# only users that belong to this (admin) group are checked

admin_group="admins";

notify_email_header_customer_name='Customer Name';

declare -A mails=(

# list below the accounts which will receive account expiry emails

# syntax to define uid / email

# ["account_name_from_etc_passwd"]="real_email_addr@fqdn";

# ["abc"]="abc@fqdn.com"

# ["cba"]="bca@fqdn.com"

["lachezar"]="lachezar.user@gmail.com"

["georgi"]="georgi@fqdn-mail.com"

["acct3"]="acct3@fqdn-mail.com"

["acct4"]="acct4@fqdn-mail.com"

["acct5"]="acct5@fqdn-mail.com"

["acct6"]="acct6@fqdn-mail.com"

# ["acct7"]="acct7@fqdn-mail.com"

# ["acct8"]="acct8@fqdn-mail.com"

# ["acct9"]="acct9@fqdn-mail.com"

)

declare -A days

# days[user] = days left until expiry, computed as last password change + 90 days

while IFS="=" read -r person day ; do

days["$person"]="$day"

done < <(lslogins --noheadings -o USER,GROUP,PWD-CHANGE,PWD-WARN,PWD-MIN,PWD-MAX,PWD-EXPIR,LAST-LOGIN,FAILED-LOGIN --time-format=iso | awk '{print "echo "$1" "$2" "$3" $(((($(date +%s -d \""$3"+90 days\")-$(date +%s)))/86400)) "$5}' | /bin/bash | grep -E " $admin_group " | awk '{print $1 "=" $4}')

#echo ${days[laprext]}

for person in "${!mails[@]}"; do

echo "$person ${days[$person]}";

tmp=${days[$person]}

# echo $tmp

# skip accounts for which lslogins returned no data (e.g. not in $admin_group)

[[ -n "$tmp" ]] || continue

# each person receives mail only when 20 / 15 / 10 days remain till expiry; with 7 days or less the alert mail is sent every day

if (( (${tmp}==20) || (${tmp}==15) || (${tmp}==10) || ((${tmp}>=0) && (${tmp}<=7)) ));

then

echo "Hello, your password for $(hostname -s) will expire after ${days[$person]} days." | mail -s "$notify_email_header_customer_name $(hostname -s) server password expiration" -r passwd_expire ${mails[$person]};

elif ((${tmp}<0));

then

# echo "The password for $person on $(hostname -s) has EXPIRED before ${days[$person]} days. Please take an action ASAP." | mail -s "EXPIRED password of $person on $(hostname -s)" -r EXPIRED ${mails[$person]};

# ==3 meaning day is Wednesday, the day on which the OnCall person changes

if (( $(date +%u)==3 ));

then

echo "The password for $person on $(hostname -s) has EXPIRED. Please take an action." | mail -s "EXPIRED password of $person on $(hostname -s)" -r EXPIRED $alert_email;

fi

fi

done

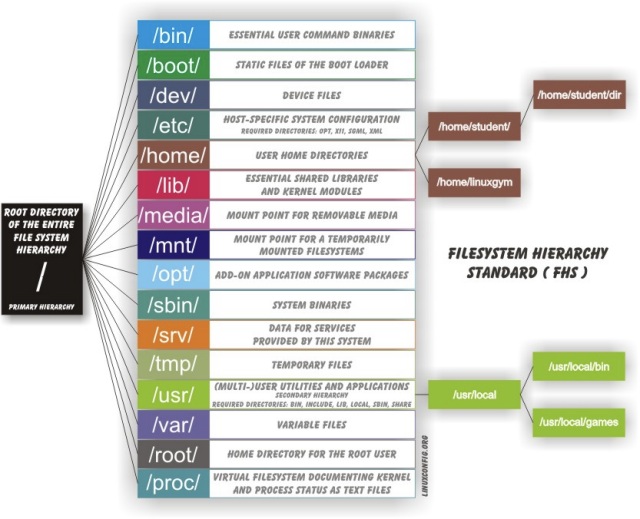

To make the script notify about expiring user accounts, place it in some directory, let's say as /usr/local/bin/user_passwd_expire.sh, make it executable and configure a cron job that runs it once a day.

# cat /etc/cron.d/passwd_expire_cron

# /etc/cron.d/pwd_expire

#

# Check password expiration for users

#

# 2023-01-16 LPR

#

02 06 * * * root /usr/local/bin/user_passwd_expire.sh >/dev/null

The cron job runs the script every morning at 06:02. It sends a warning email to the user when 20, 15 or 10 days are left before the password expires; once only 7 days are left, it sends the alert every single day (7th, 6th … 0 days) until the password expires. For passwords that have already expired, the alert to the alert_email address goes out only if the day is Wednesday (3rd day of the week).
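The schedule is easier to check when the decision is isolated from the mail sending. The sketch below mirrors the conditions used in the script; the function name notify_action and its output labels are invented for this illustration and do not appear in the original.

```shell
#!/bin/bash
# notify_action DAYS_LEFT DAY_OF_WEEK
# Prints which mail the script's conditions would trigger:
#   warn-user   - 20, 15, 10 or 0..7 days left (sent on any day of the week)
#   alert-admin - password already expired and it is Wednesday (date +%u == 3)
#   none        - anything else
# notify_action is a hypothetical helper, not part of user_passwd_expire.sh.
notify_action() {
    local days=$1 dow=$2
    if (( days == 20 || days == 15 || days == 10 || (days >= 0 && days <= 7) )); then
        echo "warn-user"
    elif (( days < 0 && dow == 3 )); then
        echo "alert-admin"
    else
        echo "none"
    fi
}

# Show the outcome for a few sample values, assuming today is Wednesday
for d in 20 12 7 0 -3; do
    printf '%3d days left: %s\n' "$d" "$(notify_action "$d" 3)"
done
```

In real use the second argument would be $(date +%u), the same value the script tests against 3.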

If you don't have any expiring accounts and you want to force a specific account to have an expiry date, you can do it with:

# chage -E 2023-08-30 someuser

Or set it for newly created system users with:

# useradd -e 2023-08-30 username

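That's it, the script will notify you on user password expiry. Keep in mind that chage -E and useradd -e set the account expiry date, while the script above calculates the password expiry from the date of the last password change.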

If you need, for example, to set a single account to expire 90 days from now (3 months), which is a fairly standard expiry policy among admins, get the date to pass to chage -E with:

# date -d "90 days" +"%Y-%m-%d"

2023-11-22
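The two steps can be combined so that the date never has to be typed by hand. A minimal sketch, assuming GNU date; someuser is a placeholder account name, and the command is only printed here rather than executed:

```shell
#!/bin/bash
# Build the date 90 days from today and show the matching chage command.
user="someuser"                         # placeholder account name
expiry=$(date -d "90 days" +%Y-%m-%d)   # GNU date relative-date syntax
echo "chage -E $expiry $user"           # remove the echo and run as root to apply
```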

Ideas for user_passwd_expire.sh script improvement

The downside of the script, if you have many local user accounts, is that you have to hardcode each username and the email address attached to it, which is a tedious task if you have 100+ accounts.

However, if you already have a multitude of accounts in /etc/passwd within a known UID range, it is pretty easy to loop over them in a small shell loop and build the array from that. Of course, for a solution like this to work, each user's email has to be stored in the GECOS data, set with a command like chfn.

[georgi@server ~]$ chfn

Changing finger information for test.

Name [test]:

Office []: georgi@fqdn-mail.com

Office Phone []:

Home Phone []:

Password:

[root@server test]# finger georgi

Login: georgi Name: georgi

Directory: /home/georgi Shell: /bin/bash

Office: georgi@fqdn-mail.com

On since Thu Aug 24 17:41 (EEST) on :0 from :0 (messages off)

On since Thu Aug 24 17:43 (EEST) on pts/0 from :0

2 seconds idle

On since Thu Aug 24 17:44 (EEST) on pts/1 from :0

49 minutes 30 seconds idle

On since Thu Aug 24 18:04 (EEST) on pts/2 from :0

32 minutes 42 seconds idle

New mail received Fri Oct 30 17:24 2020 (EET)

Unread since Fri Oct 30 17:13 2020 (EET)

No Plan.
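With the email stored in the Office field as in the transcript above, the mails array could be filled automatically instead of being hardcoded. The following is a sketch under assumptions, not a tested replacement for the array in the script: the function name build_mail_map and the UID range 1000-60000 are chosen only for illustration, and the Office value is taken to be the second comma-separated GECOS field.

```shell
#!/bin/bash
# build_mail_map MIN_UID MAX_UID
# Reads passwd-format lines on stdin and prints "user=email" for every
# account in the UID range whose second GECOS field (Office) contains an @.
# build_mail_map is a hypothetical helper name.
build_mail_map() {
    local min_uid=$1 max_uid=$2
    awk -F: -v min="$min_uid" -v max="$max_uid" '
        $3 >= min && $3 <= max {
            split($5, gecos, ",")   # GECOS: name,office,office phone,home phone
            if (gecos[2] ~ /@/) print $1 "=" gecos[2]
        }'
}

# Fill the associative array from the local account database.
# The UID range is an assumption; adjust it to your system.
declare -A mails
while IFS="=" read -r user email; do
    mails["$user"]=$email
done < <(getent passwd | build_mail_map 1000 60000)

for user in "${!mails[@]}"; do
    echo "$user -> ${mails[$user]}"
done
```

Accounts without an email in the Office field are simply left out, so they would receive no notification.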

Then it should be relatively easy to set the GECOS for multiple accounts, if you have the email for each existing local user account predefined in a text file.
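As a rough sketch of that idea, the snippet below reads "username email" pairs and prints one chfn command per account, so the result can be reviewed before anything is changed. It assumes the util-linux chfn (the variant with the Office / Office Phone / Home Phone prompts shown earlier), where -o sets the Office field; the shadow-utils chfn uses different option letters, so check man chfn on your system first. The function name gecos_commands and the sample data are made up for this example.

```shell
#!/bin/bash
# gecos_commands: read "username email" pairs on stdin and print one chfn
# command per pair. Empty lines and lines starting with # are skipped.
# gecos_commands is a hypothetical helper; -o is assumed to mean "office".
gecos_commands() {
    local user email
    while read -r user email; do
        [[ -z "$user" || "$user" == \#* || -z "$email" ]] && continue
        printf "chfn -o '%s' '%s'\n" "$email" "$user"
    done
}

# Sample input; in practice this would be read from your own text file
gecos_commands <<'EOF'
# username   email
georgi       georgi@fqdn-mail.com
acct3        acct3@fqdn-mail.com
EOF
```

Once the printed commands look right, they can be run as root, for instance by piping the output to a root shell.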

Hope this script will help some sysadmin out there, many thanks to Lachezar for allowing me to share the script here.

Enjoy ! 🙂