I was looking for an English Orthodox translation of the Old Testament (the Septuagint version) and found one split across many PDF files. I wanted to build a single PDF from all the separate Old Testament book files and put it online, since English speakers might find it convenient to download the Orthodox version of the Old Testament and read it offline on their computers.

Before I explain how I did it, I will take a short detour to explain a few things about the Septuagint, as this is interesting material you might not know.

The Septuagint (also referred to as the LXX or the Alexandrian Canon) is a translation of the Hebrew Bible and some related texts into Koine Greek, made by the legendary 70 Jewish scholars as early as the 2nd century BC. For those interested in Christianity, it is a curious fact that the number of Old Testament books differs among Protestant, Roman Catholic and Orthodox Christians, whereas the number of New Testament books is the same for all three.

So how many books are in the Roman Catholic, Protestant and Orthodox Old Testament?

The Old Testament in the Orthodox Holy Bible has 50 books (Slavonic versions of the Bible include 2 more, the Esdras books), whereas the Protestant Holy Bible includes only 39 Old Testament books and Roman Catholic Bibles have 46. Protestants chose to have fewer books (only 39) because some of the books in the Roman Catholic and Orthodox Bibles are referred to as Apocryphal, or Deuterocanonical, books. This doesn't mean that the extra 8 books in Orthodox Bibles are not God-inspired; it means they don't have the same historic authenticity as the canonical books the early Church accepted.

The Orthodox Church accepted the Septuagint (LXX) as divinely inspired for use in Church.

Now back to how I managed to merge (convert) multiple PDF files into a single PDF on my Debian Linux home router.

My first attempt was with ImageMagick's convert (in the same manner as I used to generate PDF files from pictures earlier), e.g.:

convert intro.pdf genesis.pdf exodus.pdf leviticus.pdf numbers.pdf deuteronomy.pdf … SINGLE-FILE.PDF

I waited quite a long time for the conversion to complete, but it seemed to be looping, so after 7 minutes I stopped it and decided to try something else. After a quick search I found pdftk.

pdftk has plenty of functions and is great for anyone who needs to merge, split, update, encrypt or repair corrupted PDFs on Linux:

apt-cache show pdftk |grep -i desc -A 17

Description: tool for manipulating PDF documents

If PDF is electronic paper, then pdftk is an electronic stapler-remover, hole-punch, binder, secret-decoder-ring, and X-Ray-glasses. Pdftk is a simple tool for doing everyday things with PDF documents. Keep one in the top drawer of your desktop and use it to:

– Merge PDF documents

– Split PDF pages into a new document

– Decrypt input as necessary (password required)

– Encrypt output as desired

– Fill PDF Forms with FDF Data and/or Flatten Forms

– Apply a Background Watermark

– Report PDF on metrics, including metadata and bookmarks

– Update PDF Metadata

– Attach Files to PDF Pages or the PDF Document

– Unpack PDF Attachments

– Burst a PDF document into single pages

– Uncompress and re-compress page streams

– Repair corrupted PDF (where possible)
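As an illustration of the "split" item in that list, here is a minimal sketch that copies a page range out of a PDF using pdftk's `cat` operation with a `first-last` range. The function name `extract_pages` and the `PDFTK` override variable are my own assumptions for this example, not part of pdftk.

```shell
# Hypothetical helper: copy pages FIRST..LAST of INPUT into OUTPUT.
# Usage: extract_pages INPUT FIRST LAST OUTPUT
# PDFTK may be overridden (e.g. PDFTK=echo) to print the command instead of running it.
extract_pages() {
    input=$1
    first=$2
    last=$3
    output=$4
    # Both page numbers must be non-empty and purely numeric.
    for n in "$first" "$last"; do
        case $n in
            ''|*[!0-9]*)
                echo "page numbers must be integers" >&2
                return 2
                ;;
        esac
    done
    "${PDFTK:-pdftk}" "$input" cat "$first-$last" output "$output"
}
```

For example, `extract_pages bible.pdf 1 10 first-ten-pages.pdf` would run `pdftk bible.pdf cat 1-10 output first-ten-pages.pdf` (the file names here are placeholders).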

To install pdftk on Debian Linux Lenny / Wheezy:

apt-get install --yes pdftk

…

Once installed, to convert a number of separate PDF files into a single (merged) PDF file:

pdftk file1.pdf file2.pdf file3.pdf cat output single-merged-pdf-file.pdf

pdftk intro.pdf genesis.pdf exodus.pdf leviticus.pdf numbers.pdf deuteronomy.pdf joshua.pdf judges.pdf ruth.pdf kingdoms_1.pdf kingdoms_2.pdf kingdoms_3.pdf kingdoms_4.pdf paraleipomenon_1.pdf paraleipomenon_2.pdf esdras_1.pdf esdras_2.pdf nehemiah.pdf tobit.pdf judith.pdf esther.pdf maccabees_1.pdf maccabees_2.pdf maccabees_3.pdf psalms.pdf job.pdf proverbs_of_solomon.pdf ecclesiastes.pdf song_of_songs.pdf wisdom_of_solomon.pdf wisdom_of_sirach.pdf hosea.pdf amos.pdf micah.pdf joel.pdf obadiah.pdf jonah.pdf nahum.pdf habbakuk.pdf zephaniah.pdf malachi.pdf isaiah.pdf jeremiah.pdf baruch.pdf lamentations_of_jeremiah.pdf an_epistle_of_jeremiah.pdf ezekiel.pdf daniel.pdf maccabees_4.pdf slavonic_appendix.pdf cat output Orthodox-English-translation-of-Old-Testament-Septuagint.pdf

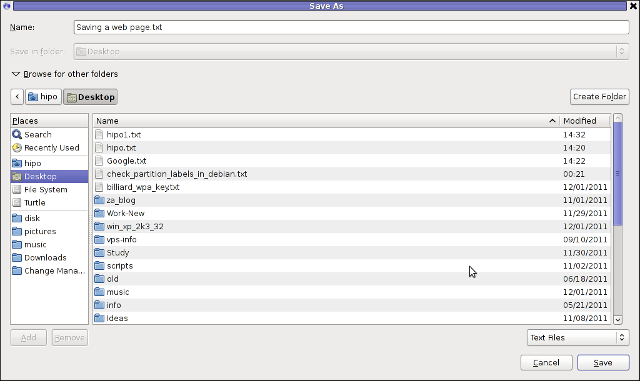
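Typing fifty file names on one line is error-prone, so one option is to keep the reading order in a text file and build the pdftk command from it. The sketch below assumes a list file with one PDF path per line. The name `merge_from_list` and the `PDFTK` override variable are hypothetical, chosen only for this example.

```shell
# Hypothetical helper: merge the PDFs named in LIST_FILE (one path per line,
# in reading order) into OUTPUT using "pdftk ... cat output".
# Usage: merge_from_list LIST_FILE OUTPUT
# PDFTK may be overridden (e.g. PDFTK=echo) for a dry run.
merge_from_list() {
    list_file=$1
    output=$2
    # Reset this function's positional parameters, then append each input.
    set --
    while IFS= read -r pdf; do
        [ -n "$pdf" ] || continue      # skip blank lines
        if [ ! -f "$pdf" ]; then
            echo "missing input: $pdf" >&2
            return 1
        fi
        set -- "$@" "$pdf"
    done < "$list_file"
    "${PDFTK:-pdftk}" "$@" cat output "$output"
}
```

With a file such as `books.txt` listing `intro.pdf`, `genesis.pdf`, `exodus.pdf` and so on, `merge_from_list books.txt merged.pdf` runs the same kind of command as above, and it stops early if a listed file is missing instead of handing pdftk a bad argument.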

And hooray, it worked! The resulting Old Testament (Orthodox) English translation from the Septuagint PDF is shared here

pdftk is also packaged for Fedora / CentOS / RHEL etc. (RPM distros), so to install it there:
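A quick sanity check on a merge like this is to compare page counts: the merged file should have as many pages as all the inputs together. The sketch below reads the `NumberOfPages` line from pdftk's `dump_data` output. The function names `page_count` and `check_merge`, and the `PDFTK` override variable, are assumptions made for this example.

```shell
# Hypothetical helper: print the page count pdftk reports for one PDF.
# PDFTK may be overridden with another command name for testing.
page_count() {
    "${PDFTK:-pdftk}" "$1" dump_data | awk '/^NumberOfPages:/ { print $2 }'
}

# Hypothetical check: succeed only if MERGED has as many pages as all inputs combined.
# Usage: check_merge MERGED INPUT1 [INPUT2 ...]
check_merge() {
    merged=$1
    shift
    total=0
    for pdf in "$@"; do
        n=$(page_count "$pdf")
        # Bail out if pdftk reported no page count for this file.
        [ -n "$n" ] || return 2
        total=$((total + n))
    done
    merged_pages=$(page_count "$merged")
    [ -n "$merged_pages" ] || return 2
    [ "$merged_pages" -eq "$total" ]
}
```

Something like `check_merge merged.pdf intro.pdf genesis.pdf exodus.pdf && echo OK` would then confirm that no pages were lost, at least by count.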

yum -y install pdftk

Or, if it is missing from the repositories, grab the respective RPM package and install it:

rpm -ivh pdftk-*yourarch.rpm
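If you script this across different machines, the two package-manager commands from this post can be selected automatically. This is only a sketch: `suggest_pdftk_install` is a hypothetical name, it prints the command rather than running it, and it assumes pdftk is actually available in the distribution's repositories.

```shell
# Hypothetical helper: print (not run) a pdftk install command
# for whichever of the two package managers is found on PATH.
suggest_pdftk_install() {
    if command -v apt-get >/dev/null 2>&1; then
        echo "apt-get install --yes pdftk"
    elif command -v yum >/dev/null 2>&1; then
        echo "yum -y install pdftk"
    else
        echo "no supported package manager found" >&2
        return 1
    fi
}
```

Printing instead of executing keeps the decision (and the root privileges) with whoever runs the script.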

pdftk also has Windows and Mac OS versions, in case you need to script merging multiple PDFs into a single one there. For more, check out the PDFtk Server homepage here