If you are sys admin of Apache Webserver running on Debian Linux relying on logrorate to rorate logs, you might want to change the default way logroration is done.

Little changes in the way Apache log files are served on busy servers can have positive outcomes on the overall way the server CPU units burden. A good logrotation strategy can also prevent your server from occasional extra overheads or downtimes.

The way Debian GNU / Linux process logs is well planned for small servers, however the default logroration Apache routine doesn't fit well for servers which process millions of client requests each day.

I happen to administrate, few servers which are constantly under a heavy load and have occasionally overload troubles because of Debian's logrorate default mechanism.

To cope with the situation I have made few modifications to /etc/logrorate.d/apache2 and decided to share it here hoping, this might help you too.

1. Rotate Apache acccess.log log file daily instead of weekly

On Debian Apache's logrorate script is in /etc/logrotate.d/apache2

The default file content will be like so like so:

debian:~# cat /etc/logrotate.d/apache2

/var/log/apache2/*.log {

weekly

missingok

rotate 52

size 1G

compress

delaycompress

notifempty

create 640 root adm

sharedscripts

postrotate

if [ -f "`. /etc/apache2/envvars ; echo ${APACHE_PID_FILE:-/var/run/apache2.pid}`" ]; then

/etc/init.d/apache2 reload > /dev/null

fi

endscript

}

To change the rotation from weekly to daily change:

weekly

to

#weekly

2. Disable access.log log file gzip compression

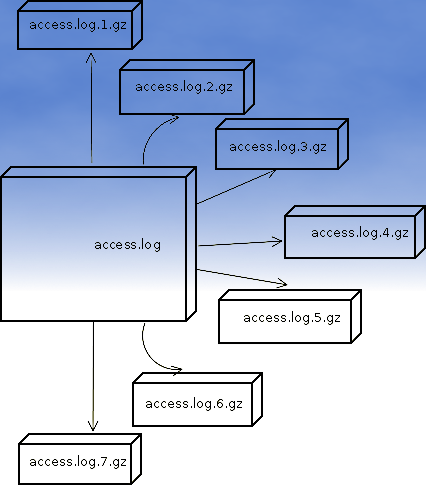

By default apache2 logrotate script is tuned ot make compression of rotated file (exmpl: copy access.log to access.log.1 and gzip it, copy access.log to access.log.2 and gzip it etc.). On servers where logs are many gigabytes, once logrotate initiates its scheduled work it will have to compress an enormous log record of apache requests. On very busy Apache servers from my experience, just for a day the log could grow up to approximately 8 / 10 Gigabytes.

I'm sure there are more busy servers out there, which log files are growing to over 100GB for just a single day.

Gzipping a 100GB file piece takes an enormous load on the CPU, as well as often takes long time. When this logrotation gzipping occurs at a moment where the servers CPU cores are already heavy loaded from Apache serving HTTP requests, Apache server becomes inaccessible to most of the clients.

Then for end clients various oddities are experienced, for example Apache dropped connection errors, webserver returning empty pages, or simply inability to respond to the client browser.

Sometimes as a result of the overload, even secure shell connection to SSHD to the server is impossible …

To prevent your server from this roration overloads remove logrorate's default access.log gzipping by commenting:

compress

to

#comment

3. Change maximum log roration by logrorate to be up to 30

By default logrorate is configured to create and keep up to 52 rotated and gzipped access.log files, changing this to a lower number is a good practice (in my view), in cases where log files grow daily to 10 or more GBs. Doing so will save a lot of disk space and reduce the chance the hard disk gets filled in because of the multiple rorated ungzipped enormous access.log files.

To tune the default keep max rorated logs to 30, change:

rotate 52

to

rotate 30

The way logrorate's apache log processing on RHEL / CentOS Linux is working better on high load servers, by default on CentOS logrorate is not configured to do log gzipping at all.

Here is the default /etc/logrorate.d/httpd script for

CentOS release 5.6 (Final)

[hipo@centos httpd]$ cat /etc/logrotate.d/httpd /var/log/httpd/*log {

missingok

notifempty

sharedscripts

postrotate

/bin/kill -HUP `cat /var/run/httpd.pid 2>/dev/null` 2> /dev/null || true

endscript

}