Sometimes, if you work in a company that follows PCI standards with very tight security, you might need to use custom, company-prepared RPM repositories that are accessible only through specific internally maintained hosts, or you might need a proxy node to reach an external Internet repository from the DMZ-ed, firewalled zone where the servers lie.

Hence, to keep the RPM-based servers up to date with the latest security patches and still install software with yum, one very useful feature of the yum package manager is the ability to use a proxy host through which you reach your RPM (Redhat Package Manager) files.

1. The http_proxy and https_proxy shell variables

To set a proxy host you need to define its IP address, hostname or Fully Qualified Domain Name (FQDN) in these variables.

By default http_proxy and https_proxy are empty. As you can guess, https_proxy is used when the communication channel is encrypted with Secure Socket Layer (SSL), i.e. when the repository has an https:// URL, while http_proxy is used for plain http:// URLs.

[root@rhel: ~]# echo $http_proxy

[root@rhel: ~]#
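Since several proxy-related variables can be set at once, it may help to list them all in one go instead of echoing them one by one. The following is only a small sketch; the function name show_proxy_env is made up for this example and is not part of yum or bash.

```shell
# show_proxy_env is a hypothetical helper name used only in this example.
# It prints every proxy-related variable found in the current environment,
# matching both lower-case and upper-case spellings (http_proxy, HTTPS_PROXY, ...).
show_proxy_env() {
    env | grep -i -E '^(http|https|ftp|no)_proxy=' || echo "no proxy variables set"
}

show_proxy_env
```

If nothing is exported yet, the helper prints "no proxy variables set", which matches the empty echo output above.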

2. Setting passwordless or password protected proxy host via http_proxy, https_proxy variables

There is a quick and very straightforward way to route traffic through a specific remote proxy server for the current session, using environment variables exported from the Bourne Again Shell (BASH):

a.) Set a password-free open proxy in the shell environment.

[root@centos: ~]# export https_proxy="https://remote-proxy-server:8080"

Now use yum as usual to update the list of available installable packages, or simply upgrade to the latest packages with, let's say:

[root@rhel: ~]# yum check-update && yum update

b.) Configuring password protected proxy for yum

If your proxy is password protected for even tighter security, you can provide the password on the command line as well.

[root@centos: ~]# export http_proxy="http://username:pAssW0rd@server:port/"

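Note that if the password contains special characters you will have to pass the string inside single quotes, or escape them, to make sure the shell hands the password over unchanged. Keep in mind that quoting only protects the string from the shell: characters that are reserved in URLs, such as @, # or :, may additionally need to be percent-encoded (for example %40 for @) before the client parses the proxy URL correctly. Before trying out the proxy with yum, echo the variable.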

[root@centos: ~]# export http_proxy='http://username:p@s#w:E@192.168.0.1:3128/'

[root@centos: ~]# echo $http_proxy

http://username:p@s#w:E@192.168.0.1:3128/
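If the client fails to split the user, password and host correctly because of characters like @, # or : inside the password, percent-encoding the password is one possible workaround. Below is a minimal sketch in plain POSIX shell; the urlencode function is a hypothetical helper written for this example (not a standard command), and it assumes the password consists of ASCII characters only.

```shell
# urlencode is a hypothetical helper: it percent-encodes every character
# that is not a letter, digit, dot, underscore, tilde or hyphen.
# Assumption: the input contains ASCII characters only.
urlencode() {
    s=$1
    out=
    while [ -n "$s" ]; do
        rest=${s#?}            # everything after the first character
        c=${s%"$rest"}         # the first character itself
        case $c in
            [a-zA-Z0-9._~-]) out=$out$c ;;
            *) out=$out$(printf '%%%02X' "'$c") ;;   # e.g. @ becomes %40
        esac
        s=$rest
    done
    printf '%s\n' "$out"
}

# Build the proxy URL from the example above with an encoded password.
pass_enc=$(urlencode 'p@s#w:E')
echo "http://username:${pass_enc}@192.168.0.1:3128/"
# prints: http://username:p%40s%23w%3AE@192.168.0.1:3128/
```

The printed string can then be exported as http_proxy in the same way as shown above.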

Then do whatever with yum:

[root@centos: ~]# yum check-update && yum search sharutils

If something is wrong and the proxy connection does not work, try to reach the repository manually with curl or wget:

[root@centos: ~]# curl -ilk http://download.fedoraproject.org/pub/epel/7/SRPMS/

HTTP/1.1 302 Found

Date: Tue, 07 Sep 2021 16:49:59 GMT

Server: Apache

X-Frame-Options: SAMEORIGIN

X-Xss-Protection: 1; mode=block

X-Content-Type-Options: nosniff

Referrer-Policy: same-origin

Location: http://mirror.telepoint.bg/epel/7/SRPMS/

Content-Type: text/plain

Content-Length: 0

AppTime: D=2264

X-Fedora-ProxyServer: proxy01.iad2.fedoraproject.org

X-Fedora-RequestID: YTeYOE3mQPHH_rxD0sdlGAAAA80

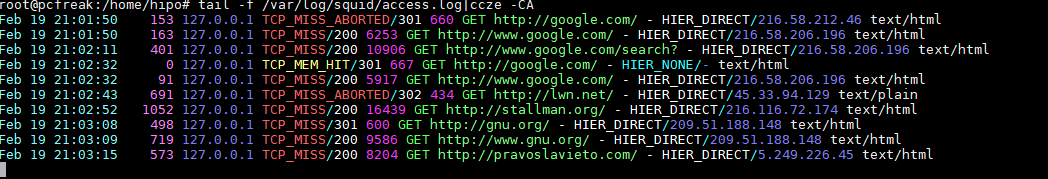

X-Cache: MISS from pcfreak

X-Cache-Lookup: MISS from pcfreak:3128

Via: 1.1 pcfreak (squid/4.6)

Connection: keep-alive
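When checking many servers it can be handier to look only at the HTTP status code instead of reading all headers. The sketch below maps a status code to a short hint; the function name explain_proxy_status and the wording of the hints are made up for this example, and the curl call is left commented out because it needs network access through your proxy.

```shell
# explain_proxy_status is a hypothetical helper: it turns the HTTP status
# code reported by curl into a short human-readable hint.
explain_proxy_status() {
    case $1 in
        2??|3??) echo "proxy OK: repository answered with HTTP $1" ;;
        407)     echo "proxy requires authentication (HTTP 407): check user and password" ;;
        403)     echo "request forbidden (HTTP 403): proxy ACL or repository denies access" ;;
        000)     echo "no HTTP response: proxy host or port is unreachable" ;;
        *)       echo "unexpected HTTP status $1" ;;
    esac
}

# Usage (needs network; curl prints 000 when it gets no HTTP response at all):
# code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 http://download.fedoraproject.org/pub/epel/7/SRPMS/)
# explain_proxy_status "$code"
```

For the curl output shown above the code would be 302, so the helper would report that the proxy works.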

Or, if you need to, you can test the user and password protected proxy with wget like so:

[root@centos: ~]# wget --proxy-user=USERNAME --proxy-password=PASSWORD http://your-proxy-domain.com/optional-rpms/

If you have lynx installed on the machine, you can check that authentication against the remote proxy succeeds with less typing:

[root@centos: ~]# lynx -pauth=USER:PASSWORD http://proxy-domain.com/optional-rpm/

3. Making yum proxy connection permanent via /etc/yum.conf

Perhaps the easiest and quickest way to keep the http_proxy / https_proxy configuration is to have it loaded automatically on each SSH login of your admin user (root), by storing it in /root/.bashrc or /root/.bash_profile, or globally in /etc/profile, /etc/profile.d/custom.sh, etc.

However, if you want to avoid hacks and keep the systems cleaner, the recommended "Redhat way", so to say, is to store the configuration inside /etc/yum.conf.

To do it via /etc/yum.conf you need to have records like these there:

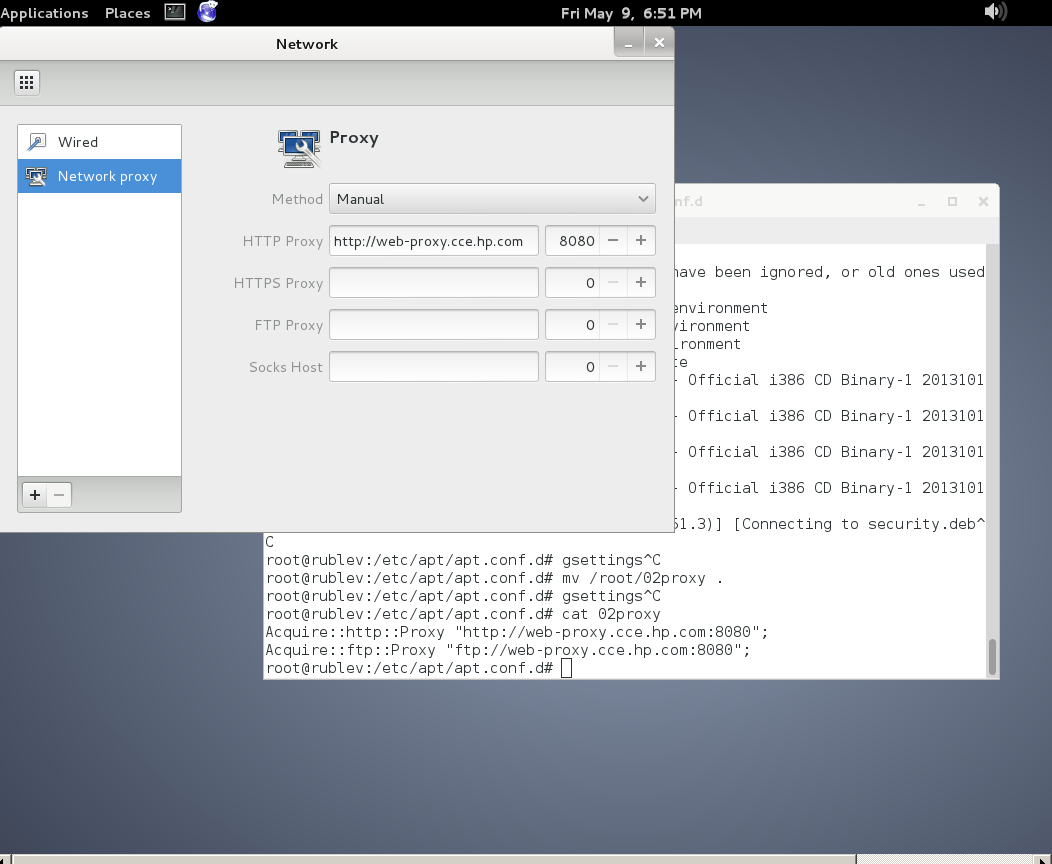

# The proxy server – proxy server:port number

proxy=http://mycache.mydomain.com:3128

# The account details for yum connections

proxy_username=yum-user

proxy_password=qwerty-secret-pass
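If you have to roll the same setting out to many servers, editing the file by hand gets tedious. The following is a rough sketch of how the proxy= record could be added or replaced from a script. The function name set_yum_proxy is hypothetical, and the sketch assumes the file contains only the [main] section (as a stock /etc/yum.conf usually does), so that a line appended at the end still belongs to [main].

```shell
# set_yum_proxy FILE URL  (hypothetical helper for this example)
# Removes any existing proxy= line from FILE and appends proxy=URL.
# Assumption: FILE has only a [main] section, so the appended line lands in it.
set_yum_proxy() {
    conf=$1
    url=$2
    tmp=$(mktemp) || return 1
    # grep exits with 1 when no lines are left over; that is fine here.
    grep -v '^proxy=' "$conf" > "$tmp" || true
    printf 'proxy=%s\n' "$url" >> "$tmp"
    cat "$tmp" > "$conf"       # overwrite in place, keeping owner and mode of FILE
    rm -f "$tmp"
}

# Usage (as root, after taking a backup of the file):
# set_yum_proxy /etc/yum.conf http://mycache.mydomain.com:3128
```

The proxy_username and proxy_password records are not touched by this sketch, so they still have to be added as shown above.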

4. Listing RPM repositories and their state

As I had to install the sharutils RPM package, which contains the file /bin/uuencode, on the server (on CentOS 7.9 Linux it is provided from Repo: base/7/x86_64), I had to check whether that repository was installed on the server.

To get a list of all available yum repositories:

[root@centos:/etc/yum.repos.d]# yum repolist all

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: centos.telecoms.bg

* epel: mirrors.netix.net

* extras: centos.telecoms.bg

* remi: mirrors.netix.net

* remi-php74: mirrors.netix.net

* remi-safe: mirrors.netix.net

* updates: centos.telecoms.bg

repo id repo name status

base/7/x86_64 CentOS-7 – Base enabled: 10,072

base-debuginfo/x86_64 CentOS-7 – Debuginfo disabled

base-source/7 CentOS-7 – Base Sources disabled

c7-media CentOS-7 – Media disabled

centos-kernel/7/x86_64 CentOS LTS Kernels for x86_64 disabled

centos-kernel-experimental/7/x86_64 CentOS Experimental Kernels for x86_64 disabled

centosplus/7/x86_64 CentOS-7 – Plus disabled

centosplus-source/7 CentOS-7 – Plus Sources disabled

cr/7/x86_64 CentOS-7 – cr disabled

epel/x86_64 Extra Packages for Enterprise Linux 7 – x86_64 enabled: 13,667

epel-debuginfo/x86_64 Extra Packages for Enterprise Linux 7 – x86_64 – Debug disabled

epel-source/x86_64 Extra Packages for Enterprise Linux 7 – x86_64 – Source disabled

epel-testing/x86_64 Extra Packages for Enterprise Linux 7 – Testing – x86_64 disabled

epel-testing-debuginfo/x86_64 Extra Packages for Enterprise Linux 7 – Testing – x86_64 – Debug disabled

epel-testing-source/x86_64 Extra Packages for Enterprise Linux 7 – Testing – x86_64 – Source disabled

extras/7/x86_64 CentOS-7 – Extras enabled: 500

extras-source/7 CentOS-7 – Extras Sources disabled

fasttrack/7/x86_64 CentOS-7 – fasttrack disabled

remi Remi's RPM repository for Enterprise Linux 7 – x86_64 enabled: 7,229

remi-debuginfo/x86_64 Remi's RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-glpi91 Remi's GLPI 9.1 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-glpi92 Remi's GLPI 9.2 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-glpi93 Remi's GLPI 9.3 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-glpi94 Remi's GLPI 9.4 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-modular Remi's Modular repository for Enterprise Linux 7 – x86_64 disabled

remi-modular-test Remi's Modular testing repository for Enterprise Linux 7 – x86_64 disabled

remi-php54 Remi's PHP 5.4 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php55 Remi's PHP 5.5 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php55-debuginfo/x86_64 Remi's PHP 5.5 RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

!remi-php56 Remi's PHP 5.6 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php56-debuginfo/x86_64 Remi's PHP 5.6 RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php70 Remi's PHP 7.0 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php70-debuginfo/x86_64 Remi's PHP 7.0 RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php70-test Remi's PHP 7.0 test RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php70-test-debuginfo/x86_64 Remi's PHP 7.0 test RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php71 Remi's PHP 7.1 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php71-debuginfo/x86_64 Remi's PHP 7.1 RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php71-test Remi's PHP 7.1 test RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php71-test-debuginfo/x86_64 Remi's PHP 7.1 test RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

!remi-php72 Remi's PHP 7.2 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php72-debuginfo/x86_64 Remi's PHP 7.2 RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php72-test Remi's PHP 7.2 test RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php72-test-debuginfo/x86_64 Remi's PHP 7.2 test RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php73 Remi's PHP 7.3 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php73-debuginfo/x86_64 Remi's PHP 7.3 RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php73-test Remi's PHP 7.3 test RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php73-test-debuginfo/x86_64 Remi's PHP 7.3 test RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php74 Remi's PHP 7.4 RPM repository for Enterprise Linux 7 – x86_64 enabled: 423

remi-php74-debuginfo/x86_64 Remi's PHP 7.4 RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php74-test Remi's PHP 7.4 test RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php74-test-debuginfo/x86_64 Remi's PHP 7.4 test RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php80 Remi's PHP 8.0 RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php80-debuginfo/x86_64 Remi's PHP 8.0 RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-php80-test Remi's PHP 8.0 test RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-php80-test-debuginfo/x86_64 Remi's PHP 8.0 test RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-safe Safe Remi's RPM repository for Enterprise Linux 7 – x86_64 enabled: 4,549

remi-safe-debuginfo/x86_64 Remi's RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

remi-test Remi's test RPM repository for Enterprise Linux 7 – x86_64 disabled

remi-test-debuginfo/x86_64 Remi's test RPM repository for Enterprise Linux 7 – x86_64 – debuginfo disabled

updates/7/x86_64 CentOS-7 – Updates enabled: 2,741

updates-source/7 CentOS-7 – Updates Sources disabled

zabbix/x86_64 Zabbix Official Repository – x86_64 enabled: 178

zabbix-debuginfo/x86_64 Zabbix Official Repository debuginfo – x86_64 disabled

zabbix-frontend/x86_64 Zabbix Official Repository frontend – x86_64 disabled

zabbix-non-supported/x86_64 Zabbix Official Repository non-supported – x86_64 enabled: 5

repolist: 39,364

[root@centos:/etc/yum.repos.d]# yum repolist all|grep -i 'base/7/x86_64'

base/7/x86_64 CentOS-7 – Base enabled: 10,072
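With a list as long as the one above, grep alone still leaves you reading the status by eye. A small awk filter can report the state of a single repository id directly. This is just a sketch; the function name repo_state is made up for this example, and it assumes the output format shown above, where enabled repositories carry the text "enabled:" followed by the package count.

```shell
# repo_state REPOID  (hypothetical helper for this example)
# Reads "yum repolist all" output on stdin and prints "enabled" or
# "disabled" for the given repo id. Exits with 1 if the id is not listed.
repo_state() {
    awk -v id="$1" '
        $1 == id {
            print ($0 ~ / enabled:/ ? "enabled" : "disabled")
            found = 1
        }
        END { exit !found }
    '
}

# Usage:
# yum repolist all | repo_state base/7/x86_64
```

Because the helper reads standard input, it works equally well on a saved copy of the repolist output from another server.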

As you can see, on CentOS 7 the repository that provides sharutils is enabled by default. However, this is not the case on Redhat 7.9, so to install sharutils there you can enable the needed RPM repository one time, just for this command:

[root@centos:/etc/yum.repos.d]# yum --enablerepo=rhel-7-server-optional-rpms install sharutils

To install zabbix-agent on the same Redhat server without having to know precisely which RPM repository provides the zabbix agent (in that case it was Repo: 3party/7Server/x86_64), I had to:

[root@centos:/etc/yum.repos.d]# yum --enablerepo \* install zabbix-agent zabbix-sender

Permanently enabling repositories is of course possible by manually editing an existing configuration file, or creating a fresh new one, on CentOS / Fedora under the directory /etc/yum.repos.d/.
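The manual edit usually boils down to changing enabled=0 to enabled=1 inside the section of the repository you want. Here is a minimal sketch of doing that with awk; the function name enable_repo is hypothetical, and the result is printed to standard output rather than written back, so you can review it first. A section that has no enabled= line at all is left unchanged.

```shell
# enable_repo FILE REPOID  (hypothetical helper for this example)
# Prints FILE with "enabled=1" set inside the [REPOID] section only.
enable_repo() {
    awk -v id="$2" '
        /^\[/ { in_sec = ($0 == ("[" id "]")) }      # track the current section
        in_sec && /^enabled[ \t]*=/ { print "enabled=1"; next }
        { print }
    ' "$1"
}

# Usage (file and repo id are only examples):
# enable_repo /etc/yum.repos.d/CentOS-Base.repo centosplus > /tmp/CentOS-Base.repo.new
```

After checking the generated file you can copy it over the original one in /etc/yum.repos.d/.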

On Redhat Enterprise Linux servers it is easier to use the subscription-manager command instead, like this:

[root@rhel:/root]# subscription-manager repos --disable=epel/7Server/x86_64

[root@rhel:/root]# subscription-manager repos --enable=rhel-6-server-optional-rpms