Logging to a file in GNU / Linux and FreeBSD is as simple as redirecting the output, e.g.:

echo "$(date) Whatever" >> /home/hipo/log/output_file_log.txt

or by piping to the tee command:

echo "$(date) Service has Crashed" | tee -a /home/hipo/log/output_file_log.txt
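The difference between the two forms is easy to check for yourself. Below is a minimal sketch, assuming a throwaway log file created with mktemp (the logfile variable is only for this demo and is not part of the original examples):

```shell
#!/bin/bash
# Hypothetical scratch log file, used only for this demo
logfile=$(mktemp)

# ">>" appends to the file and prints nothing on the terminal
echo "$(date) appended silently" >> "$logfile"

# "tee -a" appends to the file AND prints the line on the terminal
echo "$(date) appended and printed" | tee -a "$logfile"

# "tee -a" with its own output discarded behaves like ">>"
echo "$(date) appended, tee output discarded" | tee -a "$logfile" > /dev/null

# Show how many lines ended up in the log
wc -l < "$logfile"
```

So tee is the one to pick when you want to watch the messages on the console while they are being logged.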

But what if you need to create a robust, full-featured bash logging function for a shell script that will run continuously as a daemon in the background and write all of its output to an external log file?

In the article below, I give an example logging script in bash, as well as a small example of how a specially crafted named pipe can be used as a buffer whose content is later stored to a file of your choice.

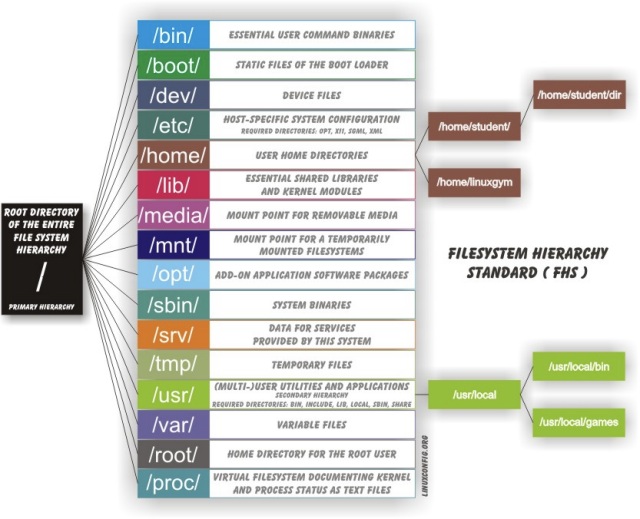

Finally, I found it interesting to say a few words about the logger command, which can be used to log anything to many of the common / general Linux log files stored under /var/log/ – i.e. /var/log/syslog /var/log/user /var/log/daemon /var/log/mail etc.

1. Bash script function for logging write_log();

Perhaps the simplest method is just to use a small function routine in your shell script like this:

LOG_FILE='/root/log.txt';

write_log()

{

while IFS= read -r text

do

LOGTIME=$(date "+%Y-%m-%d %H:%M:%S")

# If log file is not defined, just echo the output

if [ -z "$LOG_FILE" ]; then

echo "$LOGTIME: $text";

else

LOG="$LOG_FILE.$(date +%Y%m%d)"

touch "$LOG"

if [ ! -f "$LOG" ]; then echo "ERROR!! Cannot create log file $LOG. Exiting."; exit 1; fi

echo "$LOGTIME: $text" | tee -a "$LOG";

fi

done

}

- Using the function from within the script itself, or from an external script that sources it, to write to the defined log file

echo "Skipping to next copy" | write_log
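Since the function reads from standard input, it can log much more than a single echo. The following is a minimal self-contained sketch, assuming a hypothetical LOG_FILE inside a temporary directory (so it runs without root) and a trimmed-down copy of the function:

```shell
#!/bin/bash
# Hypothetical log location for this demo; the article's function uses /root/log.txt
LOG_FILE="$(mktemp -d)/log.txt"

# Trimmed-down variant of write_log: timestamp every input line,
# print it and append it to a per-day log file
write_log() {
    local text logtime log
    while IFS= read -r text; do
        logtime=$(date '+%Y-%m-%d %H:%M:%S')
        log="$LOG_FILE.$(date +%Y%m%d)"
        echo "$logtime: $text" | tee -a "$log"
    done
}

# Log a single message
echo "Skipping to next copy" | write_log

# Log everything a command prints, errors included
# (2>&1 merges stderr into the pipe)
ls /nonexistent-directory 2>&1 | write_log

# Log the output of a whole group of commands at once
{
    echo "backup started"
    echo "backup finished"
} | write_log
```

Every line ends up in a file named after LOG_FILE plus the current date, e.g. log.txt.20190826.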

2. Use Unix named pipes to pass data – Small intro on what a Unix Named Pipe is.

Named Pipe – a named pipe (also known as a FIFO (First In First Out) for its behavior) is an extension to the traditional pipe concept on Unix and Unix-like systems, and is one of the methods of inter-process communication (IPC). The concept is also found in OS/2 and Microsoft Windows, although the semantics differ substantially. A traditional pipe is "unnamed" and lasts only as long as the process. A named pipe, however, can last as long as the system is up, beyond the life of the process. It can be deleted if no longer used.

Usually a named pipe appears as a file, and generally processes attach to it for IPC.

Now that named pipes have been briefly explained for those who hear about them for the first time, it's time to say that a named pipe in Unix / Linux is created with the mkfifo command. The syntax is straightforward:

mkfifo /tmp/name-of-named-pipe

Some older Linux systems and older bash shell scripts used mknod for the same purpose.
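Both ways of creating a named pipe can be tried safely in a scratch directory. This is a minimal sketch, assuming a temporary directory made with mktemp (the workdir variable and the pipe names are hypothetical):

```shell
#!/bin/bash
# Hypothetical scratch directory, removed again when the script exits
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT

mkfifo "$workdir/pipe-a"      # the usual way
mknod "$workdir/pipe-b" p     # the older equivalent: "p" asks for a FIFO

# "[ -p file ]" is true only when the file exists and is a named pipe
for f in "$workdir"/pipe-*; do
    if [ -p "$f" ]; then
        echo "$f is a named pipe"
    fi
done

# In "ls -l" output, named pipes show a leading "p" in the mode column
ls -l "$workdir"
```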

So the idea behind the logging script is to read input from a simple named pipe and use the date command to log the exact time each message was received. Here is the script:

#!/bin/bash

named_pipe='/tmp/output-named-pipe';

output_named_log='/tmp/output-named-log.txt';

if [ -p "$named_pipe" ]; then

rm -f "$named_pipe"

fi

mkfifo "$named_pipe"

while true; do

read -r LINE < "$named_pipe"

echo "$(date): $LINE" >> "$output_named_log"

done

With the script running in the background, any other script can get its output logged, prefixed with a nice timestamp generated by the date command, simply by writing to the logging buffer like so:

echo 'Using Named pipes is so cool' > /tmp/output-named-pipe

echo 'Disk is full on a trigger' > /tmp/output-named-pipe

- Getting the output with the date timestamp

# cat /tmp/output-named-log.txt

Mon Aug 26 15:21:29 EEST 2019: Using Named pipes is so cool

Mon Aug 26 15:21:54 EEST 2019: Disk is full on a trigger
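One thing to keep in mind is that a loop which reopens the pipe for every line reads exactly one line per open, so a writer that sends several lines in one go may lose some of them. A possible variation, shown here only as a sketch under assumptions (temporary paths and the log_reader helper name are hypothetical), opens the pipe once for the whole loop on both sides:

```shell
#!/bin/bash
# Hypothetical paths inside a private temp dir, cleaned up on exit
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
pipe="$workdir/log-pipe"
log="$workdir/log.txt"
mkfifo "$pipe"

# Reader: opens the pipe once and timestamps every line it receives.
# The loop ends when the last writer closes its end of the pipe.
log_reader() {
    while IFS= read -r line; do
        printf '%s: %s\n' "$(date)" "$line"
    done < "$pipe" >> "$log"
}
log_reader &
reader_pid=$!

# Writer: keep file descriptor 3 open on the pipe so that
# several messages travel through a single open
exec 3> "$pipe"
echo 'Using Named pipes is so cool' >&3
echo 'Disk is full on a trigger' >&3
exec 3>&-     # closing the last writer signals end-of-file to the reader

wait "$reader_pid"
cat "$log"
```

For a long-running daemon you would keep the reader alive by restarting the loop whenever it ends, as the original script does with while true.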

If you wonder why it is worth using named pipes for logging: they need no setup beyond mkfifo, and for simple one-way local messaging they generally perform better (carry less overhead) than Unix sockets.

3. Logging messages to system log files with logger

If you need a quick one-time way to log any message of your choice with a standard logging timestamp, take a look at logger (part of the bsdutils package on Debian-based distributions). It is a command used to enter messages into the system log. To use it, simply invoke it with a message, and by default it will log it to the common /var/log/syslog logfile:

root@linux:/root# logger 'Here we go, logging'

root@linux:/root # tail -n 3 /var/log/syslog

Aug 26 15:41:01 localhost CRON[24490]: (root) CMD (chown qscand:qscand -R /var/run/clamav/ 2>&1 >/dev/null)

Aug 26 15:42:01 localhost CRON[24547]: (root) CMD (chown qscand:qscand -R /var/run/clamav/ 2>&1 >/dev/null)

Aug 26 15:42:20 localhost hipo: Here we go, logging

If you have taken some time to read any of the init.d scripts on Debian / Fedora / RHEL / CentOS Linux etc., you will have noticed that the logger logging facility is heavily used.

With logger you can write messages with different facilities and priorities. For example, to write an error message to the mail.* logs, you can use:

logger -i -p mail.err "Output of mail processing script"

To log a normal, non-error (notice priority) message with logger to the /var/log/mail.log system log:

logger -i -p mail.notice "Output of mail processing script"

The whole list of facility names and priority levels supported by logger (as taken from its current Linux manual) is as follows:

FACILITIES AND LEVELS

Valid facility names are:

auth

authpriv for security information of a sensitive nature

cron

daemon

ftp

kern cannot be generated from userspace process, automatically converted to user

lpr

mail

news

syslog

user

uucp

local0

to

local7

security deprecated synonym for auth

Valid level names are:

emerg

alert

crit

err

warning

notice

info

debug

panic deprecated synonym for emerg

error deprecated synonym for err

warn deprecated synonym for warning

For the priority order and intended purposes of these facilities and levels, see syslog(3).
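A typo in a facility or level name is easy to make, so it can help to check a facility.level pair before handing it to logger -p. Here is a minimal sketch; the is_valid_priority helper and the two variables are hypothetical names made up for this example, and the name lists are the non-deprecated ones from the logger manual:

```shell
#!/bin/bash
# Non-deprecated names accepted by "logger -p", per its manual
FACILITIES="auth authpriv cron daemon ftp kern lpr mail news syslog user uucp local0 local1 local2 local3 local4 local5 local6 local7"
LEVELS="emerg alert crit err warning notice info debug"

# Hypothetical helper: returns 0 when $1 has the form facility.level
# and both parts are in the lists above
is_valid_priority() {
    local facility=${1%%.*} level=${1#*.}
    [ "$facility" != "$1" ] || return 1      # no dot in the argument
    case " $FACILITIES " in *" $facility "*) ;; *) return 1 ;; esac
    case " $LEVELS " in *" $level "*) ;; *) return 1 ;; esac
}

for p in mail.err local3.debug auth-priv.info mail.loud; do
    if is_valid_priority "$p"; then
        echo "ok:  $p"
    else
        echo "bad: $p"
    fi
done
```

The first two pairs are reported as ok, while auth-priv.info (the facility is spelled authpriv) and mail.loud (no such level) are reported as bad.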

If you just want to log to the main Linux log file (be it /var/log/syslog or /var/log/messages, depending on the Linux distribution), just type the message, even without any shell quoting:

logger The reason to reboot the server Currently was a System security Update

So what else is logger useful for?

In addition to being a good diagnostic tool, you can use logger to test whether all basic system logs with their respective priorities work as expected. This is especially useful because, as I've seen on cloud-hosted OpenXEN based servers while working as a SAP consultant, logging to the basic log files sometimes stops for months or even years due to syslog and syslog-ng problems caused by hangs in other third-party scripts and programs.

To test that all basic logging facilities and priorities on the system logs work as expected, use the following logger-test-all-basic-log-logging-facilities.sh shell script.

#!/bin/bash

# (this is all one line!)

for i in {auth,authpriv,cron,daemon,kern,lpr,mail,mark,news,syslog,user,uucp,local0,local1,local2,local3,local4,local5,local6,local7}

do

for k in {debug,info,notice,warning,err,crit,alert,emerg}

do

logger -p "$i.$k" "Test daemon message, facility $i priority $k"

done

done

Note that on different Linux distribution versions the facility and priority names might differ, so if you get

logger: unknown facility name: {auth,auth-priv,cron,daemon,kern,lpr,mail,mark,news, \

syslog,user,uucp,local0,local1,local2,local3,local4, \

local5,local6,local7}

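first make sure the facility list in the for loop is on a single line with no spaces (otherwise bash does not expand the braces and passes the whole list to logger literally), then check and set the proper names as described in the logger man page.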

4. Using a file descriptor that will output to a pre-set log file

Another way is to add the following code to the beginning of the script:

#!/bin/bash

exec 3>&1 4>&2

trap 'exec 2>&4 1>&3' 0 1 2 3

exec 1>log.out 2>&1

# Everything below will go to the file 'log.out':

The code explained

exec 3>&1 4>&2

- Saves file descriptors so they can be restored to whatever they were before redirection or used themselves to output to whatever they were before the following redirect.

trap 'exec 2>&4 1>&3' 0 1 2 3

- Restores the file descriptors on script exit (0) and on signals 1 (HUP), 2 (INT) and 3 (QUIT). Not generally necessary since they should be restored when the sub-shell exits.

exec 1>log.out 2>&1

- Redirect stdout to file log.out then redirect stderr to stdout. Note that the order is important when you want them going to the same file. stdout must be redirected before stderr is redirected to stdout.

From then on, to see output on the console (maybe), you can simply redirect to &3. For example:

echo "$(date) : Do print whatever you want logging to &3 file handler" >&3
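To see the whole flow in one place, here is a minimal sketch of the same technique, assuming a temporary log file from mktemp instead of log.out (the logfile variable and the messages are made up for the demo):

```shell
#!/bin/bash
# Hypothetical log file for this demo (the article uses log.out)
logfile=$(mktemp)

exec 3>&1 4>&2            # keep copies of the original stdout and stderr
exec 1>"$logfile" 2>&1    # from here on, stdout and stderr go to the log

echo "this line goes to the log"
ls /nonexistent-path      # the error message goes to the log as well
echo "this line goes to the console" >&3

# Put stdout and stderr back and close the saved copies
exec 1>&3 2>&4 3>&- 4>&-
echo "back on the console"
```

After the run, the log file holds the first echo and the ls error, while the other two messages appear only on the console.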

I initially found this very nice bash code in the serverfault.com post "How can I fully log all bash script actions". Unfortunately, on the latest Debian 10 Buster Linux, which comes prebundled with bash shell 5.0.3(1)-release, the code doesn't behave exactly as expected, but on older bash versions it works fine.

Summing it up

To summarize briefly, there are plenty of ways to do logging from a shell script, but using a function or a named pipe is the most classic. Sometimes, if a script is supposed to write user or other script output to a common file such as syslog, the logger command can be used, as it is present across most modern Linux distros.

If you know a better way, please drop a comment and I'll add it to this article.