Posts Tagged ‘denial of service’

Wednesday, March 28th, 2012

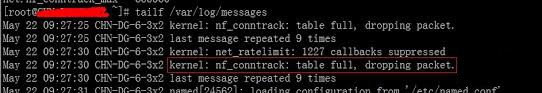

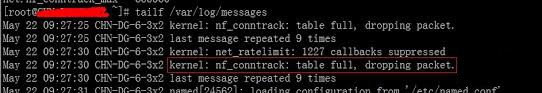

On many busy servers, you might find kernel log messages like the following in /var/log/syslog or in the dmesg output:

nf_conntrack: table full, dropping packet

The message usually repeats many times:

[1737157.057528] nf_conntrack: table full, dropping packet.

[1737157.160357] nf_conntrack: table full, dropping packet.

[1737157.260534] nf_conntrack: table full, dropping packet.

[1737157.361837] nf_conntrack: table full, dropping packet.

[1737157.462305] nf_conntrack: table full, dropping packet.

[1737157.564270] nf_conntrack: table full, dropping packet.

[1737157.666836] nf_conntrack: table full, dropping packet.

[1737157.767348] nf_conntrack: table full, dropping packet.

[1737157.868338] nf_conntrack: table full, dropping packet.

[1737157.969828] nf_conntrack: table full, dropping packet.

[1737157.969928] nf_conntrack: table full, dropping packet

[1737157.989828] nf_conntrack: table full, dropping packet

[1737162.214084] __ratelimit: 83 callbacks suppressed

There are two types of servers on which I've encountered this message:

1. Xen / OpenVZ VPS (Virtual Private Servers)

2. ISPs (Internet providers) with heavy-traffic NAT network routers

I. What is the meaning of nf_conntrack: table full dropping packet error message

In short, the message appears because the number of tracked connections has reached the kernel's configured maximum (nf_conntrack_max), so packets belonging to new connections are dropped.

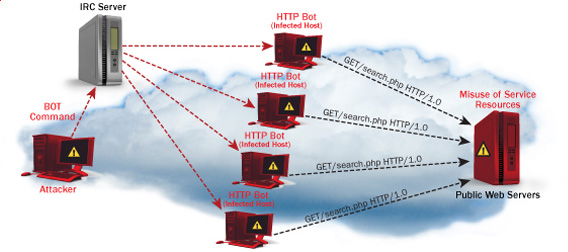

The common reason is heavy traffic passing through the server or, very often, a DoS or DDoS (Distributed Denial of Service) attack. Sometimes the error is the result of bad server planning (incorrect data about the traffic load expected by a company or companies) or simply a sysadmin error…

– Checking the current maximum nf_conntrack value assigned on host:

linux:~# cat /proc/sys/net/ipv4/netfilter/ip_conntrack_max

65536

– An alternative way to check the current kernel values for nf_conntrack is through sysctl:

linux:~# /sbin/sysctl -a|grep -i nf_conntrack_max

error: permission denied on key 'net.ipv4.route.flush'

net.netfilter.nf_conntrack_max = 65536

error: permission denied on key 'net.ipv6.route.flush'

net.nf_conntrack_max = 65536

– Check the current number of active nf_conntrack connections

To check how many connections are currently being tracked on a system:

linux:~# /sbin/sysctl net.netfilter.nf_conntrack_count

net.netfilter.nf_conntrack_count = 12742
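To judge how close the table is to filling up, the current count can be compared against the maximum. The following is only a minimal sketch: the function name conntrack_usage_pct is my own invention (it is not part of sysctl or any other tool), and it takes the two numbers as arguments so it can be tried with the values shown in the outputs above.

```shell
#!/bin/sh
# Hypothetical helper: print the percentage of the conntrack table in use.
# $1 = current number of entries (nf_conntrack_count)
# $2 = configured limit (nf_conntrack_max)
conntrack_usage_pct() {
    count=$1
    max=$2
    # Integer arithmetic, result is rounded down
    echo $(( count * 100 / max ))
}
```

With the numbers above, conntrack_usage_pct 12742 65536 reports a table that is roughly one fifth full. On a live system the two arguments could be taken from /proc/sys/net/netfilter/nf_conntrack_count and /proc/sys/net/netfilter/nf_conntrack_max, assuming the nf_conntrack module is loaded.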

The tracked connections are allocated dynamically for each new successful TCP/IP NAT-ted connection. By the way, on systems that work normally without the dmesg log being flooded with the message, the output of lsmod is:

linux:~# /sbin/lsmod | egrep 'ip_tables|conntrack'

ip_tables 9899 1 iptable_filter

x_tables 14175 1 ip_tables

On servers which are hitting the nf_conntrack: table full, dropping packet error, lsmod shows extra modules related to nf_conntrack as loaded:

linux:~# /sbin/lsmod | egrep 'ip_tables|conntrack'

nf_conntrack_ipv4 10346 3 iptable_nat,nf_nat

nf_conntrack 60975 4 ipt_MASQUERADE,iptable_nat,nf_nat,nf_conntrack_ipv4

nf_defrag_ipv4 1073 1 nf_conntrack_ipv4

ip_tables 9899 2 iptable_nat,iptable_filter

x_tables 14175 3 ipt_MASQUERADE,iptable_nat,ip_tables

II. Remove nf_conntrack support completely if it is not really necessary

It is good practice to limit, or avoid completely, the use of iptables NAT rules; this keeps the kernel log from being flooded with these messages and stops the system from dropping connections.

Another option is to completely remove any modules related to nf_conntrack, iptable_nat and nf_nat.

To remove nf_conntrack support from the running Linux kernel, for instance if the system is not used for Network Address Translation, use:

/sbin/rmmod iptable_nat

/sbin/rmmod ipt_MASQUERADE

/sbin/rmmod nf_nat

/sbin/rmmod nf_conntrack_ipv4

/sbin/rmmod nf_conntrack

/sbin/rmmod nf_defrag_ipv4

Once the modules are removed, be sure not to use any iptables -t nat .. rules. Even an attempt to list the NAT rules with iptables -t nat -L -n will force the kernel to load the nf_conntrack modules again.

By the way, the nf_conntrack: table full, dropping packet. message can be observed on all GNU / Linux distributions, so it is not a distribution-specific bug or a distro kernel customization.

III. Fixing the nf_conntrack … dropping packets error

– One temporary fix, if you need to keep your iptables NAT rules, is:

linux:~# sysctl -w net.netfilter.nf_conntrack_max=131072

I say temporary, because raising nf_conntrack_max doesn't guarantee things will run smoothly from now on.

However, on many servers without very heavy traffic, just raising net.netfilter.nf_conntrack_max to a high enough value (131072 here) will be enough to resolve the hassle.

– Increasing the size of nf_conntrack hash-table

The hash table, which stores the lists of conntrack entries, should have its hashsize value increased proportionally whenever net.netfilter.nf_conntrack_max is raised.

linux:~# echo 32768 > /sys/module/nf_conntrack/parameters/hashsize

The rule to calculate the right value to set is:

hashsize = nf_conntrack_max / 4
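As a small worked example of the rule above, the sketch below computes the hashsize for a given nf_conntrack_max. The helper name conntrack_hashsize is hypothetical; it only does the division and does not touch the system.

```shell
#!/bin/sh
# Hypothetical helper: apply the rule of thumb hashsize = nf_conntrack_max / 4
# $1 = the nf_conntrack_max value you intend to set
conntrack_hashsize() {
    echo $(( $1 / 4 ))
}
```

For the nf_conntrack_max of 131072 used in this article, the function prints 32768, which is the value written to /sys/module/nf_conntrack/parameters/hashsize above.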

– To store the changes permanently:

a) put into /etc/sysctl.conf:

linux:~# echo 'net.netfilter.nf_conntrack_max = 131072' >> /etc/sysctl.conf

linux:~# /sbin/sysctl -p

b) put in /etc/rc.local (before the exit 0 line):

echo 32768 > /sys/module/nf_conntrack/parameters/hashsize

Note: Be careful with this variable; in my experience, raising it to too high a value (especially on Xen-patched kernels) could freeze the system.

Raising the value too high can also freeze a regular Linux server running on old hardware.

– For diagnosing nf_conntrack there is the /proc/sys/net/netfilter directory, which the kernel keeps in memory. There you can find dynamically updated values which give information about nf_conntrack operations in "real time":

linux:~# cd /proc/sys/net/netfilter

linux:/proc/sys/net/netfilter# ls -al nf_log/

total 0

dr-xr-xr-x 0 root root 0 Mar 23 23:02 ./

dr-xr-xr-x 0 root root 0 Mar 23 23:02 ../

-rw-r--r-- 1 root root 0 Mar 23 23:02 0

-rw-r--r-- 1 root root 0 Mar 23 23:02 1

-rw-r--r-- 1 root root 0 Mar 23 23:02 10

-rw-r--r-- 1 root root 0 Mar 23 23:02 11

-rw-r--r-- 1 root root 0 Mar 23 23:02 12

-rw-r--r-- 1 root root 0 Mar 23 23:02 2

-rw-r--r-- 1 root root 0 Mar 23 23:02 3

-rw-r--r-- 1 root root 0 Mar 23 23:02 4

-rw-r--r-- 1 root root 0 Mar 23 23:02 5

-rw-r--r-- 1 root root 0 Mar 23 23:02 6

-rw-r--r-- 1 root root 0 Mar 23 23:02 7

-rw-r--r-- 1 root root 0 Mar 23 23:02 8

-rw-r--r-- 1 root root 0 Mar 23 23:02 9

IV. Decreasing other nf_conntrack NAT time-out values to prevent server against DoS attacks

Generally, the default values of the nf_conntrack_* time-outs are unnecessarily large.

Therefore, with large flows of traffic, even if you increase nf_conntrack_max, the conntrack table can shortly overflow again and the server will drop connections. To prevent this, check and decrease the other nf_conntrack connection tracking timeout values:

linux:~# sysctl -a | grep conntrack | grep timeout

net.netfilter.nf_conntrack_generic_timeout = 600

net.netfilter.nf_conntrack_tcp_timeout_syn_sent = 120

net.netfilter.nf_conntrack_tcp_timeout_syn_recv = 60

net.netfilter.nf_conntrack_tcp_timeout_established = 432000

net.netfilter.nf_conntrack_tcp_timeout_fin_wait = 120

net.netfilter.nf_conntrack_tcp_timeout_close_wait = 60

net.netfilter.nf_conntrack_tcp_timeout_last_ack = 30

net.netfilter.nf_conntrack_tcp_timeout_time_wait = 120

net.netfilter.nf_conntrack_tcp_timeout_close = 10

net.netfilter.nf_conntrack_tcp_timeout_max_retrans = 300

net.netfilter.nf_conntrack_tcp_timeout_unacknowledged = 300

net.netfilter.nf_conntrack_udp_timeout = 30

net.netfilter.nf_conntrack_udp_timeout_stream = 180

net.netfilter.nf_conntrack_icmp_timeout = 30

net.netfilter.nf_conntrack_events_retry_timeout = 15

net.ipv4.netfilter.ip_conntrack_generic_timeout = 600

net.ipv4.netfilter.ip_conntrack_tcp_timeout_syn_sent = 120

net.ipv4.netfilter.ip_conntrack_tcp_timeout_syn_sent2 = 120

net.ipv4.netfilter.ip_conntrack_tcp_timeout_syn_recv = 60

net.ipv4.netfilter.ip_conntrack_tcp_timeout_established = 432000

net.ipv4.netfilter.ip_conntrack_tcp_timeout_fin_wait = 120

net.ipv4.netfilter.ip_conntrack_tcp_timeout_close_wait = 60

net.ipv4.netfilter.ip_conntrack_tcp_timeout_last_ack = 30

net.ipv4.netfilter.ip_conntrack_tcp_timeout_time_wait = 120

net.ipv4.netfilter.ip_conntrack_tcp_timeout_close = 10

net.ipv4.netfilter.ip_conntrack_tcp_timeout_max_retrans = 300

net.ipv4.netfilter.ip_conntrack_udp_timeout = 30

net.ipv4.netfilter.ip_conntrack_udp_timeout_stream = 180

net.ipv4.netfilter.ip_conntrack_icmp_timeout = 30

All the timeouts are in seconds. As you can see, net.netfilter.nf_conntrack_generic_timeout is quite high: 600 seconds (10 minutes).

This means that any NAT-ted connection which is not responding can stay hanging for 10 minutes!

The value net.netfilter.nf_conntrack_tcp_timeout_established = 432000 is quite high too (5 days!)
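Since the raw second counts are hard to read at a glance, here is a minimal sketch that converts a timeout in seconds into days, hours and minutes. The function name secs_to_human is hypothetical and exists only for this illustration.

```shell
#!/bin/sh
# Hypothetical helper: print a number of seconds as "<days>d <hours>h <minutes>m"
# $1 = timeout in seconds, e.g. a value from the sysctl listing above
secs_to_human() {
    s=$1
    echo "$(( s / 86400 ))d $(( s % 86400 / 3600 ))h $(( s % 3600 / 60 ))m"
}
```

Calling secs_to_human 432000 prints 5d 0h 0m, and secs_to_human 600 prints 0d 0h 10m, which matches the figures quoted for the established and generic timeouts.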

If these values are not lowered, the server will be an easy target for anyone who would like to flood it with excessive connections. Once this happens, the server will quickly reach even the raised value of net.nf_conntrack_max and the connection dropping will recur …

With all that said, to protect the server from malicious users behind the NAT plaguing you with Denial of Service attacks:

Lower net.ipv4.netfilter.ip_conntrack_generic_timeout to 60 to 120 seconds and net.ipv4.netfilter.ip_conntrack_tcp_timeout_established to something like 54000 (15 hours):

linux:~# sysctl -w net.ipv4.netfilter.ip_conntrack_generic_timeout=120

linux:~# sysctl -w net.ipv4.netfilter.ip_conntrack_tcp_timeout_established=54000

These timeouts should work fine on the router without creating interruptions for regular NAT users. After changing the values and monitoring for at least a few days, make the changes permanent by adding them to /etc/sysctl.conf:

linux:~# echo 'net.ipv4.netfilter.ip_conntrack_generic_timeout = 120' >> /etc/sysctl.conf

linux:~# echo 'net.ipv4.netfilter.ip_conntrack_tcp_timeout_established = 54000' >> /etc/sysctl.conf


Posted in Computer Security, Linux, Networking, System Administration, Virtual Machines | 10 Comments »

Wednesday, February 22nd, 2012

Everyone who has used Linux is probably familiar with wget, or has used this handy console download tool at least a thousand times. Not so many desktop GNU / Linux users (Ubuntu and Fedora users, for example) have tried using wget for something more than downloading single files.

Actually wget is not as popular as it used to be in the earlier Linux days. I've noticed a tendency for newer Linux users to prefer curl (I don't know why).

With all that said, I'm sure there are plenty of Linux users curious about how a website mirror can be made with wget.

This article briefly suggests a few ways to do website mirroring on Linux / BSD, as wget is available on both of those free operating systems.

1. Simplest exact mirror copy of a website

The most basic use of wget's mirror capabilities is through wget's -m (--mirror) argument:

# wget -m http://website-to-mirror.com/sub-directory/

Creating a mirror like this is not very good practice, as the links in the mirrored pages will still point to the original remote URLs rather than to your local copy. Therefore, if you're not connected to the internet and try to browse links of the mirrored page, many of them will not open.

2. Mirroring with links rewritten to point to the local copy and a delay between page downloads

Making a mirror with wget can put a heavy load on the remote server, as it fetches the files as quickly as the bandwidth allows. On busy servers, rapid downloads with wget can significantly increase the server's response time. On some highly loaded servers it can even cause the server to hang completely.

Hence mirroring pages with wget without explicitly setting a delay between page downloads could be considered by the remote server as a kind of DoS (denial of service) attack. Some site administrators have even set firewall rules or configured web server modules, like Apache mod_security, to filter requests from IPs which make too frequent HTTP GET / POST requests to the web server.

To make wget wait about 10 seconds between downloads of mirrored pages, use:

# wget -mk -w 10 -np --random-wait http://website-to-mirror.com/sub-directory/

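-mk stands for -m (--mirror) and -k, the short form of --convert-links (make links point to the local copy). -w 10 sets a wait of 10 seconds between requests, -np (--no-parent) keeps wget from ascending to the parent directory, and --random-wait varies the wait randomly between 0.5 and 1.5 times the -w value (here 5 to 15 seconds) for each page download request.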

3. Mirror / retrieve website sub directory ignoring robots.txt "mirror restrictions"

Some websites have a robots.txt which restricts content download by clients like wget or curl, or even prohibits crawlers from downloading their pages completely.

robots.txt restrictions are not a technical obstacle, as wget has an option to disable robots.txt checking when downloading: -e robots=off.

For instance, to make a local mirror copy of the whole sub-directory with all links, with a delay of about 10 seconds between consecutive page requests and without reading the robots.txt allow/forbid rules at all:

# wget -mk -w 10 -np -e robots=off --random-wait http://website-to-mirror.com/sub-directory/

4. Mirror website which is prohibiting Download managers like flashget, getright, go!zilla etc.

Sometimes when you try to use wget to make a mirror copy of a site subdirectory or of the whole site, you get an error similar to:

Sorry, but the download manager you are using to view this site is not supported.

We do not support use of such download managers as flashget, go!zilla, or getright

This message is produced by the site's code (PHP / ASP / JSP etc.), which is written to check the User-Agent sent by the browser.

The default User-Agent wget sends to the remote web server is (for wget 1.11.4):

Wget/1.11.4

As this is not a common desktop browser user agent, many webmasters configure their websites to accept only the well-known user agents sent by desktop browsers.

Here are a few typical user agents which identify a desktop browser:

- Mozilla/5.0 (Windows NT 6.1; rv:6.0) Gecko/20110814 Firefox/6.0

- Mozilla/5.0 (X11; Linux i686; rv:6.0) Gecko/20100101 Firefox/6.0

- Mozilla/6.0 (Macintosh; I; Intel Mac OS X 11_7_9; de-LI; rv:1.9b4) Gecko/2012010317 Firefox/10.0a4

- Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.2a1pre) Gecko/20110324 Firefox/4.2a1pre

etc. etc.

If you're trying to mirror a website which has imposed a restriction based on "valid" user agents, wget's -U (--user-agent) option lets you fake the user agent.

If you get the Sorry, but the download manager you are using to view this site is not supported message, change wget's User-Agent with a command like:

# wget -mk -w 10 -np -e robots=off \
--random-wait \
--referer="http://www.google.com" \
--user-agent="Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6" \
--header="Accept:text/xml,application/xml,application/xhtml+xml,text/html;q=0.9,text/plain;q=0.8,image/png,*/*;q=0.5" \
--header="Accept-Language: en-us,en;q=0.5" \
--header="Accept-Encoding: gzip,deflate" \
--header="Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.7" \
--header="Keep-Alive: 300" \
http://website-to-mirror.com/sub-directory/

For the sake of some wget anonymity, to make wget permanently hide its user agent and pretend to be Mozilla Firefox running on MS Windows XP, put the user agent into a .wgetrc file in your home directory.
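The .wgetrc example from the original post did not survive, so what follows is only a sketch of what such a file might contain, not the author's file. It assumes the standard wgetrc commands user_agent, wait and random_wait; the function name write_wgetrc and the choice of settings are my own, with the user agent string copied from the command above.

```shell
#!/bin/sh
# Hypothetical helper: write a minimal wgetrc to the path given as $1.
# Note: it overwrites the target file.
write_wgetrc() {
    cat > "$1" <<'EOF'
# Identify as Firefox 2 on Windows XP instead of the default Wget/x.y
user_agent = Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6
# Be polite to the remote server: wait between requests
wait = 10
random_wait = on
EOF
}
```

To try it without touching an existing configuration, write to a scratch file first, e.g. write_wgetrc /tmp/wgetrc-test, and only then copy the lines you want into ~/.wgetrc.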

5. Make a complete mirror of a website under a domain name

To retrieve a complete working copy of a site with wget, a good way is:

# wget -rkpNl5 -w 10 --random-wait www.website-to-mirror.com

The meaning of the arguments is:

-r – Retrieve recursively

-k – Convert the links in documents to make them suitable for local viewing

-p – Download all page requisites (inline images, sounds, referenced stylesheets etc.)

-N – Turn on time-stamping

-l5 – Specify recursion maximum depth level of 5

6. Make a static mirror of a dynamic site by converting CGI, ASP, PHP etc. pages to HTML for offline browsing

Website pages often end in a .php / .asp / .cgi … extension. An example is the URL http://php.net/manual/en/tutorial.php, where the page is tutorial.php. Once mirrored with wget, the local copy will also end in .php and will therefore not be suitable for local browsing, as the local browser does not know how to interpret the .php extension.

Therefore, to copy a website with non-HTML extensions and make it browsable offline as HTML, there is the --html-extension option (-E; newer wget versions call it --adjust-extension), e.g.:

# wget -mk -w 10 -np -e robots=off \
--random-wait \
--html-extension http://www.website-to-mirror.com

A good practice when making a mirror is to set a download rate limit. Such a limit is good for both sides (the local host doing the download and the remote server). It is also useful when mirroring websites consisting of many enormous files (documentary movies, music etc.).

To set a download limit, add the --limit-rate= option. Passing --limit-rate=200K to wget limits the download speed to 200 KB/s.

Another useful way to check that wget has made an accurate mirror is wget logging. To use it, pass -o ./my_mirror.log to wget.


Posted in Linux, System Administration, Web and CMS | 2 Comments »

Tuesday, May 3rd, 2011

In short, I'll explain here what Grsecurity (http://www.grsecurity.net/) is, for all those who have not used it yet, and what kind of capabilities for enhanced kernel security it has.

Grsecurity is a combination of patches for the Linux kernel with an emphasis on improving kernel security.

The typical application of GrSecurity is on Linux systems which are administered through SSH / shell (e.g. remote hosts), though you can also configure grsecurity on a normal Linux desktop system if you want a super-secured Linux desktop ;).

GrSecurity is used heavily to protect server systems which require multiple users to have access to the shell.

On systems where multi-user access is required, it's a well-known risk that malicious users, crackers or dumb script kiddies get administrator (root) privileges with some just-released 0-day root kernel exploit.

If you're an administrator of a system (let's say a web hosting server) with multiple users having shell access, it's also common for some of the users to run exploits aimed at hanging a certain daemon service.

On other occasions you have users who try to DoS the server with some 0-day Denial of Service exploit.

In all these cases, having a kernel with grsecurity is priceless.

Installing a grsecurity-patched kernel is an easy task on Debian and Ubuntu and is explained in one of my previous articles.

This article aims to briefly explain some configuration options for a GrSecurity-tightened kernel, for when one has to compile a new kernel from source.

I will skip the details on how to compile the kernel and simply show you some screenshots of GrSecurity configuration options which work well and need to be set before the make command is issued to compile the new kernel.

After preparing the kernel source for compilation and issuing:

linux:/usr/src/kernel-source$ make menuconfig

You will have to select options like the ones you see in the pictures below:

[Image gallery: screenshots of the GrSecurity kernel configuration options]

After completing and saving your kernel config file, continue as usual with an ordinary kernel compilation, e.g.:

linux:/usr/src/kernel-source$ make

linux:/usr/src/kernel-source$ make modules

linux:/usr/src/kernel-source$ su root

linux:/usr/src/kernel-source# make modules_install

linux:/usr/src/kernel-source# make install

linux:/usr/src/kernel-source# mkinitrd -o initrd.img-2.6.xx 2.6.xx

Also make sure that GRUB is properly configured to load the newly compiled and installed kernel.

After a system reboot, if all is fine, you should be able to boot the newly compiled grsecurity-tightened kernel. But be careful and make sure you have a backup solution before you reboot; don't blame me if your new grsecurity-patched kernel fails to boot! You're on your own, boy 😉

This article is based on an article originally written in Bulgarian by static. If you read Bulgarian, you might also check out static's blog.


Posted in Linux, Linux and FreeBSD Desktop, System Administration | No Comments »

Friday, July 8th, 2011

Some time ago I wrote an article, Optimizing Linux tcp/ip networking.

In that article I examined a number of Linux kernel sysctl variables which significantly improve the way TCP/IP networking is handled by non-router Linux servers.

As time passes, I've continued to read materials on blogs and internet sites about various tips and anti-Denial of Service rules which one could apply on a newly installed hosting (Apache/MySql/Qmail/Proxy) server to improve web server response times and tighten the overall security level.

In my quest for sysctl 😉 I found a few more handy sysctl variables apart from the old ones I incorporate on every Linux server I administer.

These sysctl variables improve the overall network handling efficiency and protect against common SYN/ACK Denial of Service attacks.

Here are the extra sysctl variables I started incorporating just recently:

############ IPv4 Sysctl Settings ################

#Enable ExecShield protection (randomize virtual assigned space to protect against many exploits)

kernel.randomize_va_space = 1

# Increase the maximum number of PIDs that can be assigned to processes; needed especially on more powerful servers

kernel.pid_max = 65536

# Prevent against the common 'syn flood attack'

net.ipv4.tcp_syncookies = 1

# Number of SYN/ACK retransmissions for a passive connection attempt; two is generally a good value, though you might experiment

net.ipv4.tcp_synack_retries = 2

##################################################

#

############## IPv6 Sysctl Settings ################

# Number of Router Solicitations to send until assuming no routers are present.

net.ipv6.conf.default.router_solicitations = 0

# Accept Router Preference in RA? Again not necessery if the server is not a router

net.ipv6.conf.default.accept_ra_rtr_pref = 0

# Learn Prefix Information in Router Advertisement (Unnecessery) for non-routers

net.ipv6.conf.default.accept_ra_pinfo = 0

# do not learn the default router from Router Advertisements

net.ipv6.conf.default.accept_ra_defrtr = 0

# do not auto-assign global unicast addresses from Router Advertisements

net.ipv6.conf.default.autoconf = 0

# neighbor solicitations to send out per address (better if disabled)

net.ipv6.conf.default.dad_transmits = 0

# disable assigning more than 1 address per network interface

net.ipv6.conf.default.max_addresses = 1

#####################################################

To use these settings, paste the above sysctl variables into /etc/sysctl.conf and ask the sysctl command to read and apply the newly added settings:

server:~# sysctl -p

...

Hopefully you will not get errors while applying the sysctl settings. If you do get errors, it's possible that some of the variables are named differently or missing, depending on the Linux kernel version or the Linux distribution.
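One way to find out in advance which variables a given kernel does not know is to check for the matching file under /proc/sys, since each sysctl key maps to a path there with the dots replaced by slashes. The sketch below does that; both function names are hypothetical, and it assumes plain key = value lines whose keys contain no dotted interface names (such as eth0.100), which this simple mapping would get wrong.

```shell
#!/bin/sh
# Hypothetical helper: map a sysctl key to its path under /proc/sys
# e.g. net.ipv4.tcp_syncookies -> /proc/sys/net/ipv4/tcp_syncookies
sysctl_key_to_path() {
    echo "/proc/sys/$(echo "$1" | tr . /)"
}

# Hypothetical helper: print the keys from a sysctl.conf-style file ($1)
# that have no corresponding entry under /proc/sys on this kernel
missing_sysctl_keys() {
    # Strip comments, drop blank lines, keep only the key before '='
    sed -e 's/#.*//' -e '/^[[:space:]]*$/d' \
        -e 's/[[:space:]]*=.*//' -e 's/^[[:space:]]*//' "$1" |
    while read -r key; do
        [ -e "$(sysctl_key_to_path "$key")" ] || echo "$key"
    done
}
```

Running missing_sysctl_keys /etc/sysctl.conf before sysctl -p lists the keys that would produce errors, so they can be commented out or renamed for the kernel in use.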

For convenience, I've created a unified /etc/sysctl.conf containing the new variables I started deploying on servers together with the ones I already explained in my previous post, Optimizing Linux TCP/IP Networking.

Here is the optimized / hardened sysctl.conf file for download

I use this exact sysctl.conf these days on Linux hosting / VPS / mail servers etc., as well as on my personal notebook 😉

Here is also the complete content of the above sysctl.conf file, in case somebody wants to copy/paste it directly into their /etc/sysctl.conf

# Sysctl kernel variables to improve network performance and protect against common Denial of Service attacks

# It's possible that not all of the variables are working on all Linux distributions, test to make sure

# Some of the variables might need a slight modification to match server hardware, however in most cases it should be fine

# variables list compiled by hip0

### https://www.pc-freak.net

#### date 08.07.2011

############ IPv4 Sysctl Kernel Settings ################

net.ipv4.ip_forward = 0

# ( Turn off IP Forwarding )

net.ipv4.conf.default.rp_filter = 1

# ( Control Source route verification )

net.ipv4.conf.default.accept_redirects = 0

# ( Disable ICMP redirects )

net.ipv4.conf.all.accept_redirects = 0

# ( same as above )

net.ipv4.conf.default.accept_source_route = 0

# ( Disable IP source routing )

net.ipv4.conf.all.accept_source_route = 0

# ( same as above )

net.ipv4.tcp_fin_timeout = 40

# ( Decrease FIN timeout ) - Useful on busy/high load server

net.ipv4.tcp_keepalive_time = 4000

# ( keepalive tcp timeout )

net.core.rmem_default = 786426

# Receive memory stack size ( a good idea to increase it if your server receives big files )

##net.ipv4.tcp_rmem = "4096 87380 4194304"

net.core.wmem_default = 8388608

#( Reserved Memory per connection )

net.core.wmem_max = 8388608

net.core.optmem_max = 40960

# ( maximum amount of option memory buffers )

# tcp reordering, increase max buckets, increase the amount of backlog

net.ipv4.tcp_max_tw_buckets = 360000

net.ipv4.tcp_reordering = 5

##net.core.hot_list_length = 256

net.core.netdev_max_backlog = 1024

#Enable ExecShield protection (randomize virtual assigned space to protect against many exploits)

kernel.randomize_va_space = 1

# Increase the maximum number of PIDs that can be assigned to processes; needed especially on more powerful servers

kernel.pid_max = 65536

# Prevent against the common 'syn flood attack'

net.ipv4.tcp_syncookies = 1

# Number of SYN/ACK retransmissions for a passive connection attempt; two is generally a good value, though you might experiment

net.ipv4.tcp_synack_retries = 2

###################################################

############## IPv6 Sysctl Settings ################

# Number of Router Solicitations to send until assuming no routers are present.

net.ipv6.conf.default.router_solicitations = 0

# Accept Router Preference in RA? Again not necessery if the server is not a router

net.ipv6.conf.default.accept_ra_rtr_pref = 0

# Learn Prefix Information in Router Advertisement (Unnecessary) for non-routers

net.ipv6.conf.default.accept_ra_pinfo = 0

# do not learn the default router from Router Advertisements

net.ipv6.conf.default.accept_ra_defrtr = 0

# do not auto-assign global unicast addresses from Router Advertisements

net.ipv6.conf.default.autoconf = 0

# neighbor solicitations to send out per address (better if disabled)

net.ipv6.conf.default.dad_transmits = 0

# disable assigning more than 1 address per network interface

net.ipv6.conf.default.max_addresses = 1

#####################################################

# Reboot if kernel panic

kernel.panic = 20

These sysctl settings tweak the Linux kernel's default network settings. In some cases you will notice the improvement in website responsiveness immediately; these kernel-level goodies can make the server perform better, and the system load might even decrease 😉

These kernel-level optimizations are not only handy for servers; on a Linux desktop they should also have a positive influence on the way the network behaves, and could significantly improve the response times of opening pages in Firefox / Opera / Epiphany, torrent downloads etc.

Hope these kernel tweaks are helpful to someone.

Cheers 😉


Posted in Computer Security, Linux, Linux and FreeBSD Desktop, System Administration | 4 Comments »

Wednesday, September 7th, 2011

One good module that helps in mitigating very basic Denial of Service attacks against the Apache 1.3.x, 2.0.x and 2.2.x web server is mod_evasive.

I've noticed, however, that many Apache administrators forget to install it on new Apache installations, and some of them haven't even heard of it.

Therefore I wrote this small article to raise awareness of the anti-DoS module and hopefully help some of my readers strengthen their server security.

Here is a description of what exactly the mod-evasive module does:

debian:~# apt-cache show libapache2-mod-evasive | grep -i description -A 7

Description: evasive module to minimize HTTP DoS or brute force attacks

mod_evasive is an evasive maneuvers module for Apache to provide some

protection in the event of an HTTP DoS or DDoS attack or brute force attack.

.

It is also designed to be a detection tool, and can be easily configured to

talk to ipchains, firewalls, routers, and etcetera.

.

This module only works on Apache 2.x servers

How does the mod-evasive anti-DoS module work?

Detection is performed by creating an internal dynamic hash table of IP addresses and URIs, and denying any single IP address which matches one of these criteria:

- Requesting the same page more than N times per second

- Making more than N concurrent requests on the same child per second

- Making any requests to Apache while the IP is temporarily blacklisted (on a blocking list; the IP is removed from the blacklist after a period of time)

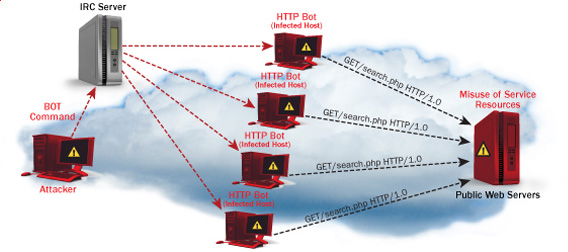

This anti-DoS / DDoS protection decreases the chance that Apache gets DoSed by an amateur DoS attack. However, it still leaves the door open to attackers who have a large botnet of zombie hosts (let's say 10000) which simultaneously request a page from the Apache server. In a scenario with an infected botnet running a DoS tool, the result in most cases will be a quick exhaustion of the available system resources (bandwidth, server memory and processor time).

Thus mod-evasive only provides DoS and DDoS protection on a basic level, where someone tries to DoS a web server while having access to only a few hosts.

Nevertheless, in many cases mod-evasive is a useful measure against DoS, and it does a great job if combined with the Apache mod-security module discussed in one of my previous blog posts: Tightening PHP Security on Debian with Apache 2.2 with ModSecurity2

1. Install mod-evasive

Installing mod-evasive on Debian Lenny, Squeeze and even Wheezy is done in an identical way, straight from apt-get:

debian:~# apt-get install libapache2-mod-evasive

...

2. Enable mod-evasive in Apache

debian:~# ln -sf /etc/apache2/mods-available/mod-evasive.load /etc/apache2/mods-enabled/mod-evasive.load

3. Configure the way mod-evasive deals with potential DoS attacks

Open /etc/apache2/apache2.conf, go down to the end of the file and paste in the following mod-evasive configuration directives:

<IfModule mod_evasive20.c>

DOSHashTableSize 3097

DOSPageCount 30

DOSSiteCount 40

DOSPageInterval 2

DOSSiteInterval 1

DOSBlockingPeriod 120

#DOSEmailNotify hipo@mymailserver.com

</IfModule>

If the criteria configured above are matched, mod-evasive instructs Apache to return a 403 (Forbidden by default) error page. This conserves bandwidth and system resources during a DoS attempt, especially if the attack sends multiple requests to, let’s say, a large downloadable file or a PHP, Perl or Python script which does a lot of computation and thus consumes a large portion of server CPU time.

The meaning of the above mod-evasive config vars is as follows:

DOSHashTableSize 3097 – Size of the hash table. Increasing DOSHashTableSize will increase the performance of mod-evasive but will consume more server memory; on a busy webserver this value should however be increased.

DOSPageCount 30 – Add an IP to the evasive temporary blacklist if it requests the same page 30 times within the DOSPageInterval.

DOSSiteCount 40 – Add an IP to the blacklist if it makes 40 requests in total, for any objects on the site, within the DOSSiteInterval.

DOSBlockingPeriod 120 – The time in seconds for which an IP stays blacklisted (i.e. gets the 403 Forbidden page). With this setting mod-evasive blocks every intruder which matches DOSPageCount 30 or DOSSiteCount 40 for 2 minutes.

DOSPageInterval 2 – Interval of 2 seconds over which requests are counted towards DOSPageCount.

DOSSiteInterval 1 – Interval of 1 second over which requests are counted towards DOSSiteCount; if 40 is reached, the matching IP is blacklisted for the configured period of time.
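One way to check that the thresholds above actually trigger is to fire a short burst of requests at a test page and tally the returned status codes; once the module kicks in, 403 responses should start to appear. Below is a minimal sketch, assuming curl is installed. The function names and the URL in the usage line are made up for illustration, and it should be pointed only at a server you administer.

```shell
# Tally HTTP status codes read from stdin, one code per line.
# Prints "<code> <count>" lines sorted by code.
tally_status_codes() {
    awk 'NF { count[$1]++ } END { for (code in count) print code, count[code] }' | sort
}

# Send N sequential GET requests to URL and print only the status codes.
# burst_requests is a hypothetical helper name, not part of mod_evasive.
burst_requests() {
    url=$1
    n=$2
    i=0
    while [ "$i" -lt "$n" ]; do
        curl -s -o /dev/null -w '%{http_code}\n' "$url"
        i=$((i + 1))
    done
}
```

It could be used as burst_requests http://localhost/index.html 100 | tally_status_codes. With DOSPageCount 30 and DOSPageInterval 2 one would expect a mix of 200 and 403 lines, provided the requests arrive fast enough to cross the threshold.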

mod-evasive also supports IP whitelisting with its option DOSWhitelist, handy if, for example, you have to allow access to a webpage from an office environment consisting of a hundred computers behind a NAT.

Another handy configuration option is DOSEmailNotify, which makes the module send a notification email whenever an IP address gets blacklisted.

The DOSSystemCommand option, used together with iptables, can be configured to filter out any IP addresses which are found to match the configured mod-evasive rules.
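As a rough illustration of the DOSSystemCommand and iptables combination, the helper below validates the address it is given before building an iptables rule. It is only a sketch under assumptions: the function names are hypothetical, the rule is printed rather than executed (a dry run), and since Apache runs unprivileged, some privilege mechanism such as a sudo rule would be needed to really apply it.

```shell
# Return success only if the argument looks like a dotted-quad IPv4 address.
is_ipv4() {
    printf '%s\n' "$1" | awk -F. '
        NF != 4 { exit 1 }
        { for (i = 1; i <= 4; i++) if ($i !~ /^[0-9]+$/ || $i > 255) exit 1 }'
}

# Print (dry run) the iptables rule that would drop traffic from the IP.
# Remove the "echo" to apply the rule for real; that needs root privileges.
block_ip() {
    if is_ipv4 "$1"; then
        echo /sbin/iptables -I INPUT -s "$1" -j DROP
    else
        echo "refusing to block invalid address: $1" >&2
        return 1
    fi
}
```

Saved as a script that ends with a call like block_ip "$1", it could be referenced from the configuration as DOSSystemCommand followed by the script path and %s, which mod-evasive replaces with the blacklisted IP. The validation step is there so that nothing but a plain IP address ever reaches the iptables command line.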

The module also supports custom logging. If you want to keep track of the IPs which are found to be trying a DoS attack against the server, place DOSLogDir "/var/log/apache2/evasive" in the configuration shown above and create the /var/log/apache2/evasive directory with:

debian:~# mkdir /var/log/apache2/evasive

I decided not to log mod-evasive DoS IP matches as this would just add some extra load on the server; however, when debugging mistakenly blacklisted IPs, logging is a must.

4. Restart Apache to load up mod-evasive

debian:~# /etc/init.d/apache2 restart

...

Finally, a very good read which sheds more light on how exactly mod-evasive works, along with some extra module configuration options, is the documentation bundled with the deb package. To read it, issue:

debian:~# zless /usr/share/doc/libapache2-mod-evasive/README.gz

Tags: ana amateur, anti, apache 2, apache server, apache2, awareness, bandwidth server, botnet, brute force attack, concurrent requests, configured, criterias, DDoS, debian linux, Denial, denial of service, denial of service attacks, description, dos attack, dos tool, DOSHashTableSize, evasive maneuvers, event, exhaustion, existence, file, grep, hash, hash table, HTTP, IfModule, Installing, libapache, mod, number, option, page, period of time, protection, Secure Apache, server security, Service, show, small article, system resources, time, tool, uris, webpage

Posted in Computer Security, Linux, System Administration, Web and CMS | 1 Comment »

Friday, July 22nd, 2011

There are a few commands I usually use to check whether my server is possibly under a Denial of Service or a Distributed Denial of Service attack.

Sys admins who have not yet experienced the terrible times of being under a DoS attack are happy people for sure …

1. How to Detect a TCP/IP Denial of Service Attack

These are the commands I use to find out if a loaded Linux server is under a heavy DoS attack. One of the most essential ones is, of course, netstat.

To check if a server is under a DoS attack with netstat, it’s common to use:

linux:~# netstat -ntu | awk '{print $5}' | cut -d: -f1 | sort | uniq -c | sort -n|wc -l

If the above command returns a number like 2000 or 3000 (it counts the distinct remote IPs connected), then it’s very likely the server is under a DoS attack.

To list all the IPs currently connected to the server and get very brief statistics on the number of connections each IP holds, I use the cmd:

linux:~# netstat -ntu | awk '{print $5}' | cut -d: -f1 | sort | uniq -c | sort -n

221 80.143.207.107

233 145.53.103.70

540 82.176.164.36

As you can see from the above command output, the IP 80.143.207.107 is either connected 221 times to the server or is in a state of connecting to or disconnecting from the node.
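The per-IP count above can be wrapped into a small filter that skips the netstat header lines and reports only the addresses above a chosen threshold. This is a sketch, not a drop-in tool: the function name and the default threshold of 100 are arbitrary assumptions, and it expects net-tools style netstat -ntu output on stdin.

```shell
# Read `netstat -ntu` output on stdin and print "<count> <ip>" for every
# remote address with at least MIN connections, busiest first.
busy_remote_ips() {
    min=${1:-100}
    awk -v min="$min" '
        $1 ~ /^(tcp|udp)/ {            # skip the two netstat header lines
            ip = $5
            sub(/:[0-9*]+$/, "", ip)   # strip the trailing :port
            sub(/^::ffff:/, "", ip)    # unwrap IPv4-mapped IPv6 addresses
            count[ip]++
        }
        END { for (ip in count) if (count[ip] >= min) print count[ip], ip }
    ' | sort -rn
}
```

Typical use would be netstat -ntu | busy_remote_ips 200. Stripping only the trailing port, rather than cutting at the first colon, keeps IPv6 style addresses intact.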

Another possible way to check if a Linux or BSD server is under a Distributed DoS is with the list open files command, lsof.

Here is how lsof can be used to list the approximate number of ESTABLISHED connections to port 80.

linux:~# lsof -i TCP:80

litespeed 241931 nobody 17u IPv4 18372655 TCP server.www.pc-freak.net:http (LISTEN)

litespeed 241931 nobody 25u IPv4 18372659 TCP 85.17.159.89:http (LISTEN)

litespeed 241931 nobody 30u IPv4 29149647 TCP server.www.pc-freak.net:http->83.101.6.41:54565 (ESTABLISHED)

litespeed 241931 nobody 33u IPv4 18372647 TCP 85.17.159.93:http (LISTEN)

litespeed 241931 nobody 34u IPv4 29137514 TCP server.www.pc-freak.net:http->83.101.6.41:50885 (ESTABLISHED)

litespeed 241931 nobody 35u IPv4 29137831 TCP server.www.pc-freak.net:http->83.101.6.41:52312 (ESTABLISHED)

litespeed 241931 nobody 37w IPv4 29132085 TCP server.www.pc-freak.net:http->83.101.6.41:50000 (ESTABLISHED)

Another way to get an approximate number of established connections to let’s say Apache or LiteSpeed webserver with lsof can be achieved like so:

linux:~# lsof -i TCP:80 |wc -l

2100

I find it handy to keep track of the above lsof command output every few secs with GNU watch, like so:

linux:~# watch "lsof -i TCP:80"

2. How to Detect if a Linux server is under an ICMP SMURF attack

ICMP attacks are still heavily used, even though they are already old fashioned and there are plenty of other Denial of Service attack types. One of the quickest ways to find out if a server is under an ICMP attack is through the command:

server:~# while :; do netstat -s| grep -i icmp | egrep 'received|sent' ; sleep 1; done

120026 ICMP messages received

1769507 ICMP messages sent

120026 ICMP messages received

1769507 ICMP messages sent

As you can see, the above one-liner checks in a loop for sent and received ICMP packets every second. If there is a big difference between the values returned on consecutive runs, then the server is likely under an ICMP attack and needs to be hardened.
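Instead of comparing the counters by eye, the difference between consecutive readings can be computed directly. The sketch below assumes a net-tools netstat whose -s output contains an "ICMP messages received" line like the ones shown above; both function names are made up for this example.

```shell
# Extract the "ICMP messages received" counter from `netstat -s` output on stdin.
icmp_received() {
    awk '/ICMP messages received/ { print $1; exit }'
}

# Print how many ICMP messages arrived during each INTERVAL-second window.
# Runs until interrupted with Ctrl-C.
watch_icmp_rate() {
    interval=${1:-1}
    prev=$(netstat -s | icmp_received)
    while sleep "$interval"; do
        cur=$(netstat -s | icmp_received)
        echo "$((cur - prev)) ICMP messages received in the last ${interval}s"
        prev=$cur
    done
}
```

Running watch_icmp_rate 1 prints one line per second; a rate that stays in the thousands is a much clearer signal than two large absolute counters.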

3. How to detect a SYN flood with netstat

linux:~# netstat -nap | grep SYN | wc -l

1032

1032 connections in a SYN state is quite a high number. Unless the server is serving, let’s say, 5000 user requests per second, the above output reveals that the server is very likely under attack. If, however, I get results like 100 or 200 SYNs, then most likely there is no SYN flood targeting the machine 😉

Two other netstat commands which help determine if a server is under a Denial of Service attack are:

server:~# netstat -tuna |wc -l

10012

and

server:~# netstat -tun |wc -l

9606

Of course there are also some other ways to count the connections that sent a SYN to the webserver, for example:

server:~# netstat -n | grep :80 | grep SYN |wc -l
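The SYN checks above give only a total. To see which remote hosts the half-open connections come from, the SYN_RECV entries can be grouped per source address. A minimal sketch follows, assuming Linux net-tools netstat output where the sixth column is the TCP state; the function name is hypothetical.

```shell
# Read `netstat -nt` output on stdin and print "<count> <ip>" for remote
# addresses with connections in SYN_RECV state, busiest first.
syn_recv_by_ip() {
    awk '
        $1 ~ /^tcp/ && $6 == "SYN_RECV" {
            ip = $5
            sub(/:[0-9]+$/, "", ip)    # strip the trailing :port
            sub(/^::ffff:/, "", ip)    # unwrap IPv4-mapped IPv6 addresses
            count[ip]++
        }
        END { for (ip in count) print count[ip], ip }
    ' | sort -rn
}
```

Usage would be netstat -nt | syn_recv_by_ip. Keep in mind that in a spoofed SYN flood the source addresses are forged, so a long list of IPs with one or two entries each can itself be the symptom.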

In many cases, of course, top or htop can be useful to find out if many processes of a certain type are hanging around.

4. Checking if UDP Denial of Service is targeting the server

server:~# netstat -nap | grep 'udp' | awk '{print $5}' | cut -d: -f1 | sort |uniq -c |sort -n

The above command will list information concerning possible UDP DoS.

The command can easily be adapted to check for both possible TCP and UDP denial of service, like so:

server:~# netstat -nap | grep -E 'tcp|udp' | awk '{print $5}' | cut -d: -f1 | sort |uniq -c |sort -n

104 109.161.198.86

115 112.197.147.216

129 212.10.160.148

227 201.13.27.137

3148 91.121.85.220

Once you have found an IP that has too many connections to the server and is almost certainly a DoS host, you will probably want to filter this IP.

You can use the /sbin/route command to filter it out. Using route will probably be a better choice than iptables, as iptables would load up the CPU more than simply cutting the route to the host.

Here is how I make a host unable to exchange packets with my server:

route add 110.92.0.55 reject

The above command null routes IP 110.92.0.55, cutting its access to my server.

Later on, to look up a null routed IP on my host, I use:

route -n |grep -i 110.92.0.55
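When several offenders show up at once, the route commands can be generated from the per-IP counts instead of being typed one by one. The helper below is a sketch with a made-up name and an arbitrary default threshold of 1000; it only prints the commands, so the list can be reviewed before anything is applied.

```shell
# Read "<count> <ip>" lines (the output format of the uniq -c pipelines above)
# on stdin and print a null-route command for every IP at or above MIN.
# Nothing is executed: review the output, then run it as root if it looks right.
print_null_routes() {
    min=${1:-1000}
    awk -v min="$min" '$1 + 0 >= min && $2 != "" { print "route add " $2 " reject" }'
}
```

For example, piping the sorted netstat counts into print_null_routes 1000 would print a route add line only for addresses with 1000 or more connections. Reviewing the list first matters, because a busy NAT gateway or proxy can legitimately hold many connections.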

Well, hopefully this is enough to give a brief overview of how one can dig into a server and find out if it is under a Distributed Denial of Service attack. I hope it’s helpful to somebody out there.

Cheers 😉

Tags: apache webserver, approximate number, awk print, BSD, checking, cmd, command, course, Denial, denial of service, denial of service attack, Detect, difference, dos attack, ESTABLISHED, freak, How to, HTTP, ICMP, ips, Linux, linux server, linux webserver, LISTEN, litespeed, netstat, nobody, node, ntu, number, server pc, Service, sleep, statistics, SYN, SYNs, sys admins, tcp, terrible times, Watch

Posted in Linux, System Administration | 5 Comments »

Monday, May 20th, 2013

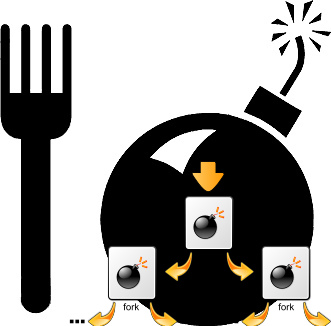

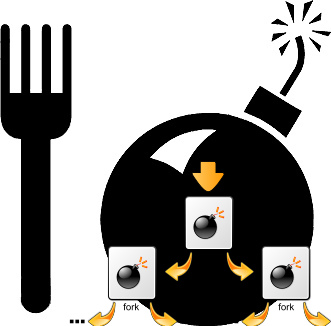

To prevent various DoS attacks which take advantage of unlimited forks, and just to tighten up security, it is a good idea to limit the maximum number of processes users can spawn on a Linux system. On the command line such restrictions are set using the ulimit command.

To get a list of the currently logged in user's ulimit settings:

hipo@noah:~$ ulimit -a

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 16382

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) unlimited

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

As you see from the above output, there are plenty of things that can be limited with ulimit.

Through it the user can configure the maximum number of open files (1024 by default), e.g.:

open files (-n) 1024

You can also set the max size of a file (in blocks) the user can create – through:

file size (blocks, -f) unlimited

It can also limit user processes so they are unable to use more than a maximum amount of CPU time, via:

cpu time (seconds, -t) unlimited

ulimit is also used to set whether Linux will produce the annoying and often large core files. Those who remember the early Linux distributions certainly remember GNOME and GNOME apps crashing regularly and producing those large useless files. Most modern Linux distributions have core file production disabled, i.e.:

core file size (blocks, -c) 0

For Linux distributions where for some reason core dumps are still enabled, you can disable them by running:

noah:~# ulimit -Sc 0

Depending on the Linux distribution, the default max user processes ulimit is either unlimited, as on Debian and other deb based distributions, or 32768, as on RPM based distributions (Fedora, RHEL, CentOS, Redhat).

To limit the currently logged in user to a maximum of 50 spawned processes:

hipo@noah:~$ ulimit -Su 50

hipo@noah:~$ ulimit -a

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 16382

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 50

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

-Su – sets the soft limit on the max number of processes to 50; to set the hard limit there is the -Hu parameter.

Permanent ulimit user restrictions, let's say the max number of processes a user can run, are set via /etc/security/limits.conf

In limits.conf there are some commented examples, e.g., here is a paste from Debian:

#* soft core 0

#root hard core 100000

#* hard rss 10000

#@student hard nproc 20

#@faculty soft nproc 20

#@faculty hard nproc 50

#ftp hard nproc 0

#ftp - chroot /ftp

#@student - maxlogins 4

The @student example above, i.e.:

@student hard nproc 20

– sets a maximum of 20 processes for the group student (the @ sign signifies that the limitation is valid for users belonging to the group).

As you can see there are soft and hard limits that can be assigned to a user / group. The soft limit is the value actually enforced on the processes spawned by a non-root user; a non-privileged user can modify it, but only up to the hard limit.

The hard limit is the ceiling for the soft limit: a non-privileged user can lower it, but only the privileged user root can raise it.

To give my user hipo a limit of at most 100 processes running in parallel, I had to add to /etc/security/limits.conf (as root):

noah:~# echo 'hipo hard nproc 100' >> /etc/security/limits.conf
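Since limits.conf ships mostly commented examples, it helps to list only the nproc entries that are really active after an edit like the one above. The filter below is a small sketch; the function name is an assumption, and it reads a limits.conf style file on stdin.

```shell
# Read a limits.conf style file on stdin and print the active (uncommented)
# nproc entries as "<domain> <type> <value>".
active_nproc_limits() {
    awk '
        /^[ \t]*#/ { next }                            # skip commented lines
        NF >= 4 && $3 == "nproc" { print $1, $2, $4 }  # domain type item value
    '
}
```

It would be run as active_nproc_limits < /etc/security/limits.conf. The limits in this file are applied at login through pam_limits, so a changed value shows up only in sessions started after the edit.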

The ulimit shell command is a wrapper around the setrlimit system call. Through setrlimit the values assigned with ulimit are passed to the Linux kernel, which then enforces them.
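On Linux the limits the kernel currently holds for a process can be read back from /proc/PID/limits, which is a handy way to confirm that a ulimit setting really took effect. The helper below is a sketch with a hypothetical name; it assumes the usual column layout of that file (limit name, soft limit, hard limit, units).

```shell
# Read a /proc/<pid>/limits file on stdin and print the soft and hard
# "Max processes" values the kernel holds for that process.
max_processes() {
    awk '/^Max processes/ { print "soft=" $3, "hard=" $4 }'
}
```

For the current shell it could be called as max_processes < /proc/$$/limits, and for any other process by substituting its PID.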

One note to make here: if the limited user has to use the Linux system in a graphical environment, let's say GNOME, you should raise the max number of spawned processes to some higher number, for example at least 200 / 300 procs.

After limiting the user's max processes, you can test whether the system is secure against a fork bomb DoS by issuing in a shell:

hipo@noah:~$ ulimit -u 50

hipo@noah:~$ :(){ :|:& };:

[1] 3607

hipo@noah:~$ bash: fork: Resource temporarily unavailable

bash: fork: Resource temporarily unavailable

Due to the limitation, the attempt to fork more than 50 processes is blocked and the system is safe from the infamous denial of service fork bomb attack.

Tags: bashrc, denial of service, Linux, linux user, maximum number, memory size, message queues, priority, signals, time priority

Posted in Computer Security, Linux, System Administration | No Comments »

Thursday, June 30th, 2011

A serious hole found in all Apache webserver versions 1.3.x, 2.0.x and 2.2.x has recently become publicly known. The info is to be found in the security advisory CVE-2011-3192 – https://issues.apache.org/bugzilla/show_bug.cgi?id=51714

An Apache remote denial of service exploit has already been circulating publicly for about a week and will probably be used even more heavily in the 3 months to come. The exploit can be obtained from exploit-db.com, under the name Apache httpd Remote Denial of Service (memory exhaustion).

The DoS script is known in the wild under the name killapache.pl

The killapache.pl PoC depends on perl ForkManager, thus in order to run it properly on FreeBSD it's necessary to install the p5-Parallel-ForkManager BSD port:

freebsd# cd /usr/ports/devel/p5-Parallel-ForkManager

freebsd# make install clean

...

Here is an example of the exploit running against an Apache webserver host.

freebsd# perl httpd_dos.pl www.targethost.com 50

host seems vuln

ATTACKING www.targethost.com [using 50 forks]

:pPpPpppPpPPppPpppPp

ATTACKING www.targethost.com [using 50 forks]

:pPpPpppPpPPppPpppPp

...

In about 30 seconds to 1 minute the DoS attack, with only 50 simultaneous connections, is capable of overloading any vulnerable Apache server.

It causes the webserver to consume all the machine memory and swap, and consequently makes the server crash in most cases.

While the Denial of Service attack is in action, access to the websites hosted on the webserver becomes either hell slow or completely absent.

The DoS attack is quite a shock, as it is based on an Apache range problem which dates back to the year 2007.

Today, Debian has issued new versions of the Apache deb package for Debian 5 Lenny and Debian 6; the new packages are said to have fixed the issue.

I assume that Ubuntu and most of the other Debian based distributions will have Apache versions patched against the range header DoS either today or in the coming few days.

Therefore fixing the issue on Debian based servers can easily be done with the usual apt-get update && apt-get upgrade

On other Linux systems as well as FreeBSD there are workarounds pointed out, which can be implemented to temporarily close the Apache DoS hole.

1. Limiting large number of range requests

The first suggested solution is to limit the number of ranges in the Range header of requests Apache will serve. To implement this workaround it's necessary to put at the end of the httpd.conf config:

# Drop the Range header when more than 5 ranges.

# CVE-2011-3192

SetEnvIf Range (?:,.*?){5,5} bad-range=1

RequestHeader unset Range env=bad-range

# We always drop Request-Range; as this is a legacy

# dating back to MSIE3 and Netscape 2 and 3.

RequestHeader unset Request-Range

# optional logging.

CustomLog logs/range-CVE-2011-3192.log common env=bad-range

CustomLog logs/range-CVE-2011-3192.log common env=bad-req-range

2. Reject Range requests for more than 5 ranges in Range: header

This DoS solution is not recommended (in my view), as it uses mod_rewrite to implement the fix and might additionally open another window for DoS attacks, as mod_rewrite is generally CPU consuming.

Once again, to implement this workaround paste in the Apache config file:

# Reject request when more than 5 ranges in the Range: header.

# CVE-2011-3192

#

RewriteEngine on

RewriteCond %{HTTP:range} !(bytes=[^,]+(,[^,]+){0,4}$|^$)

# RewriteCond %{HTTP:request-range} !(bytes=[^,]+(?:,[^,]+){0,4}$|^$)

RewriteRule .* - [F]

# We always drop Request-Range; as this is a legacy

# dating back to MSIE3 and Netscape 2 and 3.

RequestHeader unset Request-Range

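3. Limit the size of request header fields to a few hundred bytes

To do so put in httpd.conf the line below. Note that LimitRequestFieldSize applies to every request header field, not only Range, so a value this low can break requests carrying other long headers, such as sizeable cookies: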

LimitRequestFieldSize 200

4. Dis-allow completely Range headers: via mod_headers Apache module

In httpd.conf put:

RequestHeader unset Range

RequestHeader unset Request-Range

This workaround could create problems on some websites which are made in a way that relies on the Range header.
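A harmless way to see whether one of the header based workarounds is active is to send a single request with six small ranges and look at the status code. The sketch below assumes curl is available; the function names and the URL in the usage line are made up, and the six non-overlapping one-byte ranges are just an illustrative choice, not the pattern the exploit uses.

```shell
# Build a Range header value with N one-byte ranges: bytes=0-0,2-2,4-4,...
build_range_header() {
    n=$1
    i=0
    ranges=""
    while [ "$i" -lt "$n" ]; do
        off=$((i * 2))
        ranges="${ranges}${ranges:+,}${off}-${off}"
        i=$((i + 1))
    done
    printf 'bytes=%s\n' "$ranges"
}

# Request URL once with an N-range header and print only the HTTP status code.
range_status() {
    curl -s -o /dev/null -w '%{http_code}\n' -H "Range: $(build_range_header "$2")" "$1"
}
```

Called as range_status http://localhost/index.html 6 against a static file, one would expect 206 from a server that still honours the ranges, 200 when the Range header is being unset, and 403 when the mod_rewrite rule rejects the request.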

5. Deploy a tiny Apache module to count the number of Range requests and drop connections in case of a high number of Range: requests

This solution is in my view the best one. I've tested it and I can confirm that on FreeBSD it works like a charm.

To secure a FreeBSD host's Apache against the Range request DoS using mod_rangecnt, one can literally follow the methodology explained in the mod_rangecnt.c header:

freebsd# wget http://people.apache.org/~dirkx/mod_rangecnt.c

..

# compile the mod_rangecnt module

freebsd# /usr/local/sbin/apxs -c mod_rangecnt.c

...

# install mod_rangecnt module to Apache

freebsd# /usr/local/sbin/apxs -i -a mod_rangecnt.la

...

Finally, to load the newly installed mod_rangecnt, an Apache restart is required:

freebsd# /usr/local/etc/rc.d/apache2 restart

...

I've tested the module on an i386 FreeBSD install, so I can't confirm these steps work fine on a 64 bit FreeBSD install. I would be glad to hear from someone whether mod_rangecnt compiles and installs fine on the 64 bit BSD arch as well.

Deploying mod_rangecnt.c to protect against the Apache Range: header DoS on 64 bit x86_64 CentOS 5.6 Final is also done without any pitfalls.

[root@centos ~]# uname -a;

Linux centos 2.6.18-194.11.3.el5 #1 SMP Mon Aug 30 16:19:16 EDT 2010 x86_64 x86_64 x86_64 GNU/Linux

[root@centos ~]# /usr/sbin/apxs -c mod_rangecnt.c

...

/usr/lib64/apr-1/build/libtool --silent --mode=link gcc -o mod_rangecnt.la -rpath /usr/lib64/httpd/modules -module -avoid-version mod_rangecnt.lo

[root@centos ~]# /usr/sbin/apxs -i -a mod_rangecnt.la

...

Libraries have been installed in: /usr/lib64/httpd/modules

...

[root@centos ~]# /etc/init.d/httpd configtest

Syntax OK

[root@centos ~]# /etc/init.d/httpd restart

Stopping httpd: [ OK ]

Starting httpd: [ OK ]

After applying the mod_rangecnt patch, if all is fine, the output of the memory exhaustion perl DoS script should be like so:

freebsd# perl httpd_dos.pl www.patched-apache-host.com 50

Host does not seem vulnerable

All of the workarounds pointed out above are only a temporary solution to this grave Apache DoS byterange vulnerability. A few days after the original vulnerability emerged and some of the above workarounds were published, there was information that there are still ways the vulnerability can be exploited.

Hopefully in the coming few weeks the Apache dev team will be ready with a rock solid fix for this severe problem.

In the span of 2 years this is the second serious Apache Denial of Service vulnerability; a year and a half ago the so called Slowloris Denial of Service attack was capable of DoSing most of the Apache installations on the Net.

Slowloris never received the publicity of the Range header DoS, as it was not as critical as the Range issue. Nevertheless this is a good indicator that the code quality of Apache is slowly decreasing and might need a serious security evaluation.

Tags: apache httpd, apache server, apache webserver, ATTACKING, bugzilla, CentOS, com, config, copy, CustomLog, deb package, denial of service, denial of service attack, dos attack, dos script, download, exploit, freebsd, host, HTTP, info, machine memory, memory exhaustion, minute time, mirror copy, mod, necessery, Netscape, number, perl httpd, poc, pPpPpppPpPPppPpppPp, REJECT, Remote, RewriteCond, script, simultaneous connections, work

Posted in Computer Security, Linux, System Administration | No Comments »

Saturday, May 28th, 2011

One of the mail server's clients is running into issues with secured SSL IMAP connections (he has to use multiple email accounts on the same computer).

I was informed that part of the email addresses were working correctly, however the newly created ones were failing to authenticate, even though all the Outlook Express email configuration was correct and the username and password typed in were real, existing credentials on the vpopmail server.

Initially I thought something was wrong with his newly configured emails, but it seems all the settings were perfectly correct!

I spent a lot of time wondering what might be wrong, as I was dumb enough not to check my imap log files.

After checking /var/log/mail.log, which is the default log file I’ve configured for vpopmail and some of my qmail server services, I found the following error repeating again and again:

imapd-ssl: Maximum connection limit reached for ::ffff:xxx.xxx.xxx.xxx

where xxx.xxx.xxx.xxx was the IP address of the email user's computer.
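To see quickly which clients keep hitting this limit, the error lines can be counted per IP address. This is a minimal sketch with a hypothetical function name; it assumes the IP is the last field of the log line, as in the message above.

```shell
# Read a mail log on stdin and print "<hits> <ip>" for every address that
# triggered "Maximum connection limit reached", most frequent first.
limit_hits_by_ip() {
    awk '
        /Maximum connection limit reached for/ {
            ip = $NF
            sub(/^::ffff:/, "", ip)    # unwrap IPv4-mapped IPv6 addresses
            hits[ip]++
        }
        END { for (ip in hits) print hits[ip], ip }
    ' | sort -rn
}
```

It would be used as limit_hits_by_ip < /var/log/mail.log. A single office IP with many hits points at a limit that is too low, while many unrelated IPs would rather suggest an actual attack.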

This issue was caused by one of my configuration settings in the imapd-ssl and imap config file:

/usr/lib/courier-imap/etc/imapd

In /usr/lib/courier-imap/etc/imapd there is a config segment called

Maximum number of connections to accept from the same IP address

Right below this commented text is the variable:

MAXPERIP=4

As you can see, I had used a very low value for the maximum number of connections from one and the same IP address.

I suppose my logic in setting such a low value was my desire to protect the IMAP server from Denial of Service attacks. However 4 is really too low and causes problems, thus to solve the mail connection issues for the user I raised the MAXPERIP value to 50:

MAXPERIP=50

Now, to force the imapd and imapd-ssl services to reload their config, I did a restart of courier-imap, like so:

debian:~# /etc/init.d/courier-imap restart

That’s all, now the error is gone and the client can open up to 50 simultaneous IMAP connections, enough for all of his mailbox accounts on his PC 🙂

Tags: address right, client, config, configuration settings, configure, connection, courier imap, credentials, default log, Denial, denial of service, denial of service attacks, email accounts, email addresses, errorwhere, Express, ffff, file, imap connections, imap server, init, lib, limit, mail connection, mail log, mail server, Maximum, maximum connection, maximum number, outlook, outlook express, password, quot, segment, server clients, server services, Service, something, ssl services, text, username, value

Posted in Linux, Qmail, System Administration | 2 Comments »
