I have updated Ubuntu version 9.04 (Jaunty) to 9.10 and followed the my previous post update ubuntu from 9.04 to Latest Ubuntu

I expected that a step by step upgrade from a release to release will work like a charm and though it does on many notebooks it doesn't on Toshiba Satellite L40

The update itself went fine, whether I used the update-manager -d and followed the above pointed tutorial, however after a system restart the PC failed to boot the X server properly, a completely blank screen with blinking cursor appeared and that was all.

I restarted the system into the 2.6.35-28-generic kernel rescue-mode recovery kernel in order to be able to enter into physical console.

Logically the first thing I did is to check /var/log/messages and /var/log/Xorg.0.log but I couldn't find nothing unusual or wrong there.

I suspected something might be wrong with /etc/X11/xorg.conf so I deleted it:

ubuntu:~# rm -f /etc/X11/xorg.conf

and attempted to re-create the xorg.conf X configuration with command:

ubuntu:~# dpkg-reconfigure xserver-xorg

This command was reported to be the usual way to reconfigure the X server settings from console, but in my case (for unknown reasons) it did nothing.

Next the command which was able to re-generate the xorg.conf file was:

ubuntu:~# X -configure

The command generates a xorg.conf sample file in /root/xorg.conf.* so I used the conf to put it in /etc/X11/xorg.conf X's default location and restarted in hope that this would fix the non-booting issue.

Very sadly again the black screen of death appeared on the notebook toshiba screen.

I further thought of completely wipe out the xorg.conf in hope that at least it might boot without the conf file but this worked out neither.

I attempted to run the Xserver with a xorg.conf configured to work with vesa as it's well known vesa X server driver is supposed to work on 99% of the video cards, as almost all of them nowdays are compatible with the vesa standard, but guess what in my case vesa worked not!

The only version of X I can boot in was the failsafe X screen mode which is available through the grub's boot menu recovery mode.

Further on I decided to try few xorg.conf which I found online and were reported to work fine with Intel GM965 internal video , and yes this was also unsucessful.

Some of my other futile attempts were: to re-install the xorg server with apt-get, reinstall the xserver-xorg-video-intel driver e.g.:

ubuntu:~# apt-get install --reinstall xserver-xorg xserver-xorg-video-intel

As nothing worked out I was completely pissed off and decided to take an alternative approach which will take a lot of time but at least will probably be succesful, I decided to completely re-install the Ubuntu from a CD after backing up the /home directory and making a list of available packages on the system, so I can further easily run a tiny bash one-liner script to install all the packages which were previously existing on the laptop before the re-install:

Here is how I did it:

First I archived the /home directory:

ubuntu:/# tar -czvf home.tar.gz home/

....

For 12GB of data with some few thousands of files archiving it took about 40 minutes.

The tar spit archive became like 9GB and I hence used sftp to upload it to a remote FTP server as I was missing a flash drive or an external HDD where I can place the just archived data.

Uploading with sftp can be achieved with a command similar to:

sftp user@yourhost.com

Password:

Connected to yourhost.com.

sftp> put home.tar.gz

As a next step to backup in a file the list of all current installed packages, before I can further proceed to boot-up with the Ubuntu Maverich 10.10 CD and prooceed with the fresh install I used command:

for i in $(dpkg -l| awk '{ print $2 }'); do

echo $i; done >> my_current_ubuntu_packages.txt

Once again I used sftp as in above example to upload my_current_update_packages.txt file to my FTP host.

After backing up all the stuff necessery, I restarted the system and booted from the CD-rom with Ubuntu.

The Ubuntu installation as usual is more than a piece of cake and even if you don't have a brain you can succeed with it, so I wouldn't comment on it 😉

Right after the installation I used the sftp client once again to fetch the home.tar.gz and my_current_ubuntu_packages.txt

I placed the home.tar.gz in /home/ and untarred it inside the fresh /home dir:

ubuntu:/home# tar -zxvf home.tar.gz

Eventually the old home directory was located in /home/home so thereon I used Midnight Commander ( the good old mc text file explorer and manager ) to restore the important user files to their respective places.

As a last step I used the my_current_ubuntu_packages.txt in combination with a tiny shell script to install all the listed packages inside the file with command:

ubuntu:~# for i in $(cat my_current_ubuntu_packagespackages.txt); do

apt-get install --yes $i; sleep 1;

done

You will have to stay in front of the computer and manually answer a ncurses interface questions concerning some packages configuration and to be honest this is really annoying and time consuming.

Summing up the overall time I spend with this stupid Toshiba Satellite L40 with the shitty Intel GM965 was 4 days, where each day I tried numerous ways to fix up the X and did my best to get through the blank screen xserver non-bootable issue, without a complete re-install of the old Ubuntu system.

This is a lesson for me that if I stumble such a shitty issues I will straight proceed to the re-install option and not loose my time with non-sense fixes which would never work.

Hope the article might be helpful to somebody else who experience some problems with Linux similar to mine.

After all at least the Ubuntu Maverick 10.10 is really good looking in general from a design perspective.

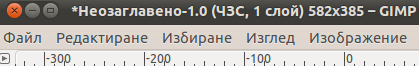

What really striked me was the placement of the close, minimize and maximize window buttons , it seems in newer Ubuntus the ubuntu guys decided to place the buttons on the left, here is a screenshot:

I believe the solution I explain, though very radical and slow is a solution that would always work and hence worthy 😉

Let me hear from you if the article was helpful.