Most Linux distributions have introduced the NetworkManager service and are slowly pushing out the old ways in favor of managing all network configuration with it. This is very helpful at times, especially if you run Linux on a laptop, but on servers it is a guarantee for trouble.

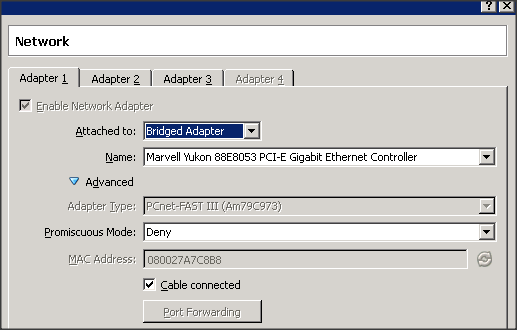

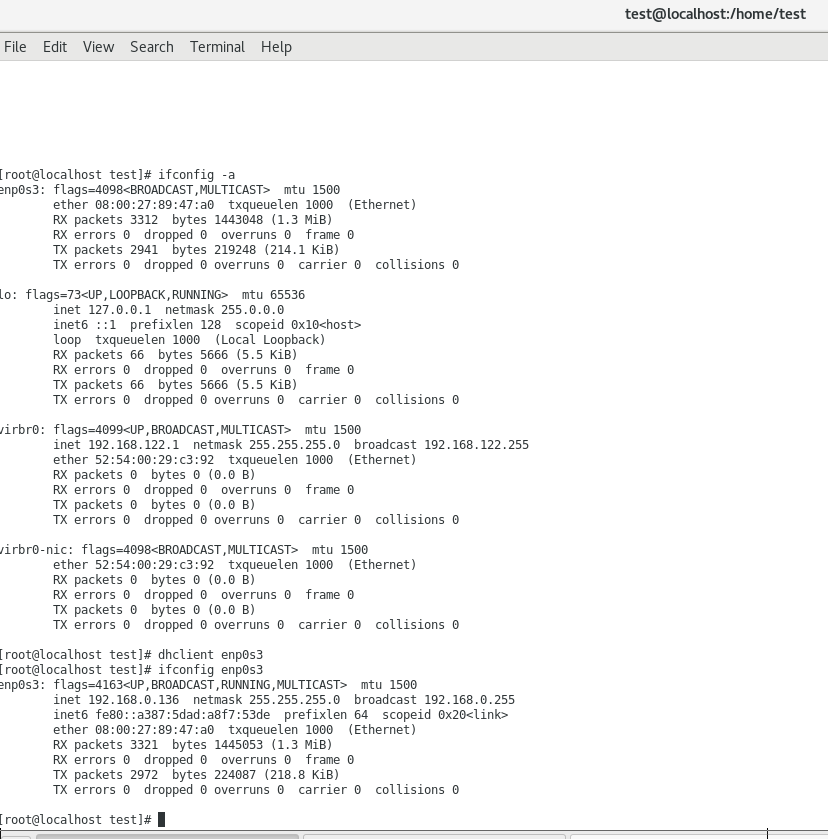

If you are a system administrator like me and you need to configure a new server with, let's say, 8 LAN cards (Ethernet interfaces), each with a different IP, and a mixed configuration where some of eth1, eth2 etc. (say 4 of the interfaces) must have static IPs while the others take theirs from a DHCP lease, NetworkManager is not something you will want, as you usually don't expect the network IP topology to change any time soon. Below is an example from a live hypervisor server machine that has 8 network interfaces configured, together with a few virtual interfaces used by the running KVM virtual machines.

[root@redhat :~ ]# ip address show |grep ": <"

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

2: ens1f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master team0 state UP group default qlen 1000

3: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master team0 state UP group default qlen 1000

4: ens1f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master br2 state UP group default qlen 1000

5: ens1f2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

6: eno2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master br1 state UP group default qlen 1000

7: ens1f3: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

8: eno3: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

9: eno4: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

10: venet0: <BROADCAST,POINTOPOINT,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default

11: br1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

12: br2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

13: team0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br0 state UP group default qlen 1000

14: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

15: host-routed: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

16: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

17: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

18: virbr1: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

19: virbr1-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr1 state DOWN group default qlen 1000

26: vme52540019e701: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br0 state UNKNOWN group default qlen 1000

27: vme52540081868b: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br1 state UNKNOWN group default qlen 1000

28: vme525400a13f03: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br2 state UNKNOWN group default qlen 1000

Having NM manage so many LAN-connected Ethernet interfaces can bring you A LOT of surprises, even if your servers sit in a highly secured data center where the chance of a sudden IP change or network misbehavior is minimal. Even so, someone in the housing facility might do something wrong on the rack, mixing things up with another server or switch, and your server can easily end up with unexplainable network problems because of this NM service, which is trying 'to balance' any network issues according to some algorithms …

Thus, to save yourself the trouble, completely disable NetworkManager (NM) handling of the Ethernet interfaces.

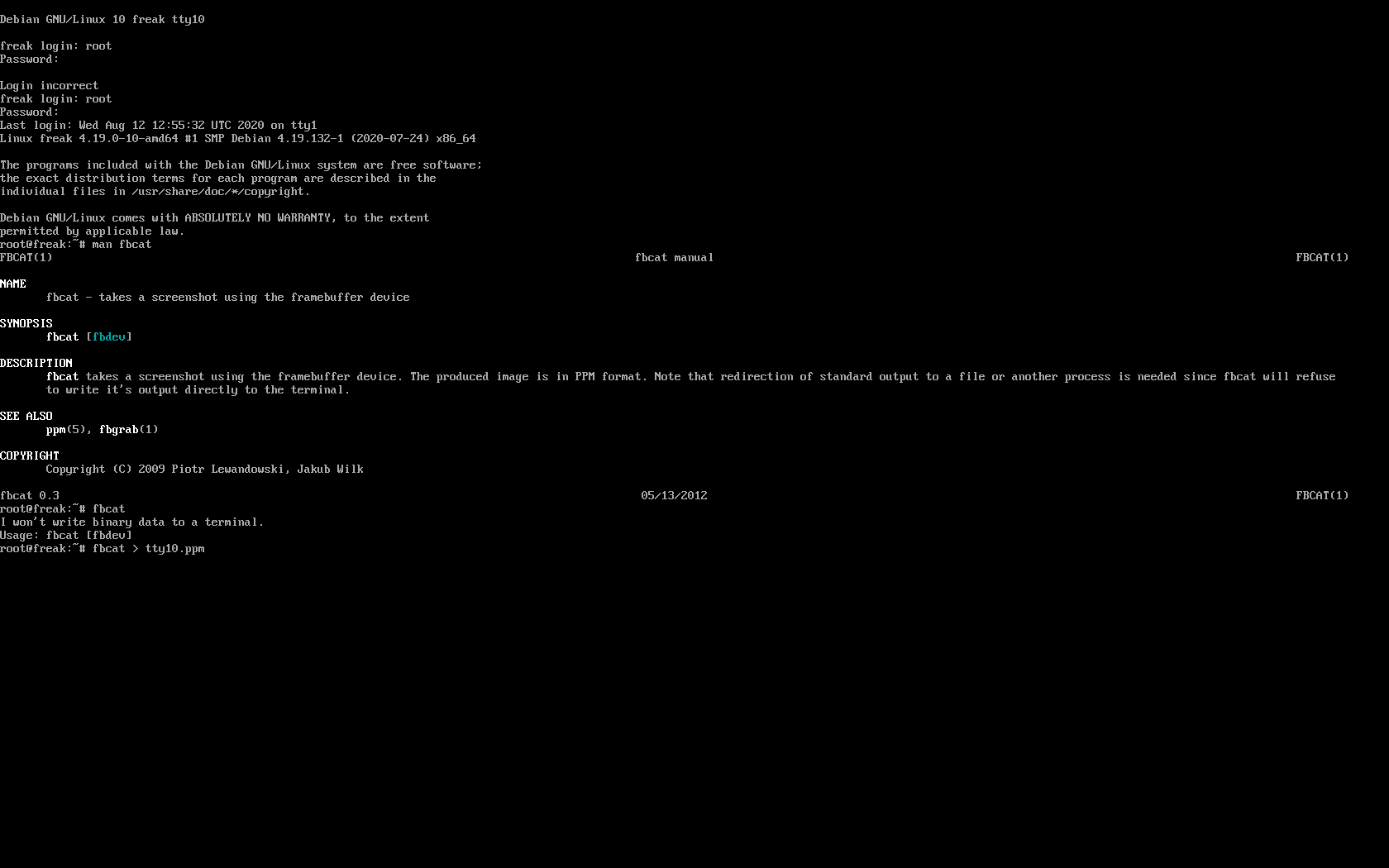

As a hint, you are especially likely to run into such trouble if the system hardware has issues with the integrated motherboard LAN controllers, as on the Dell PowerEdge R640 rack server.

I recently observed this on one such rack-mounted Dell machine that I had to configure from scratch. It had NM preinstalled out of the box by a colleague, and NM was doing strange stuff with the routing, causing the machine to become remotely inaccessible after reboot.

Even though I had configured the IPs, double- and triple-checked the configuration, and the machine had a proper set of /etc/sysconfig/network-scripts/ifcfg-* files, it still failed to boot with the network properly brought up and became unreachable over remote SSH immediately after I sent the machine to init 6 with /usr/sbin/init 6 (roughly the same as shutdown -r now :)

On Redhat 8 / CentOS 8, to disable NM permanently you have to disable the NM systemd services and add NM_CONTROLLED=no to each of the Ethernet configuration files under network-scripts/ (ifcfg-eno3, ifcfg-eno4, ifcfg-eno1np0 etc.).

1. Completely disable the NetworkManager service and mask it

[root@redhat :~ ]# systemctl stop NetworkManager.service

[root@redhat :~ ]# systemctl disable NetworkManager.service

[root@redhat :~ ]# systemctl mask NetworkManager.service

2. Check that all NetworkManager systemd units are really disabled

# systemctl list-unit-files | grep NetworkManager

NetworkManager-dispatcher.service disabled

NetworkManager-wait-online.service enabled

NetworkManager.service disabled

NetworkManager-wait-online.service is still enabled, so we have to disable it as well.

[root@redhat :~ ]# systemctl disable NetworkManager-wait-online.service

[root@redhat :~ ]# systemctl mask NetworkManager-wait-online.service

Double-check the NM services:

[root@redhat :~ ]# systemctl list-unit-files | grep NetworkManager

…
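With many units on a system, eyeballing the grep output is easy to get wrong. The following is a minimal sketch of a filter for it, not part of the original procedure: the function name nm_units_still_active is made up for this example, and it assumes the unit name is in the first column and the state in the second, as in the listing in step 2.

```shell
#!/bin/sh
# Hypothetical helper: print every NetworkManager* unit whose state is
# neither "disabled" nor "masked".
# Reads `systemctl list-unit-files` output on stdin and assumes that
# column 1 is the unit file name and column 2 its state.
nm_units_still_active() {
    awk '$1 ~ /^NetworkManager/ && $2 != "disabled" && $2 != "masked" { print $1 }'
}
```

It would be used as systemctl list-unit-files | nm_units_still_active, and printing nothing means no NM unit is left enabled.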

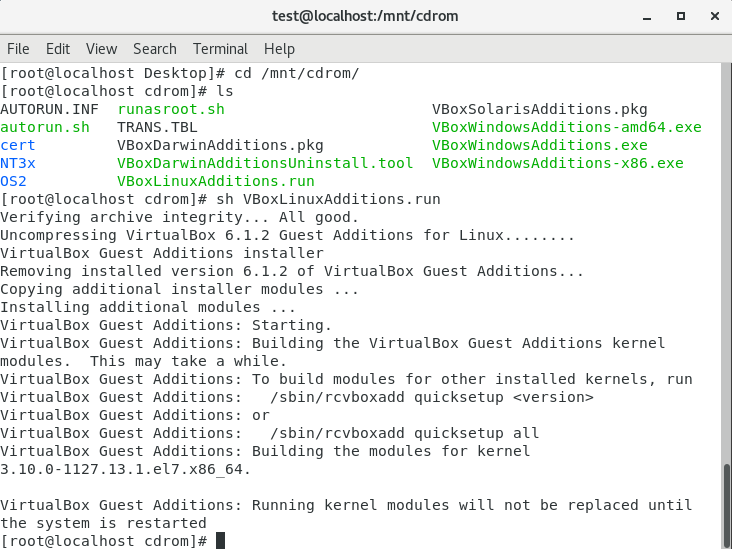

3. Install / Enable old (legacy) network-scripts

network-scripts is disabled (not installed) by default because it doesn't play well with NM.

Install the RPM package to bring it back:

[root@redhat :~ ]# yum install -y network-scripts

4. Test that NetworkManager is really out of the way

Use nmcli, the command-line tool for controlling NetworkManager. If it reports that NM is not running, you're fine:

[root@redhat :~ ]# nmcli device

Error: NetworkManager is not running.

5. Disable the legacy network-scripts deprecation warnings

Bring some interface down with ifdown, Red Hat's script frontend for interface configuration, and bring it back up with ifup iface-name:

# ifup eno4

WARN : [ifup] You are using 'ifup' script provided by 'network-scripts', which are now deprecated.

WARN : [ifup] 'network-scripts' will be removed in one of the next major releases of RHEL.

WARN : [ifup] It is advised to switch to 'NetworkManager' instead – it provides 'ifup/ifdown' scripts as well.

Notice the warnings. They are harmless and safe to ignore, but pretty annoying to see. To disable them:

[root@redhat :~ ]# touch /etc/sysconfig/disable-deprecation-warnings

6. Use the old-fashioned network.service systemd service

From now on you can use the good old, well-known and properly working network.service:

[root@redhat :~ ]# systemctl status network

To enable the network service to start after boot:

[root@redhat :~ ]# systemctl enable network
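Steps 1 to 6 can be collected into one small script. This is only a sketch under a few assumptions: the run wrapper, the DRY_RUN variable and the function name are invented for this example, and the package is installed first so that yum still has a working network while it downloads. By default nothing is executed, the commands are only printed.

```shell
#!/bin/sh
# Hypothetical wrapper: with DRY_RUN=1 (the default) a command is only
# printed; with DRY_RUN=0 it is really executed (needs root).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Same commands as in steps 1 to 6, in one place.
switch_to_network_scripts() {
    # Install the legacy scripts while the network is certainly still up
    run yum install -y network-scripts

    # Stop, disable and finally mask both NM units seen in step 2
    for unit in NetworkManager.service NetworkManager-wait-online.service; do
        run systemctl stop "$unit"
        run systemctl disable "$unit"
        run systemctl mask "$unit"
    done

    # Silence the ifup / ifdown deprecation warnings
    run touch /etc/sysconfig/disable-deprecation-warnings

    # Let the old network service start at boot
    run systemctl enable network
}
```

Calling switch_to_network_scripts prints the command list for review. Setting DRY_RUN=0 before the call runs it for real, which is best done from a local console rather than over SSH.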

7. Disable NetworkManager control in the ifcfg-* configuration scripts of all configured Ethernet cards on the server

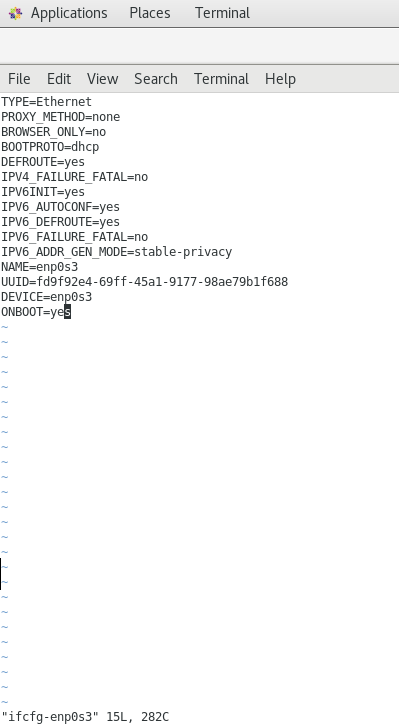

Open every network script with a text editor and append NM_CONTROLLED="no" to the end of the file.

[root@redhat :~ ]# vi /etc/sysconfig/network-scripts/ifcfg-ethernetX

NM_CONTROLLED="no"
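For orientation, here is roughly what a static and a DHCP interface file look like once the line is in place. It is an illustration only: the interface names eth1 and eth2, the 192.0.2.10 address (taken from a documentation range) and the helper name write_sample_ifcfg are all assumptions, and the helper writes into whatever scratch directory you pass it so that nothing real is touched.

```shell
#!/bin/sh
# Hypothetical helper: write two illustrative ifcfg files into the
# directory given as $1. Names and addresses are placeholders only.
write_sample_ifcfg() {
    dir=$1

    # Interface with a static address, kept away from NetworkManager
    cat > "$dir/ifcfg-eth1" <<'EOF'
DEVICE=eth1
TYPE=Ethernet
BOOTPROTO=none
IPADDR=192.0.2.10
PREFIX=24
ONBOOT=yes
NM_CONTROLLED="no"
EOF

    # Interface that takes its address from a DHCP lease
    cat > "$dir/ifcfg-eth2" <<'EOF'
DEVICE=eth2
TYPE=Ethernet
BOOTPROTO=dhcp
ONBOOT=yes
NM_CONTROLLED="no"
EOF
}
```

For example, write_sample_ifcfg /tmp/ifcfg-demo (after creating that directory) leaves two files you can compare against your real ones.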

To save yourself time, if you want to disable NetworkManager for all /etc/sysconfig/network-scripts/ifcfg-* files, use a simple shell loop:

[root@redhat :~ ]# cd /etc/sysconfig/network-scripts/

[root@redhat :/etc/sysconfig/network-scripts ]# for i in ifcfg-*; do echo 'NM_CONTROLLED="no"' >> "$i"; done
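A loop that appends adds one more line every time it is run, and it does not care whether a file already says NM_CONTROLLED=yes further up. A slightly more careful variant could look like the sketch below. The function name set_nm_controlled_no and the list of skipped backup suffixes are assumptions for this example, not something the network-scripts package provides.

```shell
#!/bin/sh
# Hypothetical helper: set NM_CONTROLLED="no" in every ifcfg-* file of a
# directory (default: the network-scripts directory). An existing
# NM_CONTROLLED line is replaced, so a second run adds no duplicates.
set_nm_controlled_no() {
    dir=${1:-/etc/sysconfig/network-scripts}
    for f in "$dir"/ifcfg-*; do
        [ -f "$f" ] || continue          # the glob matched nothing
        case $f in
            *.bak|*.orig|*.rpmsave|*~) continue ;;   # leave backup copies alone
        esac
        tmp=$(mktemp)
        # Keep everything except old NM_CONTROLLED lines, then add the new one
        grep -v '^[[:space:]]*NM_CONTROLLED=' "$f" > "$tmp" || true
        echo 'NM_CONTROLLED="no"' >> "$tmp"
        # Write back in place so owner, mode and SELinux context stay as they were
        cat "$tmp" > "$f"
        rm -f "$tmp"
    done
}
```

Run without arguments it works on /etc/sysconfig/network-scripts; pass a copy of the directory first if you want to see the result before touching the real files.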

To load the new network settings, do another network restart:

[root@redhat :~ ]# systemctl restart network
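On a remote machine it may be worth checking, before the restart, that no interface file was missed. The read-only check below is a sketch: the function name list_nm_managed_ifcfg is made up, and it only recognizes the spellings NM_CONTROLLED=no and NM_CONTROLLED="no".

```shell
#!/bin/sh
# Hypothetical helper: list the ifcfg-* files in a directory that do NOT
# contain NM_CONTROLLED=no (quoted or unquoted). Prints nothing when
# every file is covered. Nothing is modified.
list_nm_managed_ifcfg() {
    dir=${1:-/etc/sysconfig/network-scripts}
    for f in "$dir"/ifcfg-*; do
        [ -f "$f" ] || continue          # the glob matched nothing
        grep -Eq '^[[:space:]]*NM_CONTROLLED="?no"?[[:space:]]*$' "$f" || echo "$f"
    done
}
```

Any file name it prints is one that NetworkManager would still consider its own.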

To disable NetworkManager on older CentOS 6 / Redhat 6 / SuSE / Fedora Linux releases, where the OS is not yet systemd-enabled, skip systemctl and do it directly with the old and well-known service and chkconfig Red Hat scripts.

[root@centos6 :~ ]# service NetworkManager stop

[root@centos6 :~ ]# chkconfig NetworkManager off