In a Web application data migration project, I've come across a situation where I have to copy / transfer 500 Gigabytes of data from Linux server 1 (host A) to Linux server 2 (host B). However the two machines doesn't have direct access to each other (via port 22) for security reasons and hence I cannot use sshfs to mount remotely host dir via ssh and copy files like local ones.

As this is a data migration project its however necessery to migrate the data finding a way … Normal way companies do it is to copy the data to External Hard disk storage and send it via some Country Post services or some employee being send in Data center to attach the SAN to new server where data is being migrated However in my case this was not possible so I had to do it different.

I have access to both servers as they're situated in the same Corporate DMZ network and I can thus access both UNIX machines via SSH.

Thanksfully there is a small SSH protocol + TAR archiver and default UNIX pipe's capabilities hack that makes possible to transfer easy multiple (large) files and directories. The only requirement to use this nice trick is to have SSH client installed on the middle host from which you can access via SSH protocol Server1 (from where data is migrated) and Server2 (where data will be migrated).

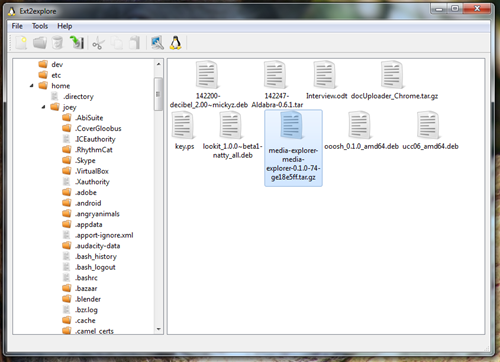

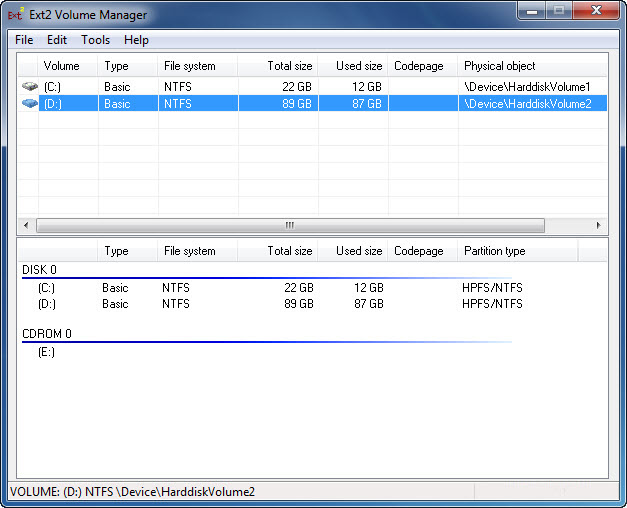

If the hopping / jump server from which you're allowed to have access to Linux servers Server1 and Server2 is not Linux and you're missing the SSH client and don't have access on Win host to install anything on it just use portable mobaxterm (as it have Cygwin SSH client embedded )

Here is how:

jump-host:~$ ssh server1 "tar czf – /somedir/" | pv | ssh server2 "cd /somedir/; tar xf

As you can see from above command line example an SSH is made to server1 a tar is used to archive the directory / directories containing my hundred of gigabytes and then this is passed to another opened ssh session to server 2 via UNIX Pipe mechanism and then TAR archiver is used second time to unarchive previously passed archived content. pv command which is in the middle is not obligitory though it is a nice way to monitor status about data transfer like below:

500GB 0:00:01 [10,5MB/s] [===================================================>] 27%

P.S. If you don't have PV installed install it either with apt-get on Debian:

debian:~# apt-get install –yes pv

Or on CentOS / Fedora / RHEL etc.

[root@centos ~]# yum -y install pv

Below is a small chunk of PV manual to give you better idea of what it does:

NAME

pv – monitor the progress of data through a pipeSYNOPSIS

pv [OPTION] [FILE]…

pv [-h|-V]DESCRIPTION

pv allows a user to see the progress of data through a pipeline, by giving information such as time elapsed, percentage

completed (with progress bar), current throughput rate, total data transferred, and ETA.To use it, insert it in a pipeline between two processes, with the appropriate options. Its standard input will be passed

through to its standard output and progress will be shown on standard error.pv will copy each supplied FILE in turn to standard output (- means standard input), or if no FILEs are specified just

standard input is copied. This is the same behaviour as cat(1).A simple example to watch how quickly a file is transferred using nc(1):

pv file | nc -w 1 somewhere.com 3000

A similar example, transferring a file from another process and passing the expected size to pv:

cat file | pv -s 12345 | nc -w 1 somewhere.com 3000

Note that with too big file transfers using PV will delay data transfer because everything will have to pass through another 2 pipes, however for file transfers up to few gigabytes its really nice to include it.

If you only need to transfer huge .tar.gz archive and you don't bother about traffic security (i.e. don't care whether transferred traffic is going through encrypted SSH tunnel and don't want to put an overhead to both systems for encrypting the data and you have some unfiltered ports between host 1 and host 2 you can run netcat on host 2 to listen for connections and forward .tar.gz content via netcat's port like so:

linux2:~$ nc -l -p 12345 > /path/destinationfile

linux2:~$ cat /path/sourcfile | nc desti.nation.ip.address 12345

Another way to transfer large data without having connection with server1 and server2 but having connection to a third host PC is to use rsync and good old SSH Tunneling, like so:

jump-host:~$ ssh -R 2200:Linux-server1:22 root@Linux-server2 "rsync -e 'ssh -p 2200' –stats –progress -vaz /directory/to/copy root@localhost:/copy/destination/dir"

Remove URL from comments in WordPress Blogs and Websites to mitigate comment spam URLs in pages

Friday, February 20th, 2015If you're running a WordPress blog or Website where you have enabled comments for a page and your article or page is well indexing in Google (receives a lot of visit / reads ) daily, your site posts (comments) section is surely to quickly fill in with a lot of "Thank you" and non-sense Spam comments containing an ugly link to an external SPAM or Phishing website.

Such URL links with non-sense message is a favourite way for SPAMmers to raise their website incoming (other website) "InLinks" and through that increase current Search Engine position.

We all know a lot of comments SPAM is generally handled well by Akismet but unfortunately still many of such spam comments fail to be identified as Spam because spam Bots (text-generator algorithms) becomes more and more sophisticated with time, also you can never stop paid a real-persons Marketers to spam you with a smart crafted messages to increase their site's SEO ).

In all those cases Akismet WP (Anti-Spam) plugin – which btw is among the first "must have" WP extensions to install on a new blog / website will be not enough ..

To fight with worsening SEO because of spam URLs and to keep your site's SEO better (having a lot of links pointing to reported spam sites will reduce your overall SEO Index Rate) many WordPress based bloggers, choose to not use default WordPress Comments capabilities – e.g. use exnternal commenting systems such as Disqus – (Web Community of Communities), IntenseDebate, LiveFyre, Vicomi …

However as Disqus and other 3rd party commenting systems are proprietary software (you don't have access to comments data as comments are kept on proprietary platform and shown from there), I don't personally recommend (or use) those ones, yes Disqus, Google+, Facebook and other comment external sources can have a positive impact on your SEO but that's temporary event and on the long run I think it is more advantageous to have comments with yourself.

A small note for people using Disqos and Facebook as comment platforms – (just imagine if Disqos or Facebook bankrupts in future, where your comments will be? 🙂 )

So assuming that you're a novice blogger and I succeeded convincing you to stick to standard (embedded) WordPress Comment System once your site becomes famous you will start getting severe amount of comment spam. There is plenty of articles already written on how to remove URL comment form spam in WordPress but many of the guides online are old or obsolete so in this article I will do a short evaluation on few things I tried to remove comment spam and how I finally managed to disable URL link spam to appear on site.

1. Hide Comment Author Link (Hide-wp-comment-author-link)

This plugin is the best one I found and I started using it since yesterday, I warmly recommend this plugin because its very easy, Download, Unzip, Activate and there you're anything typed in URL field will no longer appear in Posts (note that the URL field will stay so if you want to keep track on person's input URL you can get still see it in Wp-Admin). I'm using default WordPress WRC (Kubrick), but I guess in most newer wordpress plugins is supposed to work. If you test it on another theme please drop a comment to inform whether works for you. Hide Comment Author Link works on current latest Wordpress 4.1 websites.

A similar plugin to hide-wp-author-link that works and you can use is Hide-n-Disable-comment-url-field, I tested this one but for some reason I couldn't make it work.

Whatever I type in Website field in above form, this is wiped out of comment once submitted 🙂

2. Disable hide Comment URL (disable-hide-comment-url)

I've seen reports disable-hide-comment-url works on WordPress 3.9.1, but it didn't worked for me, also the plugin is old and seems no longer maintaned (its last update was 3.5 years ago), if it works for you please please drop in comment your WP version, on WP 4.1 it is not working.

3. WordPress Anti-Spam plugin

WordPress Anti-Spam plugin is a very useful addition plugin to install next to Akismet. The plugin is great if you don't want to remove commenter URL to show in the post but want to cut a lot of the annoying Spam Robots crawling ur site.

Anti-spam plugin blocks spam in comments automatically, invisibly for users and for admins.

Plugin is easy to use: just install it and it just works.

Anti bot works fine on WP 4.1

4. Stop Spam Comments

Stop Spam Comments is:

Stop Spam Comments works fine on WP 4.1.

I've mentioned few of the plugins which can help you solve the problem, but as there are a lot of anti-spam URL plugins available for WP its up to you to test and see what fits you best. If you know or use some other method to protect yourself from Comment Url Spam to share it please.

Import thing to note is it usually a bad idea to mix up different anti-spam plugins so don't enable both Stop Spam Comments and WordPress Anti Spam plugin.

5. Comment Form Remove Url field Manually

This (Liberian) South) African blog describes a way how to remove URL field URL manually

In short to Remove Url Comment Field manually either edit function.php (if you have Shell SSH access) or if not do it via Wp-Admin web interface:

Paste at the end of file following PHP code:

Now to make changes effect, Restart Apache / Nginx Webserver and clean any cache if you're using a plugin like W3 Total Cache plugin etc.

Other good posts describing some manual and embedded WordPress ways to reduce / stop comment spam is here and here, however as it comes to my blog, none of the described manual (code hack) ways I found worked on WordPress v. 4.1.

Thus I personally stuck to using Hide and Disable Comment URL plugin to get rid of comment website URL.

Tags: article, bloggers, btw, data, interface, lot, page, php, platform, problem, running, Search Engine, SEO, spam, Spam Robots, Stop Spam Comments, theme, URLs, use, website, Wordpress Comments, wordpress plugins, wp

Posted in Curious Facts, Various, Web and CMS, Wordpress | 2 Comments »