Earlier I've wrote extensive article on how to get hardware information on Linux using tools such as dmidecode, hardinfo, lshw, hwinfo, x86info and biosdecode but there are few other hardware reporting tools for Linux worthy to mention that has been there for historical reasons such as cpuinfo as we as some new shiny ones such as neofetch (a terminal / console hardware report tool as well the CPU-X and I-Nex which is Linux equivalent to the all known almost standard for Windows hardware detection CPU-Z worthy to say few words about.

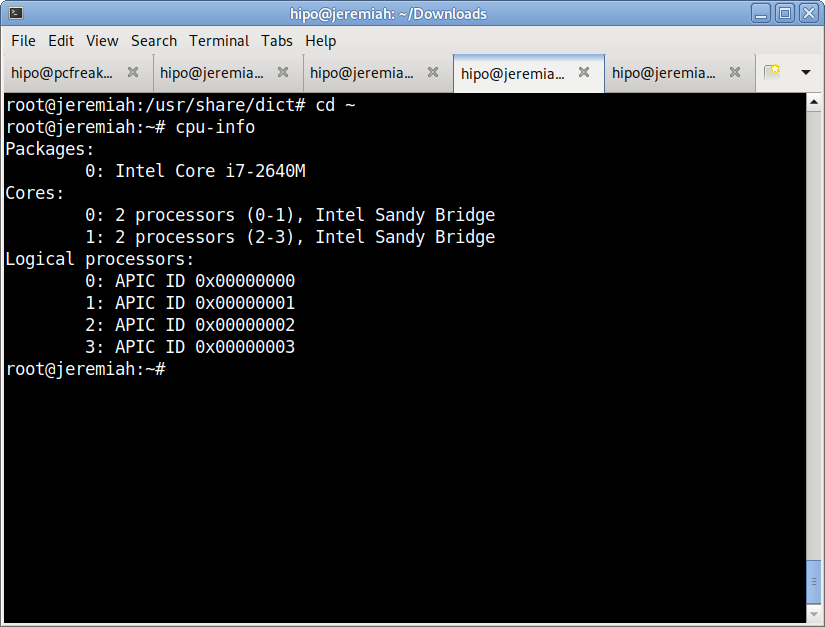

1. cpuinfo

Perhaps the most basic tool to give you a brief information about your Processor type (model) number of Cores and Logical Processors is cpuinfo

I remember cpuinfo has been there since the very beginning on almost all Linux distributions's repository, nowadays its popularity of the days when the kings on the Linux OS server scenes were Slackware, Caldera OpenLinux and Redhat 6.0 Linux and Debian 3.0 declined but still for scripting purposes it is handy small proggie.

To install and run it in Debian / Ubuntu / Mint Linux etc.:

aptitude install -y cpuinfo

…

/usr/bin/cpu-info

2. neofetch

The next one worthy to install and check is neofetch (a cross-platform and easy-to-use system information

command line script that collects your Linux system information and display it on the terminal next to an image, it could be your distributions logo or any ascii art of your choice.)

The cool thing about neofetch is besides being able to identify the System server / desktop hardware parameters, it gives some basic info about number of packages installed on the system, memory free and in use, used kernel and exact type of System (be it Dell PowerEdge Model XX, IBM eSeries Model / HP Proliant Model etc.

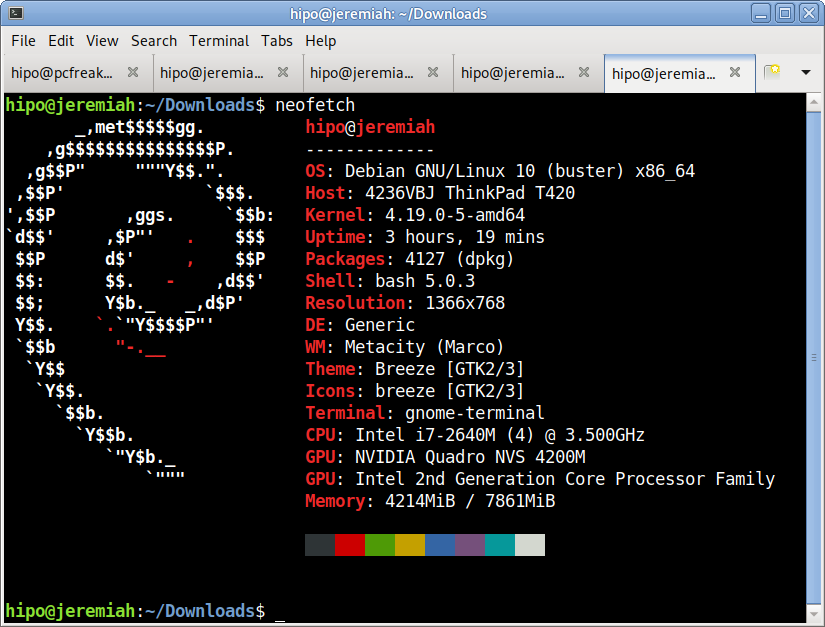

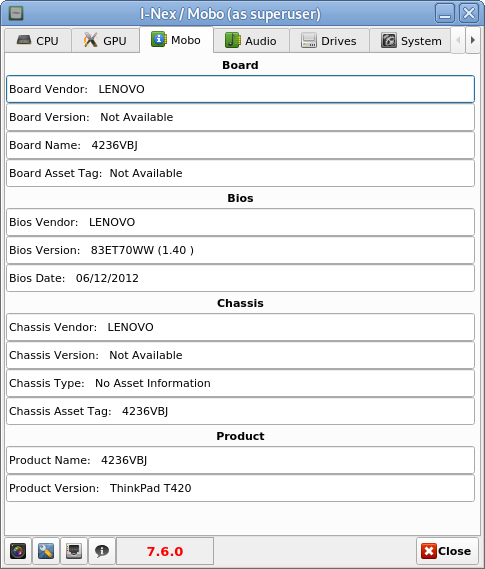

neofetch info generated on my home used Lenovo Thikpad T420

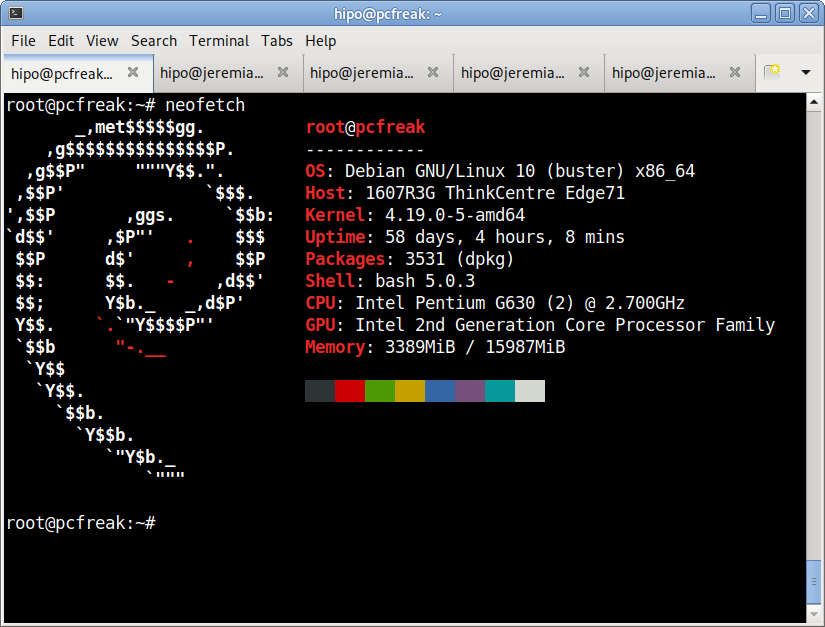

neofetch info from www.pc-freak.net running current machine

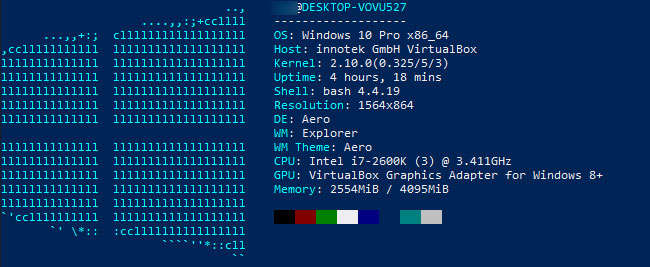

neofetch even supports Mac OS X and Windows OS ! 🙂

To install neofetch on Mac OS X:

/usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

or via Mac ported packages using brew

brew install neofetch

neofetch is even installable on Windows OS that has the scoop command line installer tool installer manager with below PowerShell code in cmd.exe (Command line):

powershell Set-ExecutionPolicy RemoteSigned -scope CurrentUser

iex (new-object net.webclient).downloadstring('https://get.scoop.sh')

scoop install git

scoop install neofetch

By the way Scoop was quite a finding for me and it is pretty handy to install plenty of useful command line Linux / UNIX tools, such as curl, wget, git etc. in the same easy straight forward way as a standard yum or apt-get on Windows (without explicitly installing things as GnuWin and CygWin).

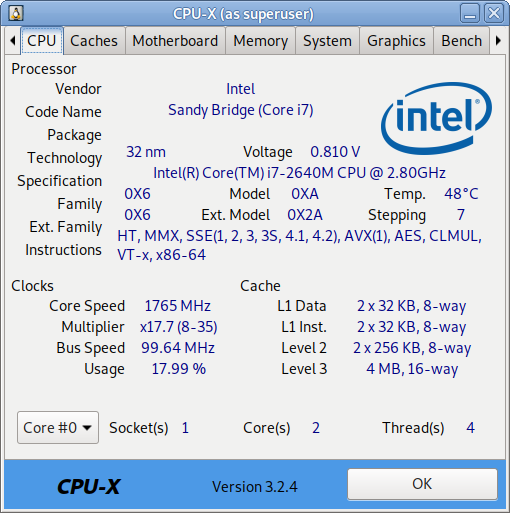

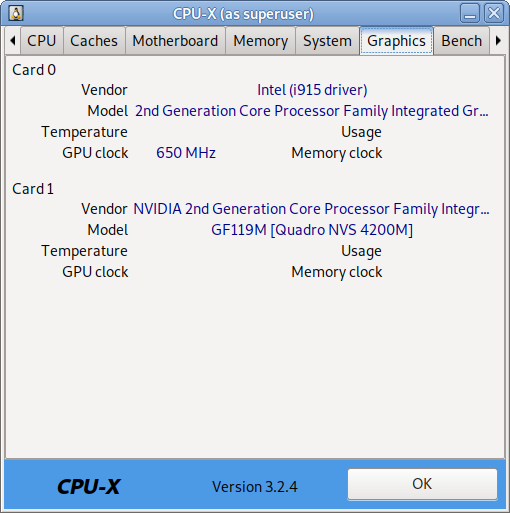

3. CPU-X graphical user interface hardware report Linux GUI alternative to Windows CPU-Z

The packages for CPU-X are a bit outdated and even though there are rpm packages for Fedora, OpenSuSE and .deb package for Debian for Debian, Ubuntu and ArchLinux (pacman), there is no up to date version for Debian 10 and the package builds distributed for different Linux distros are a bit outdated.

Thus to install CPU-X on any Linux distribution it is perhaps best to use the portable version (static binary) of CPU-X.

It is currently available on https://github.com/X0rg/CPU-X/releases

To install latest portable version of CPU-X

wget https://github.com/X0rg/CPU-X/releases/download/v3.2.4/CPU-X_v3.2.4_portable.tar.gz

…

mkdir CPU-X

cd CPU-X

tar -zxvvf CPU-X_v3.2.4_portable.tar.gz

-rwxr-xr-x yohan/users 4563032 2019-01-13 22:15 CPU-X_v3.2.4_portable.bsd64

-rwxr-xr-x yohan/users 5484968 2019-01-13 22:15 CPU-X_v3.2.4_portable.linux64

cp -rpf CPU-X_v3.2.4_portable.linux64 /usr/local/bin/

ln -sf /usr/local/bin/CPU-X_v3.2.4_portable.linux64 /usr/local/bin/cpu-x

Next run as superuser (root)

hipo@jeremiah:~$ su -c 'cpu-x'

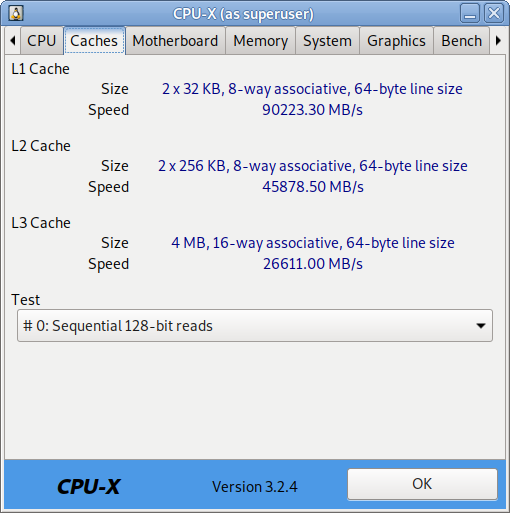

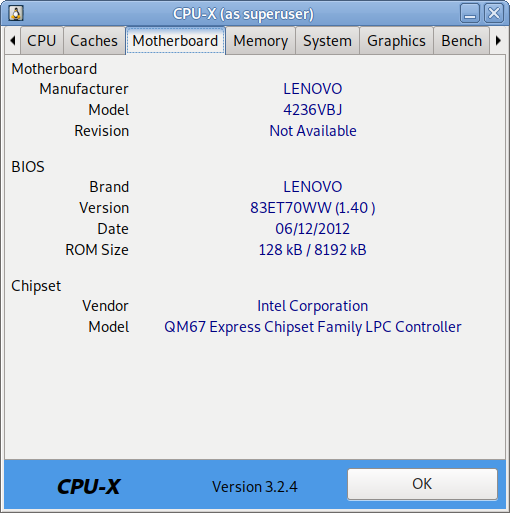

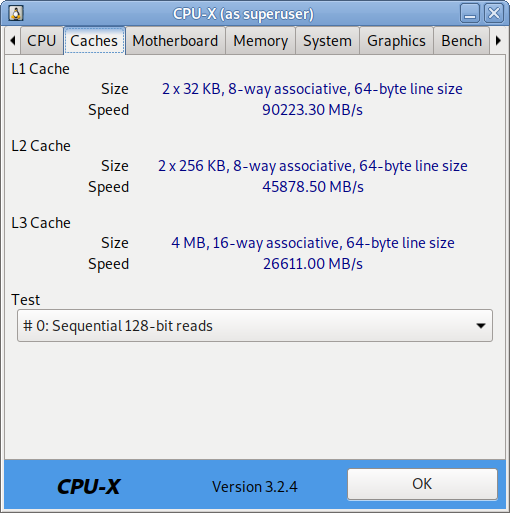

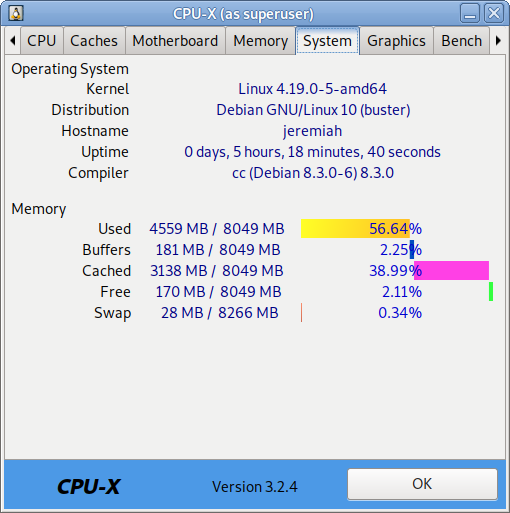

As seen from below screenshots cpu-x reports a lot of concrete specific hardware data on:

- Processor

- Motherboard

- Memory

- System

- Graphic card

- Performance

CPU-X can be installed also on FreeBSD very easily by just installing from BSD port tree sysutils/cpu-x/

It is also said to work on other *BSDs, NetBSD, OpenBSD Unixes but I guess this will require a manual compilation based on FreeBSD's port Makefile.

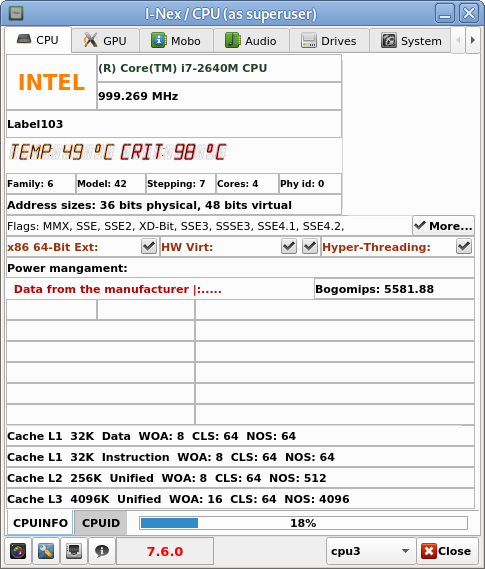

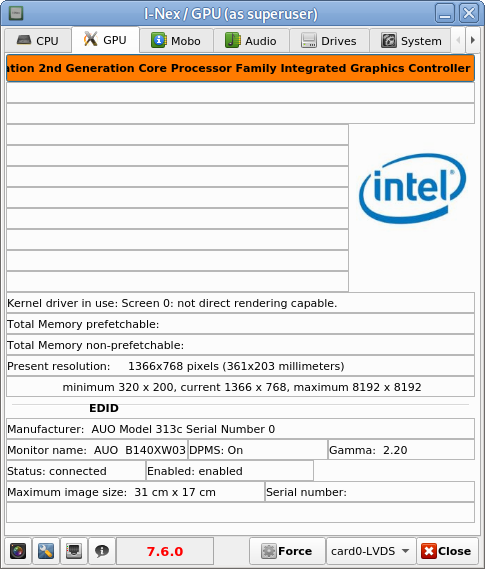

4. I-Nex another GUI alternative to CPU-Z for UNIX / Linux

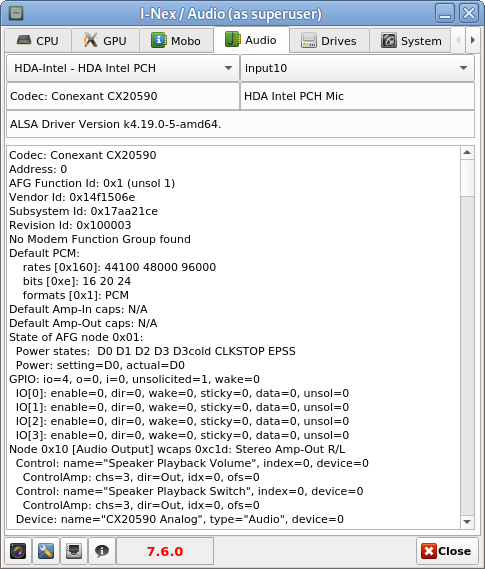

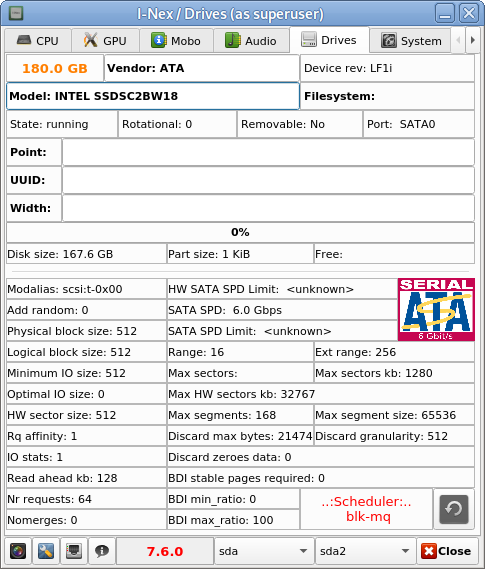

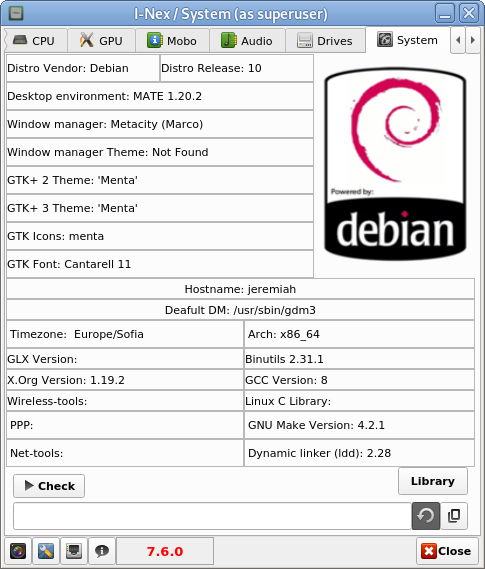

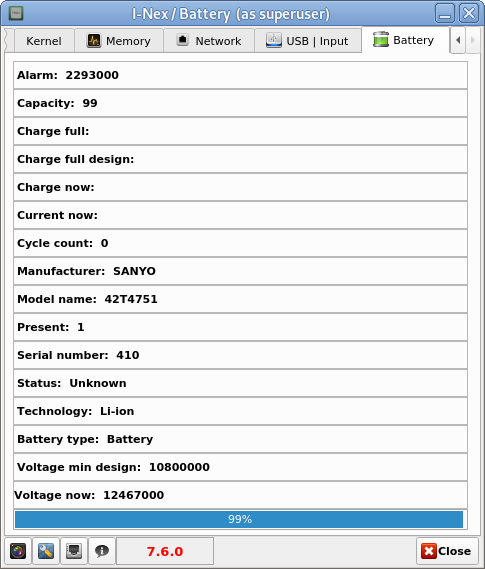

I-Nex is even more useful for general hardware reporting as it reports many hardware specifications not reported by CPU-X such as Battery type and Model Name (if the hardware report is on a laptop), info on USB devices slots or plugged USB devices brand and specifications, the available Network devices on the system (MAC Addresses) of each of it, Installed and used drivers on Hard Disk (ATA / SATA / SCSI / SSD), HW Sector size, Logical Block size, HDD Sectors count and other specific Hard Drive data as well as information on available Audio (Sound Blaster) devices (HDA-Intel), used Codecs, loaded kernel ALSA driver, Video card used and most importantly indicators on Processor reported CPU (temperature).

To install I-nex

Go to https://launchpad.net/i-nex or any of the mirror links where it resides and install the respective package, in my case, I was doing the installation on Debian Linux, so fetched current latest amd64 package which as of moment of writting this article is i-nex_7.6.0-0-bzr977-20161012-ubuntu16.10.1_amd64.deb , next installed it with dpkg

dpkg -i i-nex_7.6.0-0-bzr977-20161012-ubuntu16.10.1_amd64.deb

As the package was depending on some other .deb packages, which failed to install to install the missing ones I had to further run

apt –fix-broken install

hre

I-Nex thermal indicators about CPU temperature on a Linux Desktop notebook

There are other Hardware identification report tools such as CUDA-Z – that are useful to check if you have Nvidia Video Card hardware Installed on the PC to check the status of CUDA enabled GPUs, useful if working with nVidia Geforce, Quadro, Tesla cards and ION chipsets.

If you use it however be aware that CUDA-Z is not compatible with 3rd-party linux drivers for NVidia so make sure you have the current official Nvidia version.

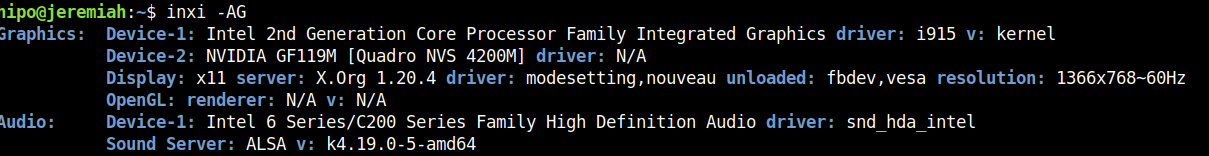

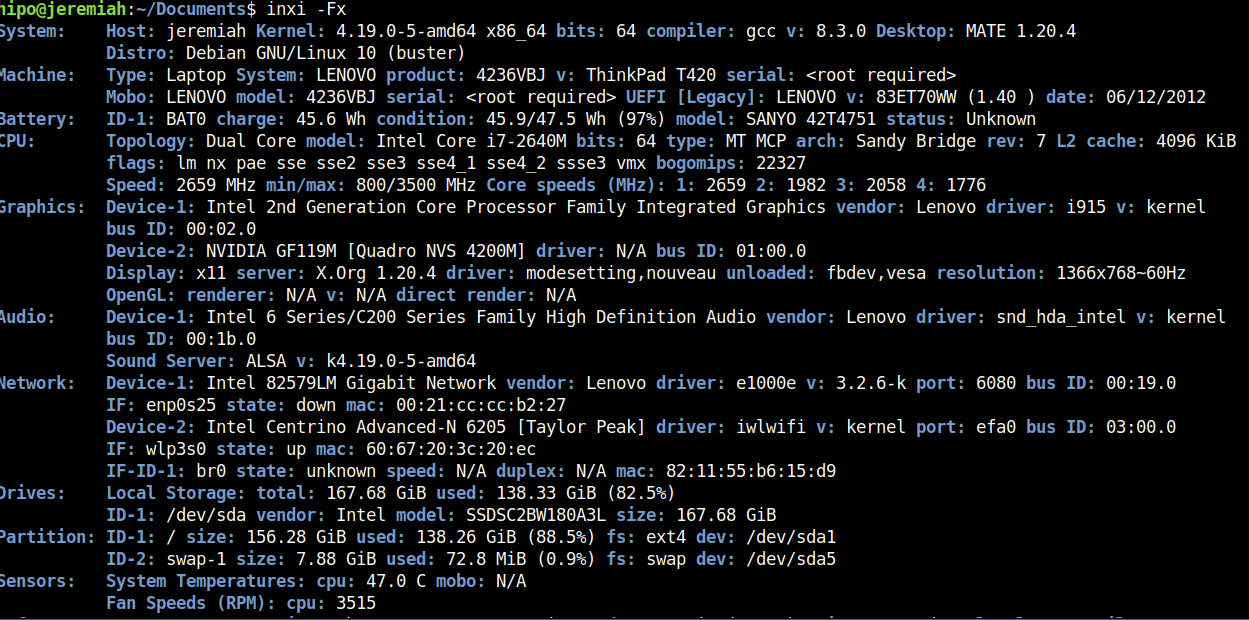

5. Inxi full featured system information script

Inxi is a 10000 lines mega bash script that fetches hardware details from multiple different sources in /proc /sys and from commands on the system, and generates a beautiful looking console report that non technical users can read easily.

inxi -Fx

Each of the pointed above tools has different method of collection of Hardware information from various resources e.g. – kernel loaded modules, dmesg, files like /proc/meminfo /proc/version /proc/scsi/scsi /proc/partitions.

Hence some of the tools are likely to report more info than otheres, so in case if some information you need regarding the system plugged in hardware is missing you can perhaps obtain it from another program. Most Linux distribution desktop provided GNOME package are including Hardinfo gui tool, but in many cases above mentioned tools are likely to add even more on info on what is inside your PC Box.

If you're aware of others tools that are useful not mentioned here please share it.