Saint Markianos (Saint Markian) and Saint Martyrios are little-known saints in the West, and there is very little information in English about these two early martyrs, who lived around the year 340. What is special about them is that, besides being strong confessors of the true Eastern Orthodox faith, they served in the Church as a simple 'reader' and 'sub-deacon'. These two ranks were highly respected in the early Church, as sub-deacons were usually the ones who served constantly as helpers at the Church services of patriarchs or other high clergy such as metropolitans and bishops. The Church has many saints, from simple warriors such as Saint George and Saint Dimitrios the Wonderworker (the Myrrh-streamer) to monks, bishops, patriarchs and practically every kind of person in society, from the beggar to the richest and most famous kings and queens. However, it is rare to find in the Acts of the Martyrs (Latin: Acta Martyrum) canonized saints who stood on the lowest step of the Church hierarchy, as a simple reader of the psalms and holy writings or as a sub-deacon. A sub-deacon, for those who don't know, is a person who acts as a servant and helper to the priest or bishop, responsible for helping with the Church service and with the material and administrative needs of the Christian community.

In the Eastern Orthodox Church, sub-deacons were and still are called hypodeacons ("ipodiakon" in the Greek / Slavonic church language). In those early ages of Christianity, church readers and sub-deacons did not have the right to teach publicly on matters of faith or to do apologetics (defense of the faith). However, these two saintly men, Markianos and Martyrios, seem to have burned with the power of the Spirit of God in their hearts, and they found themselves in a situation where the Church was under persecution and Patriarch Paul I of Constantinople (patriarch from 340 to 350 AD) had been removed from the headship of his Church, sent into exile in Armenia and some time later strangled. Saint Paul is commemorated in the Church on the 6th of November. In this situation, St. Markian and St. Martyrios had either to defend the faith and die for it, or to be afraid and run far away to caves or distant places of the empire, such as villages on the outskirts, far from the central city of Rome …

The heresy of Arius was the newest and most fashionable modification of the faith that claimed to be Christianity, and it gathered followers in a viral way. Because of that, the Arians were in a position where most of the public authorities in the Roman Empire were on their side against the Orthodox Christians.

Because of that, in church communities in the near and distant lands of the empire the Arians fiercely persecuted the Orthodox, and for a time even Emperor Saint Constantine the Great was deceived by their hypocrisy. These were terrible times for true confessors of the faith. But the Arians were not the only ones persecuting Christians: paganism was still deeply rooted in many lands, and the Edict of Milan (Mediolanum), which gave equal rights to religions in AD 313, was not strictly followed. Senators of Roman regions who held pagan beliefs also raised harsh persecutions against their enemies the Christians, who according to them were destroying the ancient culture and beauty of paganism by not venerating the old pagan gods and by opposing the wicked, debauched customs followed by pagans in the 3rd and 4th centuries.

Practically everyone who publicly confessed Jesus Christ as the Creator of the world and the Son of God, one hypostasis of the Holy Trinity (God the Father, the Son and the Holy Spirit), was captured, put in prison and quickly executed if he did not renounce his Christian beliefs.

The Arians gained even more ground when Emperor Constantius II, the son of Constantine I, was set on the throne, as he too had fallen into the heresy of Arianism*. He took into his court as close advisers Eusebius and Philip, who, with their half-pagan, half-Arian, half-superstitious understanding of the world, waged a fierce war against Christianity and did much evil to Christ's Church.

* Arianism holds that Jesus Christ is the Son of God, who was begotten by God the Father and is distinct from the Father (and therefore subordinate to him); the Son is also God the Son, but is not co-eternal with God the Father. Arian theology was first attributed to Arius (c. AD 256–336), a Christian presbyter in Alexandria, Egypt.


Until the dethronement of Patriarch Paul I, St. Markianos and St. Martyrios were notaries of St. Paul (scribes and a kind of personal secretaries to the Patriarch), besides serving as church reader and sub-deacon. They were famous in their time for their warm preaching of the Word of God, the Gospel of Christ, following the example of the apostles. As heresies were on the rise, they also took an active part in writing many documents against the heretical "Arians" and the so-called "Macedonians", who taught anti-Christian teachings that were newly invented and unknown to the ancient teaching of the Church. They had a special gift from God to speak in defense of the faith in such a way that no one, whatever his knowledge or education, could overcome them in disputes on church matters, and many times they disputed with Arian heretics, exposing their fallacies (delusions) and putting them to shame.

After the exile of Patriarch Paul, the Arian heresiarchs turned their poisonous hatred against the patriarch's two pupils, Markianos and Martyrios. Acting slyly, with a crafty lie, they promised them a lot of gold, a good place in the emperor's court, advancement in the church hierarchy (in the part of the Church which already confessed the Arian heresy) and many privileges from the emperor, on condition that they accept, support and confess Arianism.

But God's servants despised everything of this world, rejected the offered gifts of gold, preferred eternal heavenly honors to short and vain worldly ones, and even laughed at them.

When the Arians saw that nothing could win them over to their malicious teaching, the heretics condemned them to death. This was what the confessors desired, for they remembered well the exile and courageous martyrdom of their teacher, St. Patriarch Paul, and with all their being they desired to be with Christ in the eternal mansions prepared for them, where life will be without end in never-ending bliss, as promised by Christ in the Holy Scriptures. They preferred Christ to the enjoyments of this temporary life.

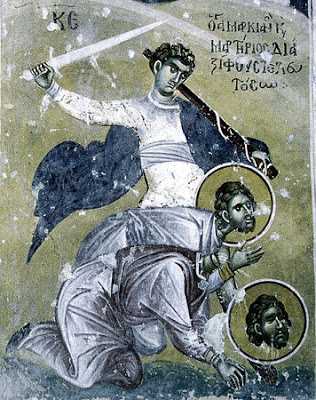


When they were brought to the place of execution, falsely accused and sentenced as blasphemers of Christ, the two saints asked for a short time to pray. They lifted their eyes to heaven and prayed with these words:

"O Lord, who have invisibly created our hearts and who arrange all our deeds ('He formed the hearts of them all; he understands everything they do.' Psalm 33:15), receive in peace the souls of Your servants, because we are put to death for Your name ('Yet for Your sake we are killed all day long; We are accounted as sheep for the slaughter.' Psalm 44:22). We are joyful that You grant us such a death and that we depart from this life for the sake of Your name. Grant us to share in eternal life in You, the source and giver of life."

Having prayed with these words, they bowed their holy heads under the sword and were beheaded by the unfortunate Arians for their confession of the divinity of Christ as the true, uncreated Son of God, who existed before all ages and before the creation of the world, as we Christians believe to this day.

Some of the Christians took their holy relics and buried them outside the Melandissia Gate of Constantinople. Later Saint John Chrysostom built a church in their name over the place of their miracle-working relics. There, for many ages, the sick received divine healing from various incurable diseases by the prayers of the holy martyrs of God, who is praised in Trinity unto all ages.

By the prayers of Your holy martyrs St. Markianos and St. Martyrios, Lord Jesus Christ, have mercy on us!