The HAProxy load balancer backend BACKEND_ROUNDROBIN is configured to balance in roundrobin mode, with a health check on a dedicated check port (check port 33333).

For example, let's say the haproxy server is running with a haproxy_roundrobin.cfg like this one.

Under some circumstances, however, check port TCP 33333 is UP while the application that serves customers misbehaves on one or more of the backend members (app-server1, app-server2, app-server3, app-server4). The load balancer cannot know this, because its routing decision is based only on the echo port used for the health check.

One scenario where this can happen is when an application server has connectivity issues towards the database hosts (db-host1, db-host2, db-host3, db-host4).

If this happens, roughly 25% of the traffic (one of four roundrobin members) might still get balanced to the broken application server. If such a scenario happens during on-call duty and is identified as the problem, the workaround is to temporarily disable the misbehaving app server member among the 4 roundrobin members configured in haproxyproduction.cfg:

For example, if the app-server3 node is identified as failing and 25% of the traffic via the LB is lost, you will have to temporarily exclude it from the ring of roundrobin backend hosts until the broken application server node is fixed.

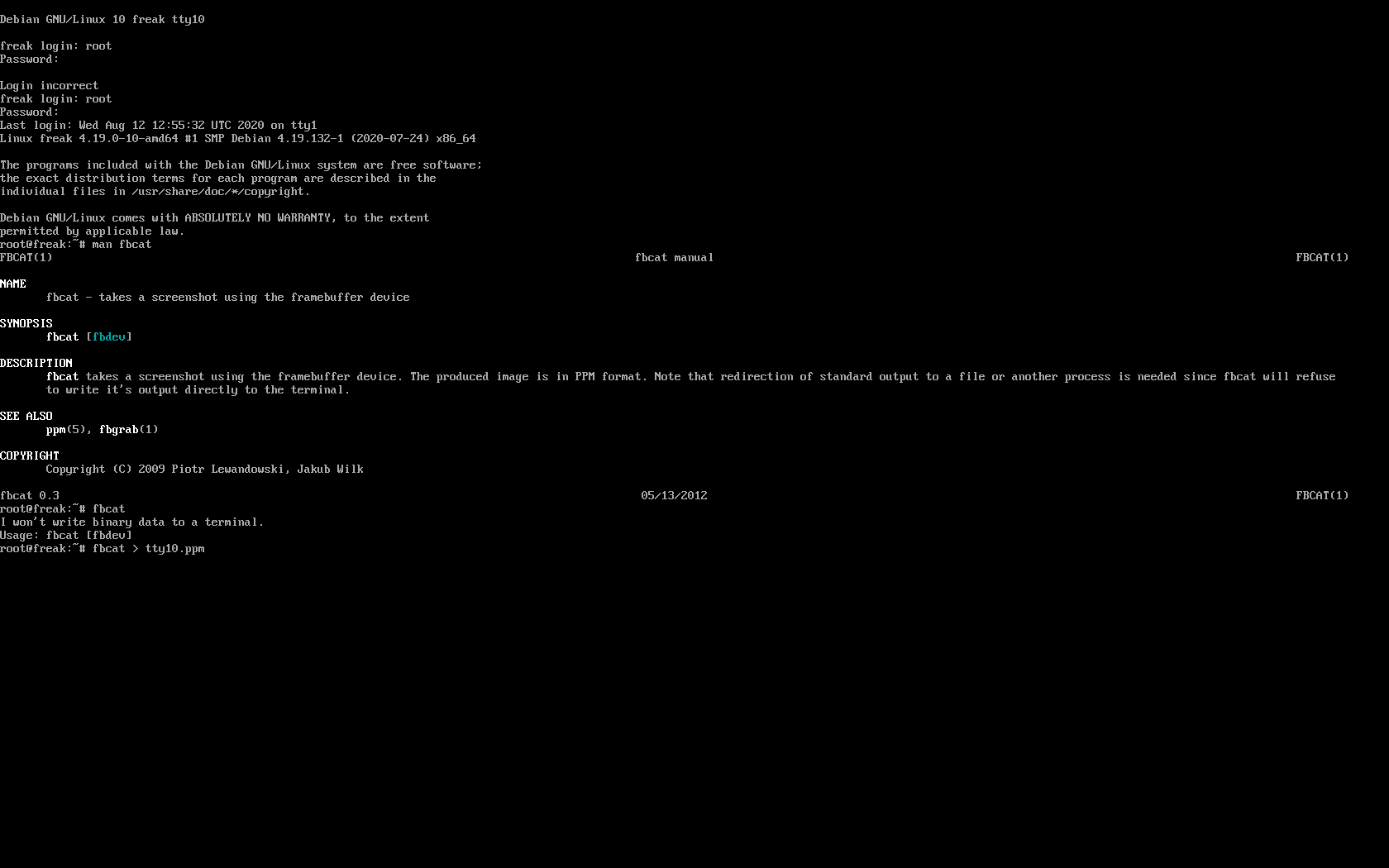

1. Check the status of haproxy backends

# echo "show stat" | socat stdio /var/lib/haproxy/stats

Once a server has been disabled (step 2 below), its status field in this output changes from UP to MAINT.
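The CSV printed by "show stat" is hard to read by eye. A minimal sketch of a helper that keeps only the proxy name, server name and status columns could look like the following. The function name haproxy_status_summary is hypothetical, and the sketch assumes the column order of the header line shown in this article (status is the 18th field).

```shell
# Summarise "show stat" CSV (read from stdin) as "proxy/server status" lines.
# Assumption: field 1 is pxname, field 2 is svname and field 18 is status,
# as in the "# pxname,svname,..." header line printed by haproxy.
haproxy_status_summary() {
    # Skip the header comment line and anything too short to hold a status.
    awk -F, '!/^#/ && NF >= 18 { printf "%s/%s %s\n", $1, $2, $18 }'
}
```

It can be fed from the same command as above, for example: echo "show stat" | socat stdio /var/lib/haproxy/stats | haproxy_status_summary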

Another way to do it, which does not cut existing sessions to the server immediately but lets them continue for some time, is to put the server you want to exclude from the haproxy roundrobin into "maintenance mode".

echo "set server bk_BACKEND_ROUNDROBIN/app-server3 state maint" | socat unix-connect:/var/lib/haproxy/stats stdio

There is an even gentler way to take a server out of the configured roundrobin set of hosts: the "drain" state. In this state haproxy stops selecting the server for new load-balanced connections, but it keeps running health checks against it and still lets clients with existing persistence reach it, so established sessions can finish while the app server is being taken out of rotation.

echo "set server bk_BACKEND_ROUNDROBIN/app-server3 state drain" | socat unix-connect:/var/lib/haproxy/stats stdio


This puts the server in DRAIN state: it receives no new load-balanced connections, while existing connections are allowed to finish.

To get a better idea of what the drain state is, here is an excerpt from the official haproxy documentation:

Force a server's administrative state to a new state. This can be useful to disable load balancing and/or any traffic to a server. Setting the state to "ready" puts the server in normal mode, and the command is the equivalent of the "enable server" command. Setting the state to "maint" disables any traffic to the server as well as any health checks. This is the equivalent of the "disable server" command. Setting the mode to "drain" only removes the server from load balancing but still allows it to be checked and to accept new persistent connections. Changes are propagated to tracking servers if any.
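Since only three administrative states exist, a small wrapper can guard against typos before anything is sent to the socket. The following is a sketch under stated assumptions: build_set_server_cmd is a hypothetical helper name, and it only prints the command text so that it can be inspected or piped to socat.

```shell
# Print a "set server" command for the haproxy admin socket.
# Only the three states named in the documentation excerpt are accepted.
build_set_server_cmd() {
    backend=$1
    server=$2
    state=$3
    case "$state" in
        ready|maint|drain)
            printf 'set server %s/%s state %s\n' "$backend" "$server" "$state"
            ;;
        *)
            # Refuse anything else instead of sending it to haproxy.
            echo "unknown state: $state (expected ready, maint or drain)" >&2
            return 1
            ;;
    esac
}
```

Usage, with the same backend and socket path as in the commands above: build_set_server_cmd bk_BACKEND_ROUNDROBIN app-server3 drain | socat unix-connect:/var/lib/haproxy/stats stdio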

2. Disable backend app-server3 from roundrobin

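# echo "disable server BACKEND_ROUNDROBIN/app-server3" | socat unix-connect:/var/lib/haproxy/stats stdio

The name before the slash must match the backend name exactly as it is written in the haproxy configuration. Running "show stat" again now lists app-server3 with status MAINT: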

# pxname,svname,qcur,qmax,scur,smax,slim,stot,bin,bout,dreq,dresp,ereq,econ,eresp,wretr,wredis,status,weight,act,bck,chkfail,chkdown,lastchg,downtime,qlimit,pid,iid,sid,throttle,lbtot,tracked,type,rate,rate_lim,rate_max,check_status,check_code,check_duration,hrsp_1xx,hrsp_2xx,hrsp_3xx,hrsp_4xx,hrsp_5xx,hrsp_other,hanafail,req_rate,req_rate_max,req_tot,cli_abrt,srv_abrt,comp_in,comp_out,comp_byp,comp_rsp,lastsess,last_chk,last_agt,qtime,ctime,rtime,ttime,

stats,FRONTEND,,,0,0,3000,0,0,0,0,0,0,,,,,OPEN,,,,,,,,,1,2,0,,,,0,0,0,0,,,,0,0,0,0,0,0,,0,0,0,,,0,0,0,0,,,,,,,,

stats,BACKEND,0,0,0,0,300,0,0,0,0,0,,0,0,0,0,UP,0,0,0,,0,282917,0,,1,2,0,,0,,1,0,,0,,,,0,0,0,0,0,0,,,,,0,0,0,0,0,0,-1,,,0,0,0,0,

Frontend_Name,FRONTEND,,,0,0,3000,0,0,0,0,0,0,,,,,OPEN,,,,,,,,,1,3,0,,,,0,0,0,0,,,,,,,,,,,0,0,0,,,0,0,0,0,,,,,,,,

Backend_Name,app-server4,0,0,0,0,,0,0,0,,0,,0,0,0,0,UP,1,1,0,1,0,282917,0,,1,4,1,,0,,2,0,,0,L4OK,,12,,,,,,,0,,,,0,0,,,,,-1,,,0,0,0,0,

Backend_Name,app-server3,0,0,0,0,,0,0,0,,0,,0,0,0,0,MAINT,1,0,1,1,2,2,23,,1,4,2,,0,,2,0,,0,L4OK,,11,,,,,,,0,,,,0,0,,,,,-1,,,0,0,0,0,

Backend_Name,BACKEND,0,0,0,0,300,0,0,0,0,0,,0,0,0,0,UP,1,1,0,,0,282917,0,,1,4,0,,0,,1,0,,0,,,,,,,,,,,,,,0,0,0,0,0,0,-1,,,0,0,0,0,

…

Once the Application support colleagues confirm that the machine is out of maintenance mode and working properly again, re-enable it:

3. Enable backend app-server3

# echo "enable server bk_BACKEND_ROUNDROBIN/app-server3" | socat unix-connect:/var/lib/haproxy/stats stdio

4. Check backend situation again

# echo "show stat" | socat stdio /var/lib/haproxy/stats

# pxname,svname,qcur,qmax,scur,smax,slim,stot,bin,bout,dreq,dresp,ereq,econ,eresp,wretr,wredis,status,weight,act,bck,chkfail,chkdown,lastchg,downtime,qlimit,pid,iid,sid,throttle,lbtot,tracked,type,rate,rate_lim,rate_max,check_status,check_code,check_duration,hrsp_1xx,hrsp_2xx,hrsp_3xx,hrsp_4xx,hrsp_5xx,hrsp_other,hanafail,req_rate,req_rate_max,req_tot,cli_abrt,srv_abrt,comp_in,comp_out,comp_byp,comp_rsp,lastsess,last_chk,last_agt,qtime,ctime,rtime,ttime,

stats,FRONTEND,,,0,0,3000,0,0,0,0,0,0,,,,,OPEN,,,,,,,,,1,2,0,,,,0,0,0,0,,,,0,0,0,0,0,0,,0,0,0,,,0,0,0,0,,,,,,,,

stats,BACKEND,0,0,0,0,300,0,0,0,0,0,,0,0,0,0,UP,0,0,0,,0,282955,0,,1,2,0,,0,,1,0,,0,,,,0,0,0,0,0,0,,,,,0,0,0,0,0,0,-1,,,0,0,0,0,

Frontend_Name,FRONTEND,,,0,0,3000,0,0,0,0,0,0,,,,,OPEN,,,,,,,,,1,3,0,,,,0,0,0,0,,,,,,,,,,,0,0,0,,,0,0,0,0,,,,,,,,

Backend_Name,app-server4,0,0,0,0,,0,0,0,,0,,0,0,0,0,UP,1,1,0,1,0,282955,0,,1,4,1,,0,,2,0,,0,L4OK,,12,,,,,,,0,,,,0,0,,,,,-1,,,0,0,0,0,

Backend_Name,app-server3,0,0,0,0,,0,0,0,,0,,0,0,0,0,UP,1,0,1,1,2,3,58,,1,4,2,,0,,2,0,,0,L4OK,,11,,,,,,,0,,,,0,0,,,,,-1,,,0,0,0,0,

Backend_Name,BACKEND,0,0,0,0,300,0,0,0,0,0,,0,0,0,0,UP,1,1,1,,0,282955,0,,1,4,0,,0,,1,0,,0,,,,,,,,,,,,,,0,0,0,0,0,0,-1,,,0,0,0,0,

…

You should see the backend enabled again.
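For use in a script, the same check can be turned into an exit code instead of reading the CSV by eye. This is a minimal sketch: server_is_up is a hypothetical name, and it assumes that status is the 18th CSV field, as in the header line above.

```shell
# Exit 0 if the given proxy/server pair reports status exactly "UP" in
# "show stat" CSV read from stdin; non-zero otherwise, including when the
# pair is not listed at all.
# Assumption: field 1 is pxname, field 2 is svname, field 18 is status.
server_is_up() {
    awk -F, -v px="$1" -v sv="$2" '
        $1 == px && $2 == sv { found = 1; up = ($18 == "UP") }
        END { exit ((found && up) ? 0 : 1) }
    '
}
```

For example: echo "show stat" | socat stdio /var/lib/haproxy/stats | server_is_up Backend_Name app-server3 && echo "app-server3 is back in rotation"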

NOTE:

If you get "permission denied" errors when you send haproxy commands via the configured stats socket, the socket has probably been enabled in read-only mode. In that case you can only read information from haproxy with status commands, but cannot send any write operations to haproxy via the unix socket.

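An example haproxy.cfg line that enables the haproxy socket in read-only mode looks like this (without a "level" keyword the socket gets the default "operator" level, which does not allow admin commands such as "disable server"):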

stats socket /var/lib/haproxy/stats

To make the haproxy socket read/write for the root superuser and for other users belonging to the admin group 'adm', set the stats socket line in haproxy.cfg to something like the following (and use the same socket path in the socat commands):

stats socket /var/lib/haproxy/stats-qa mode 0660 group adm level admin

or, if no users from a dedicated admin group need access to the socket, use a line like this instead:

stats socket /var/lib/haproxy/stats-qa.sock mode 0600 level admin
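Before sending commands it can help to confirm that the socket file exists and that the current user is allowed to write to it, which is what the mode and group settings above control. The sketch below uses a hypothetical helper name, socket_is_writable. It checks only the filesystem permissions, so it cannot tell whether the socket was configured with "level admin".

```shell
# Return 0 if the path is a UNIX socket that the current user may write to.
# This checks only file type and permissions, not the haproxy "level".
socket_is_writable() {
    [ -S "$1" ] && [ -w "$1" ]
}
```

For example: socket_is_writable /var/lib/haproxy/stats || echo "no write access to the haproxy socket" >&2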