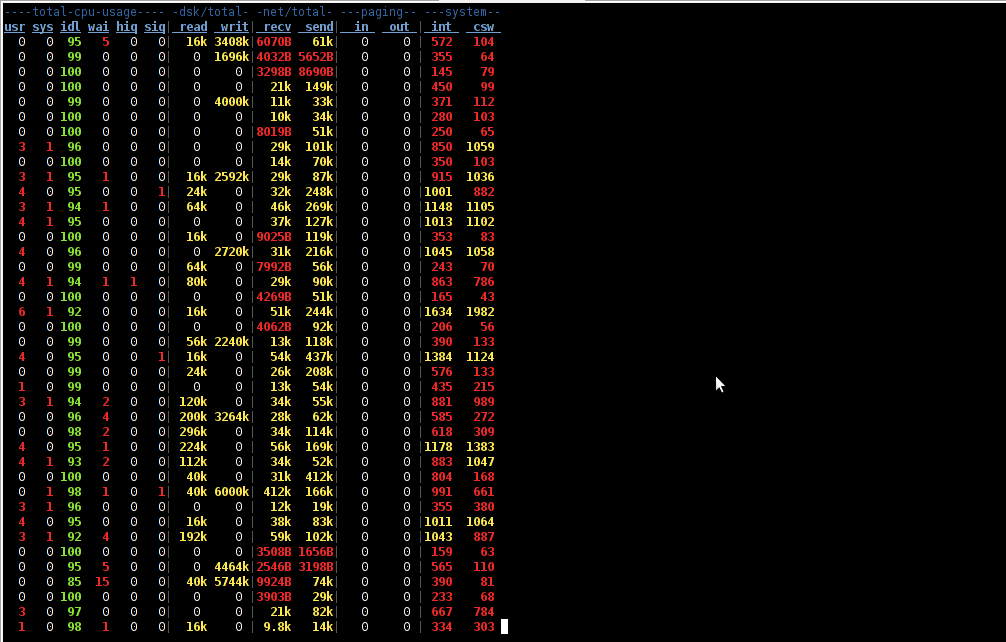

Have you ever looked for a universal tool to physically check any type of filesystem on your hard drive partitions?

I did, and I was more than happy to find out recently that the small UNIX program dd can check any filesystem that is readable by the Linux or *BSD kernel.

Here is an example: my laptop has a few partitions with the Linux ext3 filesystem and one NTFS partition.

My partitions look like this:

noah:/home/hipo# fdisk -l

Disk /dev/sda: 160.0 GB, 160041885696 bytes

255 heads, 63 sectors/track, 19457 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x2d92834c

Device Boot Start End Blocks Id System

/dev/sda1 1 721 5786624 27 Unknown

Partition 1 does not end on cylinder boundary.

/dev/sda2 * 721 9839 73237024 7 HPFS/NTFS

/dev/sda3 9839 19457 77263200 5 Extended

/dev/sda5 9839 12474 21167968+ 83 Linux

/dev/sda6 12474 16407 31593208+ 83 Linux

/dev/sda7 16407 16650 1950448+ 82 Linux swap / Solaris

/dev/sda8 16650 19457 22551448+ 83 Linux

For all those unfamiliar with dd: its manual page describes it as "convert and copy a file". This tiny program copies data from an input file (if) to an output file (of). Since the basic UNIX philosophy is that everything is a file, partitions themselves are also files.

The most common use of dd is to make an image copy of a partition, with any type of filesystem on it, and move it to another system.

From a Windows user's perspective, dd is the command-line equivalent of Norton Ghost for Linux and BSD systems.

The classic way to use dd to copy, let’s say, my /dev/sda1 partition to a partition on another hard drive, /dev/hdc1, is with the command:

noah:/home/hipo# dd if=/dev/sda1 of=/dev/hdc1 bs=16065b
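Since partitions are just files to dd, the same if/of mechanics can be tried safely on ordinary scratch files before touching a real disk. Below is a minimal sketch of that idea. The helper name copy_and_verify and the scratch files created with mktemp are my own assumptions for illustration; they are not part of dd.

```shell
#!/bin/sh
# copy_and_verify is a hypothetical helper name, not part of dd.
# It copies SRC to DST with dd and then compares the two byte for byte.
copy_and_verify() {
    src=$1
    dst=$2
    # Same if=/of= form as the partition copy; dd's statistics go to stderr
    # and are discarded here.
    dd if="$src" of="$dst" bs=1M 2>/dev/null || return 1
    # cmp -s is silent and exits 0 only when both files are identical.
    cmp -s "$src" "$dst"
}

# Try it on scratch files instead of real partitions.
scratch_src=$(mktemp)
scratch_dst=$(mktemp)
dd if=/dev/zero of="$scratch_src" bs=1M count=4 2>/dev/null  # 4 MiB of zeros
if copy_and_verify "$scratch_src" "$scratch_dst"; then
    echo "copy verified"
else
    echo "copy differs or failed"
fi
rm -f "$scratch_src" "$scratch_dst"
```

On real partitions the destination has to be at least as large as the source, and everything on it is overwritten, so double check if= and of= before pressing Enter.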

Even though the basic use of dd is to copy files, its flexibility allows a “trick” through which dd can be used to check any partition readable by the operating system kernel for bad blocks.

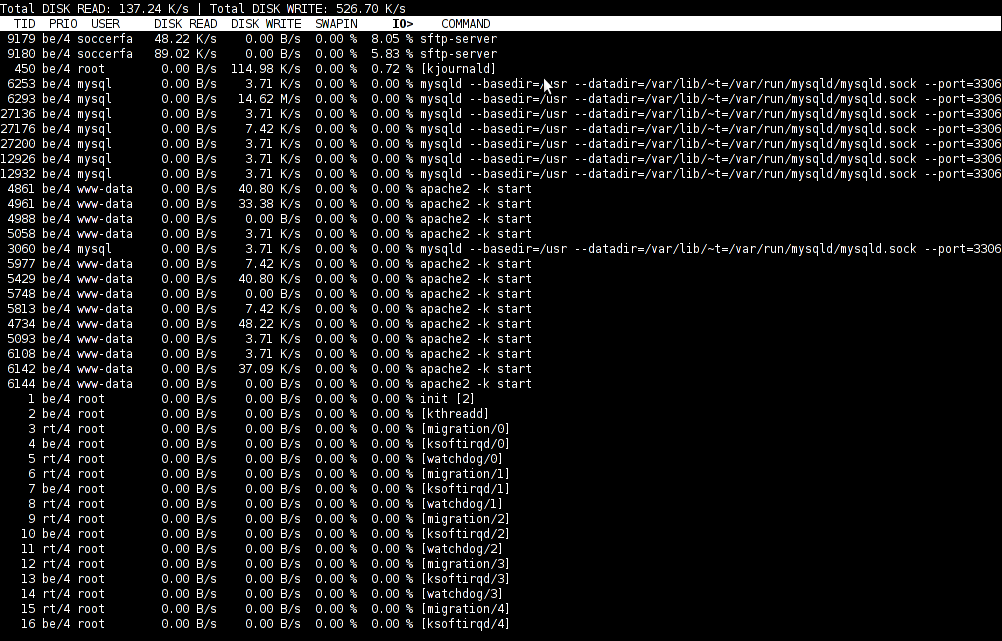

In order to check any of the partitions listed, let’s say the HPFS/NTFS one on /dev/sda2, run dd like so:

noah:/home/hipo# dd if=/dev/sda2 of=/dev/null bs=1M

As you can see, the of (output file) for dd is set to /dev/null, so that dd discards everything it reads from the /dev/sda2 partition instead of writing it anywhere. bs=1M instructs dd to read from /dev/sda2 in chunks of 1 Megabyte, which speeds up the check of the whole partition.

Decreasing bs below 1M will make the check take more time, but it will narrow down more precisely where on the partition a read failure occurred.

Anyhow, in most cases a bs of 1 Megabyte is a good value.
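One way to get the precision of a small block size without paying for it on the whole partition is to re-read only a suspicious region in smaller pieces. The sketch below re-reads a single 1 Megabyte record in 4096-byte blocks using dd's skip= and count= operands. The helper name rescan_record and the 4096-byte size are my own assumptions, so treat it as an illustration rather than a recipe.

```shell
#!/bin/sh
# rescan_record is a hypothetical helper name, not part of dd.
# It re-reads one 1 MiB record of TARGET in 4096-byte blocks.
rescan_record() {
    target=$1
    record=$2                               # index of the 1 MiB record, starting at 0
    small_bs=4096
    per_record=$((1048576 / small_bs))      # 256 small blocks per 1 MiB record
    # skip= and count= are both counted in units of bs, so the record index
    # has to be converted into small blocks first.
    dd if="$target" of=/dev/null bs="$small_bs" \
        skip=$((record * per_record)) count="$per_record" 2>&1
}

# Demonstration on a 2 MiB scratch file rather than a real partition.
scratch=$(mktemp)
dd if=/dev/zero of="$scratch" bs=1M count=2 2>/dev/null
rescan_record "$scratch" 1
rm -f "$scratch"
```

On a healthy region the statistics should show 256 full records in. If dd stops early with an error, the number of records it did manage to read hints at which 4096-byte block inside that megabyte is the problem.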

After some minutes (depending on the partition size), dd finishes and prints statistics on how the operation went.

Hence, if some of the blocks on the partition failed to be read by dd, this will be shown in its final output when the operation completes.
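Besides reading the statistics by eye, the exit status of dd can be checked: dd exits with a non-zero status when it hits an error, and without extra options it stops at the first block it cannot read. Here is a small sketch of a wrapper built on that. The name check_readable is hypothetical, and the demonstration runs on a scratch file, not on a real partition.

```shell
#!/bin/sh
# check_readable is a hypothetical helper name, not part of dd.
# It reads TARGET from start to end, discards the data, and reports
# whether dd finished without an error.
check_readable() {
    target=$1
    # dd prints its statistics (and any error message) on stderr; capture them.
    stats=$(dd if="$target" of=/dev/null bs=1M 2>&1)
    rc=$?
    echo "$stats"
    if [ "$rc" -eq 0 ]; then
        echo "OK: $target was read completely"
    else
        echo "FAILED: dd reported an error while reading $target"
    fi
    return "$rc"
}

# Demonstration on a 3 MiB scratch file.
scratch=$(mktemp)
dd if=/dev/zero of="$scratch" bs=1M count=3 2>/dev/null
check_readable "$scratch"
rm -f "$scratch"
```

For a real check the function would be called as root with the partition as its argument, for example check_readable /dev/sda2.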

The drive I’m checking does not have any bad blocks, and the dd statistics for my checked partition do not show any bad block problems:

71520+1 records in

71520+1 records out

74994712576 bytes (75 GB) copied, 1964.75 s, 38.2 MB/s

The statistics are quite self-explanatory: my partition of size 75 GB was scanned in 1964.75 seconds, roughly 32 minutes 45 seconds. The numbers of records read and written are both 71520+1 (records in / records out), i.e. 71520 full records of 1 Megabyte plus one partial record. This means that all the records were properly read and written to /dev/null, and therefore there are no BAD blocks on my NTFS partition 😉
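The numbers in the statistics can be cross-checked with a bit of shell arithmetic. The sketch below recomputes the record count from the byte count and the block size, and compares the byte count with the Blocks column fdisk printed for /dev/sda2, assuming that column is in units of 1024 bytes. The helper names records_for and to_min_sec are hypothetical.

```shell
#!/bin/sh
# records_for is a hypothetical helper: given a size in bytes and a block
# size in bytes, print the record count in dd's "full+partial" notation.
records_for() {
    bytes=$1
    bs=$2
    full=$((bytes / bs))
    # A leftover shorter than one block is read as a single partial record.
    if [ $((bytes % bs)) -gt 0 ]; then partial=1; else partial=0; fi
    echo "$full+$partial"
}

# to_min_sec is a hypothetical helper: whole seconds to minutes and seconds.
to_min_sec() {
    echo "$(( $1 / 60 ))m $(( $1 % 60 ))s"
}

records_for 74994712576 1048576   # prints 71520+1, matching dd's output
echo $((73237024 * 1024))         # prints 74994712576, the fdisk Blocks value in bytes
to_min_sec 1964                   # prints 32m 44s (the fractional .75 s is dropped)
```

The byte count reported by dd equals the partition size reported by fdisk, which is another sign that dd really read the partition from the first block to the last.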