Posts Tagged ‘solution’

Friday, September 23rd, 2011

While recently looking for the best ways to optimize my WordPress blog, as well as the other WordPress-based websites I manage, I came across a great plugin called W3 Total Cache, or W3TC as it’s widely known among WordPress geeks.

W3TC, a complete caching solution for WordPress, is already deployed on many major WordPress-powered websites, to name a few:

stevesouders.com, mattcutts.com, mashable.com

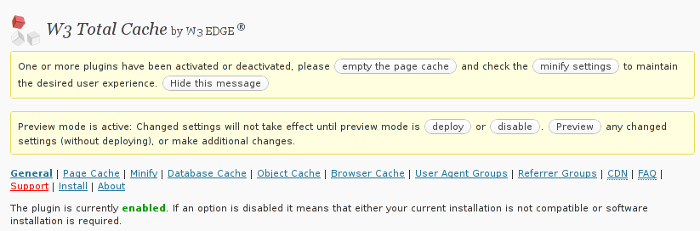

I gave W3 Total Cache a try and was amazed by the rich caching functionality it provides. Installing the W3TC plugin adds a whole new menu, labeled Performance, to the left-hand WordPress admin panel; clicking it opens a menu with detailed options covering numerous aspects of how WordPress runs on the server.

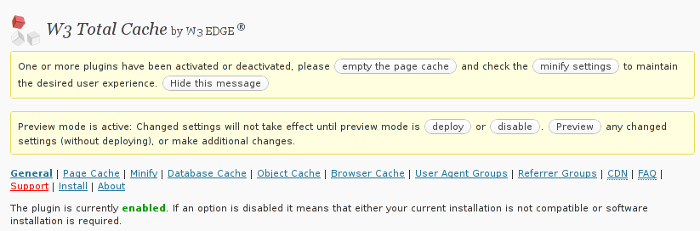

Here is a screenshot on the menus W3 Total Cache provides:

The plugin supports HTML page caching, SQL (database) caching, CDN integration, CSS and JavaScript compression and minification, etc.

To test the plugin adequately I disabled HyperCache and Db Cache. My observation is that with W3TC, WordPress renders PHP pages faster, and the overall user experience and download times are better. Therefore I’ll probably use only W3 Total Cache as the cacher for my WordPress installs.

Besides that, I had some issues with Db Cache’s SQL caching on some WordPress-based websites.

On these websites, after enabling Db Cache, editing existing pages suddenly started returning empty pages. This could be because these WordPress-based websites were custom-tailored and a lot of code had been removed, but it could also be a Db Cache bug. So, to conclude, W3TC is the perfect solution for WordPress caching 😉

Tags: amp, blog, cacher, CDN, choices, com, comI, download, experience, face, geeks, Javascript, left, mashable, menus, minify, page, panel, perfect solution, performance, php, plugin, question, reading, reading performance, running, screenshot, solution, use, user experience, Wordpress

Posted in SEO, Various, Web and CMS, Wordpress | 2 Comments »

Friday, October 28th, 2011

Today, one of the qmail servers I manage started returning strange errors in SquirrelMail webmail and for Thunderbird users connecting via POP3/IMAP.

What was rather strange is that if the email didn’t contain a link to a web page or an attachment, i.e. the mail consisted of just plain text, it was sent properly; otherwise, sending failed with the error message:

Requested action aborted: error in processing Server replied: 451 qq temporary problem (#4.3.0)

After looking through the logs and a quick Google search, I came across some online threads reporting that the issue is caused by a malfunction of qmail-scanner.pl (the script that checks mail for viruses).

On closer examination I found out that /usr/sbin/clamd was not running at all?!

Then I remembered that a bit earlier I had applied some updates on the server with apt-get update && apt-get upgrade, and among the updated packages were exactly clamav-daemon and clamav-freshclam.

Hence, the reason for the error:

451 qq temporary problem (#4.3.0)

was pretty obvious: qmail-scanner.pl, which uses the clamd daemon to check incoming and outgoing mail for viruses, got no response from it, so any mail with content that needed to go through clamd for a check never made it back to qmail-scanner.pl, and qmail returned the weird error message.

Apparently, for some reason, the init script /etc/init.d/clamav-daemon failed to restart clamd properly during the earlier clamav-daemon update.

The fix was very simple; all I had to do was start clamav-daemon again:

linux:~# /etc/init.d/clamav-daemon restart

Afterwards the error was gone and all mail went through just fine 😉
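Since an upgrade silently left clamd stopped, a small check run periodically (for example from cron) could catch this earlier. This is a minimal sketch: ensure_running is a hypothetical helper, it assumes pgrep from procps is available and that the daemon's process name is clamd, and it reuses the Debian init script from the fix above.

```shell
#!/bin/bash
# Minimal sketch: restart a daemon if no process with the given name is running.
# ensure_running is a hypothetical helper, not part of qmail or ClamAV.
# Usage: ensure_running <process-name> <restart-command...>
ensure_running() {
  local name="$1"
  shift
  # pgrep -x matches the process name exactly; a non-zero exit means no match
  if ! pgrep -x "$name" >/dev/null 2>&1; then
    echo "$name is not running, restarting it" >&2
    "$@"
  fi
}

# Example for the clamd case above (Debian init script path):
#   ensure_running clamd /etc/init.d/clamav-daemon restart
```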

Tags: action, amp, cause and solution, checking, Clamav, clamd, daemon, email, examination, fine, fix, g mail, google, imap connections, init script, link, logs, mail, online, outgoing mail, pl script, processing, Qmail, qq, reason, Requested, scanner, Search, servers, solution, Squirrel, strange errors, Thunderbird, today, update, usr, Viruses, weird error message

Posted in Linux, Qmail, System Administration | No Comments »

Thursday, September 10th, 2009

While playing with the installed programs on my recently updated Debian, I ran into a problem with /usr/lib32/alsa-lib/libasound_module_pcm_pulse.so. The library was linked against two non-existent libraries: /emul/ia32-linux/lib/libwrap.so.0 and /emul/ia32-linux/usr/lib/libgdbm.so.3. A temporary solution to the issue is described in one of the Debian bug reports. As the report says, to solve it you need to:

1. Download libwrap0_7.6.q-18_i386.deb and libgdbm3_1.8.3-6+b1_i386.deb.

2. Extract the packages:

dpkg -X libwrap0_7.6.q-18_i386.deb /emul/ia32-linux/

dpkg -X libgdbm3_1.8.3-6+b1_i386.deb /emul/ia32-linux/

3. echo /emul/ia32-linux/lib >> /etc/ld.so.conf.d/ia32.conf

4. Execute /sbin/ldconfig

5. Check that everything is properly linked:

ldd /usr/lib32/alsa-lib/libasound_module_pcm_pulse.so | grep -i "not found"

If the command prints nothing, hopefully everything is fixed now.
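Step 5 checks a single library. To check every ALSA plugin in /usr/lib32/alsa-lib/ at once, the same ldd test can be wrapped in a loop. This is a minimal sketch: missing_libs and check_dir are hypothetical helper names, and the parsing assumes ldd’s usual "libname => not found" line format.

```shell
#!/bin/bash
# Hypothetical helper: read ldd output on stdin and print only the names of
# libraries the dynamic linker could not resolve ("=> not found" lines).
missing_libs() {
  awk '/=> not found/ { print $1 }'
}

# Hypothetical helper: run ldd on every .so file in a directory and report
# any unresolved dependencies.
check_dir() {
  local so missing
  for so in "$1"/*.so; do
    [ -e "$so" ] || continue   # glob matched nothing
    missing=$(ldd "$so" 2>/dev/null | missing_libs)
    if [ -n "$missing" ]; then
      echo "$so is missing:"
      printf '  %s\n' $missing   # word splitting intended: one name per line
    fi
  done
}

# Usage: check_dir /usr/lib32/alsa-lib
```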

Tags: b1, bug reports, conf, deb, Debian, debian bug, download, dpkg, emul, grep, issue, ld, ldquo, lib, libasound, libgdbm, libraries, linkedExecute, Linux, Module, pulse, report, rsquo, sbin, sid, solution, squeeze, temporary solution, Unstable, usr

Posted in Linux and FreeBSD Desktop, Linux Audio & Video, Skype on Linux, System Administration | No Comments »

Wednesday, July 13th, 2011

One server with a broken RAID array was having trouble with its software RAID.

I tried to assemble the RAID array from a rescue CD like so:

server:~# mdadm --assemble --scan /dev/md1

only to be surprised by the message:

mdadm: /dev/md1 assembled from 2 drives - not enough to start the array.

In /proc/mdstat the array was shown as inactive:

server:~# cat /proc/mdstat

Personalities : [raid10] [raid1]

md1 : inactive sda2[0] sdc2[2] sdb2[1]

12024384 blocks

Trying to activate the Linux software RAID array with:

server:~# mdadm -A -s

failed with the same annoying error:

/dev/md1 assembled from 2 drives - not enough to start the array.

Thankfully, I found a post by a Russian user with the same issue who reported being able to activate his software RAID with mdadm’s --force option.

Thus I was able to activate the problematic RAID 5 array with:

server:~# mdadm -A -s --force

This solution is, of course, temporary, and I will have to check further what’s wrong with the array; at least now I can chroot into the server’s / directory. 😉
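Before reaching for --force, it can help to see exactly which arrays the kernel reports as inactive, and to inspect each member with mdadm --examine, since --force tells mdadm to assemble the array even when the metadata on some devices looks out of date. A minimal sketch of the first check: inactive_arrays is a hypothetical name, and the parsing assumes the "mdX : inactive ..." line format shown in the /proc/mdstat output above.

```shell
#!/bin/bash
# Hypothetical helper: print the names of md arrays that /proc/mdstat
# (or another file given as $1) reports as inactive.
inactive_arrays() {
  awk '$2 == ":" && $3 == "inactive" { print $1 }' "${1:-/proc/mdstat}"
}

# Usage:
#   inactive_arrays              # on the server above this prints md1
#   mdadm --examine /dev/sda2    # inspect each member before using --force
```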

Tags: annoying error, cat, check, course, dev, drive, ERROR, force option, Linux, md1, mdstat, option, Personalities, proc, Raid, raid 5 array, raid array, rescue, rescue cd, sCould, sda, sdb, sdc, software, software linux, software raid, solution, Thanksfully, way

Posted in Linux, System Administration | 1 Comment »

Saturday, July 9th, 2011

I’m currently writing a script that is supposed to add new crontab jobs and do a bunch of other mumbo jumbo.

So far I had been aware of only one way to add a cron job non-interactively:

linux:~# echo '*/5 * * * * /root/myscript.sh' | crontab -

Though piping to crontab - works, it has one major pitfall that I had completely forgotten: | crontab - OVERWRITES THE CURRENT CRONTAB with whatever the echo command passes in!

Be extremely careful if you decide to use the above example, as you might lose your crontab definitions permanently!

Thankfully, there is another way to add cron jobs non-interactively from a script. As I couldn’t find any good blog post explaining anything other than the classic pipe-to-crontab - example, I dropped by the good old irc.freenode.net to consult the bash gurus there 😉

So I joined IRC and asked how to add a crontab entry from a bash shell script without overwriting my existing crontab definitions. Less than a minute later a guy with the nickname geirha was kind enough to explain how to get around the annoying overwriting.

The solution to the overwrite was as expected: first you use crontab to dump the current crontab lines to a file, then you append the new cron job as a new record in that file, and finally you have the crontab program read and install the crontab definitions from the new file.

So here is the exact code one could run inside a script to add new cron jobs next to the already present ones:

linux:~# crontab -l > file; echo '*/5 * * * * /root/myscript.sh >/dev/null 2>&1' >> file; crontab file

As you can see, this adds the new record */5 * * * * /root/myscript.sh >/dev/null 2>&1 next to the existing scheduled cron jobs.
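If a script like this runs more than once, each run appends the same line again. A minimal sketch that appends the job only when an identical line is not already present, then installs the result through a crontab - pipe; merge_cron_line is a hypothetical helper name. One caveat: if crontab -l fails for any reason other than there being no crontab yet, its output is empty and the pipe would install only the new line, so keep a backup before running it on important crontabs.

```shell
#!/bin/bash
# Hypothetical helper: read a crontab on stdin, print it unchanged, and append
# the job given as $1 only if an identical line is not already present.
merge_cron_line() {
  local job="$1" existing
  existing=$(cat)
  # Re-emit the existing entries (command substitution strips trailing newlines)
  if [ -n "$existing" ]; then
    printf '%s\n' "$existing"
  fi
  # -x: whole-line match, -F: fixed string, so '*' and '/' are taken literally
  if ! printf '%s\n' "$existing" | grep -qxF -- "$job"; then
    printf '%s\n' "$job"
  fi
}

# Usage (keep a backup first, e.g. crontab -l > crontab.bak):
#   job='*/5 * * * * /root/myscript.sh >/dev/null 2>&1'
#   crontab -l 2>/dev/null | merge_cron_line "$job" | crontab -
```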

Now I’ll continue with my scripting; in the meantime I hope this will be of use to someone out there 😉

Tags: bash scripts, bash shell script, blog, classical example, cron, cron job, cron jobs, crontab, definitions, dev, echo, echo 5, echo command, exact code, file, fileThe, gurus, irc, jambo, kind, line, Linux, mambo jambo, mean time, minute, nbsp nbsp nbsp nbsp nbsp, nickname, ovewrite, pipe, pitfall, root, scripting, Shell, solution, someone, Thanksfully, time, use, way

Posted in Linux, System Administration | 9 Comments »

Wednesday, April 27th, 2011

I just completed a fresh Ubuntu 10.10 Maverick Meerkat install.

After the installation I used a small script to install a bunch of packages I had used on the same notebook before reinstalling Ubuntu.

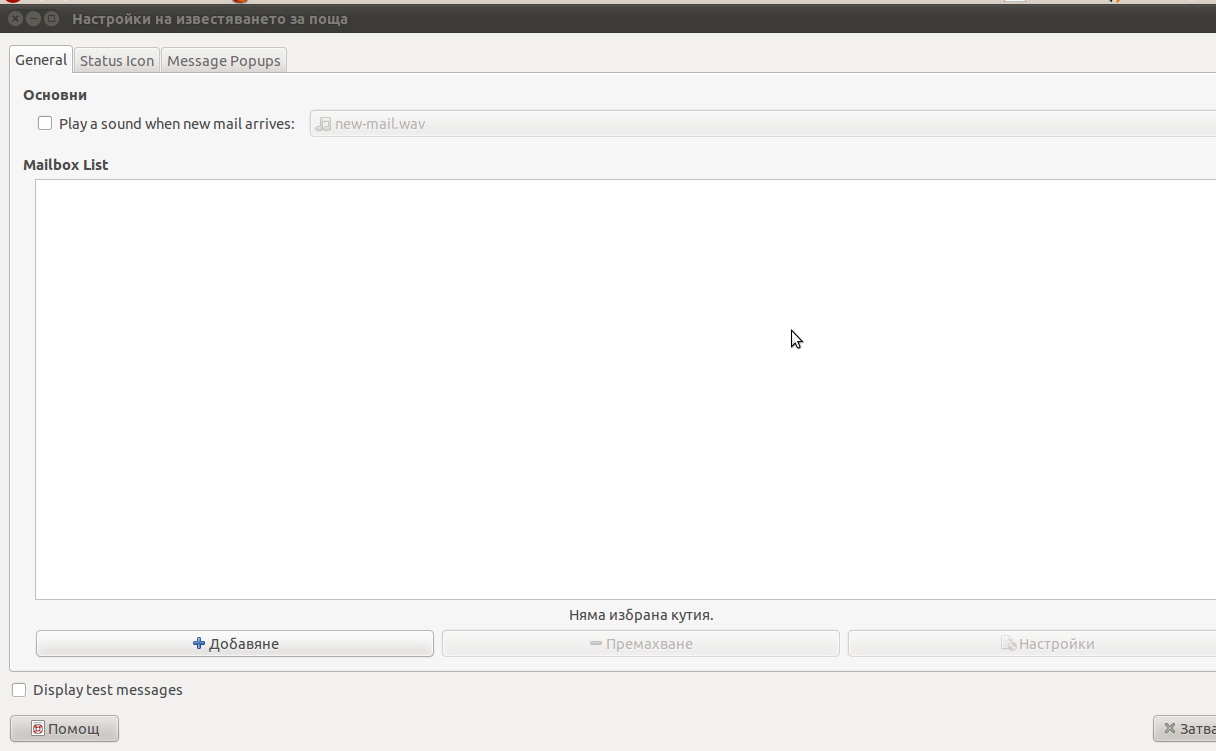

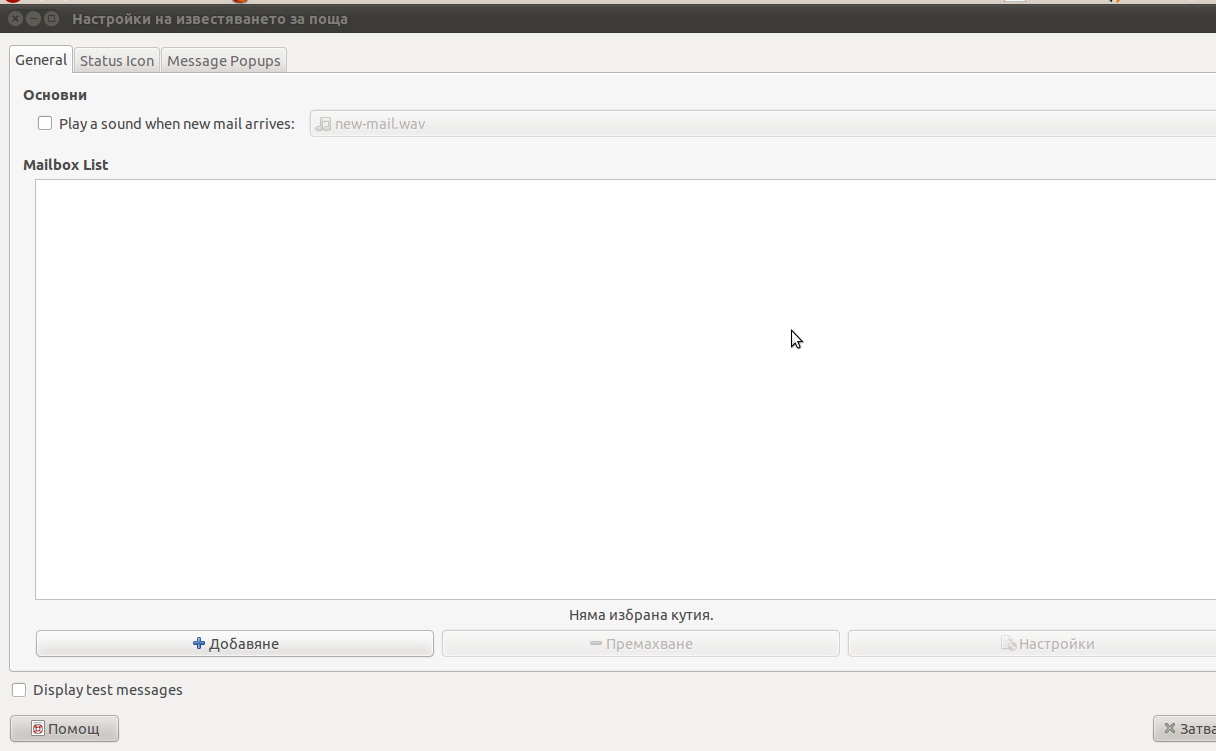

Now, after installing these packages on the fresh Ubuntu, every time I log in with any GNOME user account, a mail notification settings window starts up automatically.

Closing the mail settings window on every GNOME login is not a pleasant experience, so I took a few seconds to find out what launches the New Mail pop-up window.

Here is what the annoying window looks like every time I log in to my Ubuntu:

Some of the text in the screenshot is in Bulgarian, as the default locale for this Ubuntu install is set to Bulgarian, but I hope this doesn’t matter, as other people who get the same popup can still recognize the window.

To find out which process spawned the mail notification popup, I issued:

root@ubuntu:~# ps ax |grep -i mail

2651 pts/1 Sl+ 0:01 mail-notification --sm-disable

Next I checked where the mail-notification command is located:

root@ubuntu:~# which mail-notification

/usr/bin/mail-notification

To be absolutely sure that mail-notification spawns the mail settings window, I killed it with pkill -9 mail-notification

As the window immediately disappeared, I was now certain that mail-notification was spawning the unwanted pop-up window that appeared right after I logged in.

I used dpkg -S to check which package the mail-notification program belongs to, as I figured the way to get rid of this annoying popup would be to remove the whole package. Here is what I did:

root@ubuntu:~# dpkg -S /usr/bin/mail-notification

mail-notification: /usr/bin/mail-notification

root@ubuntu:~#
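The which and dpkg -S steps above can be combined into one small function. A minimal sketch: package_of is a hypothetical name, it uses the shell built-in command -v in place of which, and it assumes the "package: /path" output format of dpkg -S shown above.

```shell
#!/bin/bash
# Hypothetical helper: print the Debian package that owns a command in $PATH.
package_of() {
  local path
  # command -v is the shell built-in equivalent of `which`
  if ! path=$(command -v "$1"); then
    echo "package_of: $1 not found in PATH" >&2
    return 1
  fi
  # dpkg -S prints "package: /path"; keep only the part before the colon
  dpkg -S "$path" | cut -d: -f1
}

# Usage: package_of mail-notification
```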

Now knowing the package I simply wiped it off:

root@ubuntu:~# apt-get remove --yes mail-notification

...

root@ubuntu:~# dpkg --purge mail-notification

...

After that, I guarantee you won’t see the irritating new mail settings pop-up window again.

Farewell mail-notification annoyance, hope to never see you again!!! 🙂

Tags: annoyance, annoying popup, annoying window, command, dpkg, experience, Farewell, Gnome, gnome user, grep, installation, locale, location, login, mail notification program, mail pop, mail settings, maverick, new mail, notebook, notification, notification settings, ome, package, pkill, pleasent experience, pop up window, popup, purge, root, screenshot, settings window, solution, Ubuntu, usr, usr bin

Posted in Linux, Linux and FreeBSD Desktop | No Comments »

Monday, April 11th, 2011

If you’re experiencing problems maximising Flash videos (say, on YouTube) on Debian, Ubuntu or any other Debian derivative, you’re not the only one! I have often experienced the same annoying issue myself.

The Flash fullscreen failures and slowness are caused by the Flash player’s attempts to use your machine’s hardware directly. As the Linux kernel is rather different from Windows, and the guys at Adobe (formerly Macromedia) always ship a much buggier Unix port of Flash than the Windows version, it’s quite normal that the Flash player is unable to properly address the computer hardware on Linux.

As I’m not a programmer and can’t explain the exact cause of the fullscreen Flash player mishaps, I’ll skip that and give you the two-command solution right away:

debian:~# mkdir /etc/adobe

debian:~# echo "OverrideGPUValidation = 1" >> /etc/adobe/mms.cfg

This should fix it for you. Now just restart Iceweasel (Firefox), Epiphany, Opera or whatever browser you use, and launch some random YouTube video to test the solution; hopefully it should be okay 😉 But you never know with Flash. Let’s just hope that very soon the open Flash alternative Gnash will be production-ready and at last we free software users will be freed from the evil “slavery” of Adobe’s non-free Flash player!

Though this tip was tested on Debian-based Linux distributions, it should most likely work the same on other Linux distributions.

The tip should probably also work on FreeBSD, though there the adobe directory containing mms.cfg should probably be /usr/local/etc/adobe. I’d be glad to hear from a FreeBSD user whether adding the OverrideGPUValidation = 1 Flash option to mms.cfg like this:

# mkdir /usr/local/etc/adobe

# echo "OverrideGPUValidation = 1" >> /usr/local/etc/adobe/mms.cfg

has any impact on Flash player fullscreen issues on FreeBSD and other BSD-derived OSes that run the linux-flash port.
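The echo ... >> mms.cfg commands above append a new line every time they are run, and mkdir fails if the directory already exists. A minimal sketch of an idempotent variant that works for both the Linux and FreeBSD paths; ensure_cfg_line is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: make sure a line exists in a config file such as
# mms.cfg, creating the directory and file if needed. Running it again
# does not append a duplicate line.
ensure_cfg_line() {
  local cfg="$1" line="$2"
  mkdir -p "$(dirname "$cfg")" || return 1
  touch "$cfg" || return 1
  # -x: match the whole line, -F: treat it as a fixed string
  grep -qxF -- "$line" "$cfg" || printf '%s\n' "$line" >> "$cfg"
}

# Linux:   ensure_cfg_line /etc/adobe/mms.cfg 'OverrideGPUValidation = 1'
# FreeBSD: ensure_cfg_line /usr/local/etc/adobe/mms.cfg 'OverrideGPUValidation = 1'
```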

Tags: Adobe, adobe flash, adobedebian, browser, BSD, buggy, cause, cfg, command, Computer, computer hardware, derivative, Flash, flash fullscreen, flash issues, flash option, free flash player, free software users, freebsd user, fullscreen flash, gnash, hardware, Icedove, impact, issue, kernel, linux distributions, linux flash, linuxes, location, machine hardware, macromedia, mms, option, oses, player, port, production, programmer, Resolving, right, slavery, software, solution, sudo, tip, Ubuntu, video, way, windows versions, work, youtube, youtube videos

Posted in Linux, Linux and FreeBSD Desktop, Linux Audio & Video | No Comments »

Wednesday, February 17th, 2010

Configuring some VirtualHosts on a Debian server I administer led me to a really shitty problem. No matter which of the configured VirtualHosts on the server I tried to access, the default one (the first one listed among the VirtualHosts) got served. Believe me, such Apache behaviour is a real pain in the ass! I went through the VirtualHosts configurations many times without finding any fault in them; everything seemed perfectly fine there. I started suspecting that something was preventing the webserver from serving the VirtualHosts. Therefore, to check whether the VirtualHosts configurations were properly loaded, I used the following command:

debian-server:~# /usr/sbin/apache2ctl -S

Guess what: all was perfectly fine there as well. The command listed my webserver’s configured VirtualHosts as enabled (linked) in /etc/apache2/sites-enabled. I took some time to ask in the #debian channel on irc.freenode.net whether somebody had encountered the same weirdness, but unfortunately nobody there could help. I thought the problem over and over and started experimenting with various configuration settings until I found the cause.

The issue with non-working VirtualHosts in Debian lenny was caused by:

wrong NameVirtualHost *:80 directive

It’s really odd, because enabling the directive as NameVirtualHost *:80 produces a warning as if there were more than one NameVirtualHost directive in the configuration, while removing it completely produces no warnings during Apache start/restart, yet the VirtualHosts still don’t work.
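To see where the duplicate NameVirtualHost directives behind that warning come from, it helps to grep the main configuration files. A minimal sketch: list_namevirtualhost is a hypothetical name, and the default paths assume Debian’s /etc/apache2 layout (apache2.conf, ports.conf, sites-enabled/). It uses grep -R because sites-enabled/ normally contains symlinks, which GNU grep -r does not follow while recursing. Its output can be compared with apache2ctl -S.

```shell
#!/bin/bash
# Hypothetical helper: list every NameVirtualHost directive, with file name
# and line number, in the given files/directories (Debian defaults if none).
list_namevirtualhost() {
  [ "$#" -gt 0 ] || set -- /etc/apache2/apache2.conf /etc/apache2/ports.conf /etc/apache2/sites-enabled
  # -R follows symlinks, -H always prints the file name, -n the line number
  grep -RHnE '^[[:space:]]*NameVirtualHost([[:space:]]|$)' "$@" 2>/dev/null
}

# Usage: list_namevirtualhost
```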

So to fix the whole VirtualHosts mess-up, I had to modify my /etc/apache2/sites-enabled/000-default as follows:

NameVirtualHost *:80

changes to

NameVirtualHost *

The rest of the VirtualHost configuration stays the same.

This simple change eradicated the f*cking issue that had tortured me for almost 3 hours! ghhh

Tags: Debian, default, lenny, problem, solution, VirtualHosts

Posted in System Administration | 4 Comments »

Thursday, February 18th, 2010

I’m building a new Subversion repository. After installing it and configuring it to be accessed via https://, I stumbled upon the error:

Could not open the requested SVN filesystem

when accessing one of the SVN repositories. The problem was caused by incorrect permissions on the repository: some of its files were owned by the system user that had imported them.

Simply changing the permissions to make them readable by Apache fixed the issue.
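With mod_dav_svn, Apache itself reads the repository files (and writes them on every commit), so the files must be accessible to the Apache user. A minimal sketch for spotting files with the wrong owner: files_not_owned_by is a hypothetical name, www-data is the Apache user on Debian, and /var/svn/myrepo is a hypothetical repository path.

```shell
#!/bin/bash
# Hypothetical helper: list files under a repository that are NOT owned by
# the given user (name or numeric UID), e.g. the Apache user.
files_not_owned_by() {
  local user="$1" repo="$2"
  find "$repo" ! -user "$user" -print
}

# Example, assuming Debian's Apache user www-data and a hypothetical
# repository path /var/svn/myrepo:
#   files_not_owned_by www-data /var/svn/myrepo
#   chown -R www-data:www-data /var/svn/myrepo
```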

Tags: cause, could, filesystem, Requested, solution

Posted in System Administration | No Comments »