1. Use the good old times rinetd – internet “redirection server” service

Perhaps, many people who are younger wouldn't remember rinetd's use was pretty common on old Linuxes in the age where iptables was not on the scene and its predecessor ipchains was so common.

In the raise of mass internet rinetd started loosing its popularity because the service was exposed to the outer world and due to security holes and many exploits circulating the script kiddie communities

many servers get hacked "pwned" in the jargon of the script kiddies.

rinetd is still available even in modern Linuxes and over the last years I did not heard any severe security concerns regarding it, but the old paranoia perhaps and the set to oblivion makes it still unpopular soluttion for port redirect today in year 2021.

However for a local secured DMZ lans I can tell you that its use is mostly useful and I chooes to use it myself, everynow and then due to its simplicity to configure and use.

rinetd is pretty standard among unixes and is also available in old Sun OS / Solaris and BSD-es and pretty much everything on the Unix scene.

Below is excerpt from 'man rinetd':

DESCRIPTION

rinetd redirects TCP connections from one IP address and port to another. rinetd is a single-process server which handles any number of connections to the address/port pairs

specified in the file /etc/rinetd.conf. Since rinetd runs as a single process using nonblocking I/O, it is able to redirect a large number of connections without a severe im‐

pact on the machine. This makes it practical to run TCP services on machines inside an IP masquerading firewall. rinetd does not redirect FTP, because FTP requires more than

one socket. rinetd is typically launched at boot time, using the following syntax: /usr/sbin/rinetd The configuration file is found in the file /etc/rinetd.conf, unless another file is specified using the -c command line option.

To use rinetd on any LInux distro you have to install and enable it with apt or yum as usual. For example on my Debian GNU / Linux home machine to use it I had to install .deb package, enable and start it it via systemd :

server:~# apt install –yes rinetd

…

server:~# systemctl enable rinetd

server:~# systemctl start rinetd

server:~# systemctl status rinetd

● rinetd.service

Loaded: loaded (/etc/init.d/rinetd; generated)

Active: active (running) since Tue 2021-09-21 10:48:20 EEST; 2 days ago

Docs: man:systemd-sysv-generator(8)

Tasks: 1 (limit: 4915)

Memory: 892.0K

CGroup: /system.slice/rinetd.service

└─1364 /usr/sbin/rinetd

rinetd is doing the traffic redirect via a separate process daemon, in order for it to function once you have service up check daemon is up as well.

root@server:/home/hipo# ps -ef|grep -i rinet

root 359 1 0 16:10 ? 00:00:00 /usr/sbin/rinetd

root 824 26430 0 16:10 pts/0 00:00:00 grep -i rinet

+ Configuring a new port redirect with rinetd

Is pretty straight forward everything is handled via one single configuration – /etc/rinetd.conf

The format (syntax) of a forwarding rule is as follows:

[bindaddress] [bindport] [connectaddress] [connectport]

Besides that rinetd , could be used as a primitive firewall substitute to iptables, general syntax of allow deny an IP address is done with (allow, deny) keywords:

allow 192.168.2.*

deny 192.168.2.1?

To enable logging to external file ,you'll have to include in the configuration:

# logging information

logfile /var/log/rinetd.log

Here is an example rinetd.conf configuration, redirecting tcp mysql 3306, nginx on port 80 and a second web service frontend for ILO to server reachable via port 8888 and a redirect from External IP to local IP SMTP server.

#

# this is the configuration file for rinetd, the internet redirection server

#

# you may specify global allow and deny rules here

# only ip addresses are matched, hostnames cannot be specified here

# the wildcards you may use are * and ?

#

# allow 192.168.2.*

# deny 192.168.2.1?

#

# forwarding rules come here

#

# you may specify allow and deny rules after a specific forwarding rule

# to apply to only that forwarding rule

#

# bindadress bindport connectaddress connectport

# logging information

logfile /var/log/rinetd.log

83.228.93.76 80 192.168.0.20 80

192.168.0.2 3306 192.168.0.19 3306

83.228.93.76 443 192.168.0.20 443

# enable for access to ILO

83.228.93.76 8888 192.168.1.25 443

127.0.0.1 25 192.168.0.19 25

83.228.93.76 is my external ( Public ) IP internet address where 192.168.0.20, 192.168.0.19, 192.168.0.20 (are the DMZ-ed Lan internal IPs) with various services.

To identify the services for which rinetd is properly configured to redirect / forward traffic you can see it with netstat or the newer ss command

root@server:/home/hipo# netstat -tap|grep -i rinet

tcp 0 0 www.pc-freak.net:8888 0.0.0.0:* LISTEN 13511/rinetd

tcp 0 0 www.pc-freak.n:http-alt 0.0.0.0:* LISTEN 21176/rinetd

tcp 0 0 www.pc-freak.net:443 0.0.0.0:* LISTEN 21176/rinetd

…

+ Using rinetd to redirect External interface IP to loopback's port (127.0.0.1)

If you have the need to redirect an External connectable living service be it apache mysql / privoxy / squid or whatever rinetd is perhaps the tool of choice (especially since there is no way to do it with iptables.

If you want to redirect all traffic which is accessed via Linux's loopback interface (localhost) to be reaching a remote host 11.5.8.1 on TCP port 1083 and 1888, use below config

# bindadress bindport connectaddress connectport

11.5.8.1 1083 127.0.0.1 1083

11.5.8.1 1888 127.0.0.1 1888

For a quick and dirty solution to redirect traffic rinetd is very useful, however you'll have to keep in mind that if you want to redirect traffic for tens of thousands of connections constantly originating from the internet you might end up with some disconnects as well as notice a increased use of rinetd CPU use with the incrased number of forwarded connections.

2. Redirect TCP / IP port using DNAT iptables firewall rules

Lets say you have some proxy, webservice or whatever service running on port 5900 to be redirected with iptables.

The easeiest legacy way is to simply add the redirection rules to /etc/rc.local. In newer Linuxes rc.local so if you decide to use,

you'll have to enable rc.local , I've written earlier a short article on how to enable rc.local on newer Debian, Fedora, CentOS

# redirect 5900 TCP service

sysctl -w net.ipv4.conf.all.route_localnet=1

iptables -t nat -I PREROUTING -p tcp –dport 5900 -j REDIRECT –to-ports 5900

iptables -t nat -I OUTPUT -p tcp -o lo –dport 5900 -j REDIRECT –to-ports 5900

iptables -t nat -A OUTPUT -o lo -d 127.0.0.1 -p tcp –dport 5900 -j DNAT –to-destination 192.168.1.8:5900

iptables -t nat -I OUTPUT –source 0/0 –destination 0/0 -p tcp –dport 5900 -j REDIRECT –to-ports 5900

Here is another two example which redirects port 2208 (which has configured a bind listener for SSH on Internal host 192.168.0.209:2208) from External Internet IP address (XXX.YYY.ZZZ.XYZ)

# Port redirect for SSH to VM on openxen internal Local lan server 192.168.0.209

-A PREROUTING -p tcp –dport 2208 -j DNAT –to-destination 192.168.0.209:2208

-A POSTROUTING -p tcp –dst 192.168.0.209 –dport 2208 -j SNAT –to-source 83.228.93.76

3. Redirect TCP traffic connections with redir tool

If you look for an easy straight forward way to redirect TCP traffic, installing and using redir (ready compiled program) might be a good idea.

root@server:~# apt-cache show redir|grep -i desc -A5 -B5

Version: 3.2-1

Installed-Size: 60

Maintainer: Lucas Kanashiro <kanashiro@debian.org>

Architecture: amd64

Depends: libc6 (>= 2.15)

Description-en: Redirect TCP connections

It can run under inetd or stand alone (in which case it handles multiple

connections). It is 8 bit clean, not limited to line mode, is small and

light. Supports transparency, FTP redirects, http proxying, NAT and bandwidth

limiting.

.

redir is all you need to redirect traffic across firewalls that authenticate

based on an IP address etc. No need for the firewall toolkit. The

functionality of inetd/tcpd and "redir" will allow you to do everything you

need without screwy telnet/ftp etc gateways. (I assume you are running IP

Masquerading of course.)

Description-md5: 2089a3403d126a5a0bcf29b22b68406d

Homepage: https://github.com/troglobit/redir

Tag: interface::daemon, network::server, network::service, role::program,

use::proxying

Section: net

Priority: optional

server:~# apt-get install –yes redir

…

Here is a short description taken from its man page 'man redir'

DESCRIPTION

redir redirects TCP connections coming in on a local port, [SRC]:PORT, to a specified address/port combination, [DST]:PORT. Both the SRC and DST arguments can be left out,

redir will then use 0.0.0.0.

redir can be run either from inetd or as a standalone daemon. In –inetd mode the listening SRC:PORT combo is handled by another process, usually inetd, and a connected

socket is handed over to redir via stdin. Hence only [DST]:PORT is required in –inetd mode. In standalone mode redir can run either in the foreground, -n, or in the back‐

ground, detached like a proper UNIX daemon. This is the default. When running in the foreground log messages are also printed to stderr, unless the -s flag is given.

Depending on how redir was compiled, not all options may be available.

+ Use redir to redirect TCP traffic one time

Lets say you have a MySQL running on remote machine on some internal or external IP address, lets say 192.168.0.200 and you want to redirect all traffic from remote host to the machine (192.168.0.50), where you run your Apache Webserver, which you want to configure to use

as MySQL localhost TCP port 3306.

Assuming there are no irewall restrictions between Host A (192.168.0.50) and Host B (192.168.0.200) is already permitting connectivity on TCP/IP port 3306 between the two machines.

To open redirection from localhost on 192.168.0.50 -> 192.168.0.200:

server:~# redir –laddr=127.0.0.1 –lport=3306 –caddr=192.168.0.200 –cport=3306

If you need other third party hosts to be additionally reaching 192.168.0.200 via 192.168.0.50 TCP 3306.

root@server:~# redir –laddr=192.168.0.50 –lport=3306 –caddr=192.168.0.200 –cport=3306

Of course once you close, the /dev/tty or /dev/vty console the connection redirect will be cancelled.

+ Making TCP port forwarding from Host A to Host B permanent

One solution to make the redir setup rules permanent is to use –rinetd option or simply background the process, nevertheless I prefer to use instead GNU Screen.

If you don't know screen is a vVrtual Console Emulation manager with VT100/ANSI terminal emulation to so, if you don't have screen present on the host install it with whatever Linux OS package manager is present and run:

root@server:~#screen -dm bash -c 'redir –laddr=127.0.0.1 –lport=3306 –caddr=192.168.0.200 –cport=3306'

That would run it into screen session and detach so you can later connect, if you want you can make redir to also log connections via syslog with ( -s) option.

I found also useful to be able to track real time what's going on currently with the opened redirect socket by changing redir log level.

Accepted log level is:

-l, –loglevel=LEVEL

Set log level: none, err, notice, info, debug. Default is notice.

root@server:/ # screen -dm bash -c 'redir –laddr=127.0.0.1 –lport=3308 –caddr=192.168.0.200 –cport=3306 -l debug'

To test connectivity works as expected use telnet:

root@server:/ # telnet localhost 3308

Trying 127.0.0.1…

Connected to localhost.

Escape character is '^]'.

g

5.5.5-10.3.29-MariaDB-0+deb10u1-log�+c2nWG>B�����o+#ly=bT^]79mysql_native_password

6��#HY000Proxy header is not accepted from 192.168.0.19 Connection closed by foreign host.

once you attach to screen session with

root@server:/home # screen -r

You will get connectivity attempt from localhost logged : .

redir[10640]: listening on 127.0.0.1:3306

redir[10640]: target is 192.168.0.200:3306

redir[10640]: Waiting for client to connect on server socket …

redir[10640]: target is 192.168.0.200:3306

redir[10640]: Waiting for client to connect on server socket …

redir[10793]: peer IP is 127.0.0.1

redir[10793]: peer socket is 25592

redir[10793]: target IP address is 192.168.0.200

redir[10793]: target port is 3306

redir[10793]: Connecting 127.0.0.1:25592 to 127.0.0.1:3306

redir[10793]: Entering copyloop() – timeout is 0

redir[10793]: Disconnect after 1 sec, 165 bytes in, 4 bytes out

The downsides of using redir is redirection is handled by the separate process which is all time hanging in the process list, as well as the connection redirection speed of incoming connections might be about at least 30% slower to if you simply use a software (firewall ) redirect such as iptables. If you use something like kernel IP set ( ipsets ). If you hear of ipset for a first time and you wander whta it is below is short package description.

root@server:/root# apt-cache show ipset|grep -i description -A13 -B5

Maintainer: Debian Netfilter Packaging Team <pkg-netfilter-team@lists.alioth.debian.org>

Architecture: amd64

Provides: ipset-6.38

Depends: iptables, libc6 (>= 2.4), libipset11 (>= 6.38-1~)

Breaks: xtables-addons-common (<< 1.41~)

Description-en: administration tool for kernel IP sets

IP sets are a framework inside the Linux 2.4.x and 2.6.x kernel which can be

administered by the ipset(8) utility. Depending on the type, currently an

IP set may store IP addresses, (TCP/UDP) port numbers or IP addresses with

MAC addresses in a way which ensures lightning speed when matching an

entry against a set.

.

If you want to

.

* store multiple IP addresses or port numbers and match against the

entire collection using a single iptables rule.

* dynamically update iptables rules against IP addresses or ports without

performance penalty.

* express complex IP address and ports based rulesets with a single

iptables rule and benefit from the speed of IP sets.

.

then IP sets may be the proper tool for you.

Description-md5: d87e199641d9d6fbb0e52a65cf412bde

Homepage: http://ipset.netfilter.org/

Tag: implemented-in::c, role::program

Section: net

Priority: optional

Filename: pool/main/i/ipset/ipset_6.38-1.2_amd64.deb

Size: 50684

MD5sum: 095760c5db23552a9ae180bd58bc8efb

SHA256: 2e2d1c3d494fe32755324bf040ffcb614cf180327736c22168b4ddf51d462522

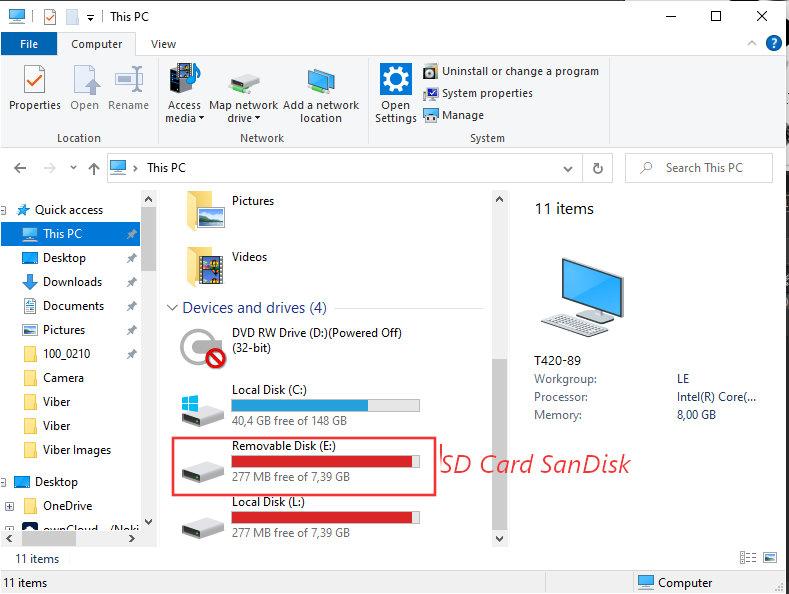

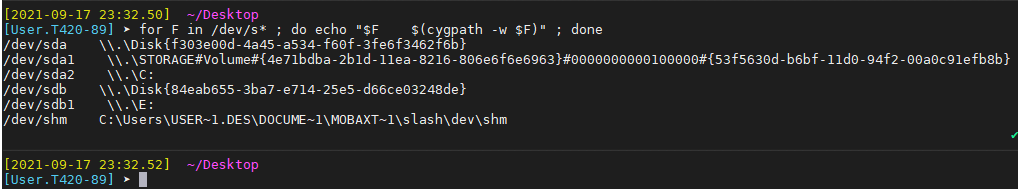

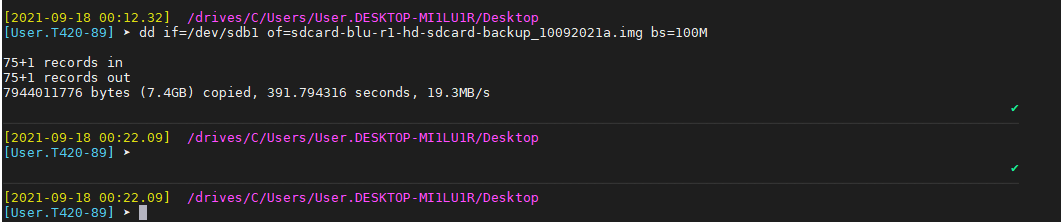

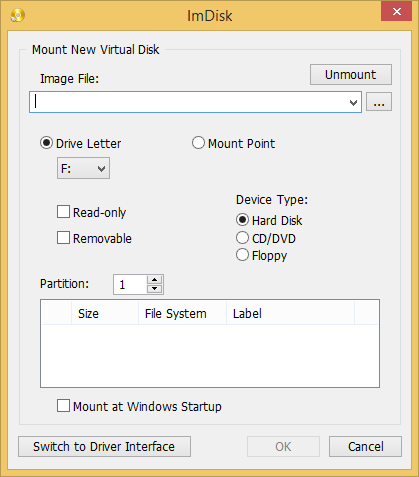

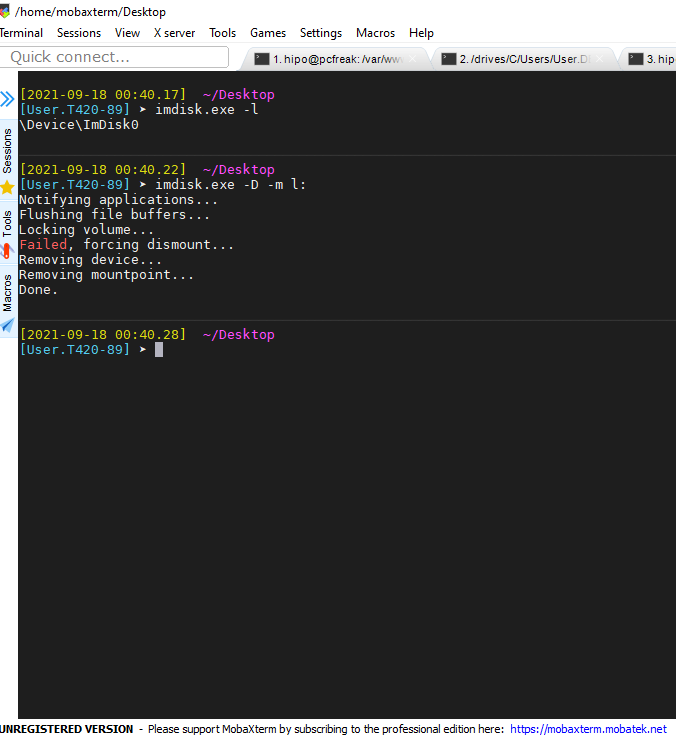

Check drives device naming on WIndows PC – Screenshot extract from Mobaxterm

Check drives device naming on WIndows PC – Screenshot extract from Mobaxterm

As a sysadmin that have my own Open Xen Debian Hypervisor running on a Lenovo ThinkServer few months ago due to a human error I managed to mess up one of my virtual machines and rebuild the Operating System from scratch and restore files service and MySQl data from backup that really pissed me of and this brought the need for having a decent Virtual Machine OpenXen backup solution I can implement on the Debian ( Buster) 10.10 running the free community Open Xen version 4.11.4+107-gef32c7afa2-1. The Hypervisor is a relative small one holding just 7 VM s:

As a sysadmin that have my own Open Xen Debian Hypervisor running on a Lenovo ThinkServer few months ago due to a human error I managed to mess up one of my virtual machines and rebuild the Operating System from scratch and restore files service and MySQl data from backup that really pissed me of and this brought the need for having a decent Virtual Machine OpenXen backup solution I can implement on the Debian ( Buster) 10.10 running the free community Open Xen version 4.11.4+107-gef32c7afa2-1. The Hypervisor is a relative small one holding just 7 VM s: