If you try to delete more than 131072 of files on Linux with rm -f *, where the files are all stored in the same directory, you will get an error:

/bin/rm: Argument list too long.

I've earlier blogged on deleting multiple files on Linux and FreeBSD and this is not my first time facing this error.

Anyways, as time passed, I've found few other new ways to delete large multitudes of files from a server.

In this article, I will explain shortly few approaches to delete few million of obsolete files to clean some space on your server.

Here are 3 methods to use to clean your tons of junk files.

1. Using Linux find command to wipe out millions of files

a.) Finding and deleting files using find's -exec switch:

# find . -type f -exec rm -fv {} \;

This method works fine but it has 1 downside, file deletion is too slow as for each found file external rm command is invoked.

For half a million of files or more, using this method will take "long". However from a server hard disk stressing point of view it is not so bad as, the files deletion is not putting too much strain on the server hard disk.

b.) Finding and deleting big number of files with find's -delete argument:

Luckily, there is a better way to delete the files, by using find's command embedded -delete argument:

# find . -type f -print -delete

c.) Deleting and printing out deleted files with find's -print arg

If you would like to output on your terminal, what files find is deleting in "real time" add -print:

# find . -type f -print -delete

To prevent your server hard disk from being stressed and hence save your self from server normal operation "outages", it is good to combine find command with ionice, e.g.:

# ionice -c 3 find . -type f -print -delete

Just note, that ionice cannot guarantee find's opeartions will not affect severely hard disk i/o requests. On heavily busy servers with high amounts of disk i/o writes still applying the ionice will not prevent the server from being hanged! Be sure to always keep an eye on the server, while deleting the files nomatter with or without ionice. if throughout find execution, the server gets lagged in serving its ordinary client requests or whatever, stop the execution of the cmd immediately by killing it from another ssh session or tty (if physically on the server).

2. Using a simple bash loop with rm command to delete "tons" of files

An alternative way is to use a bash loop, to print each of the files in the directory and issue /bin/rm on each of the loop elements (files) like so:

for i in *; do

rm -f $i;

done

If you'd like to print what you will be deleting add an echo to the loop:

# for i in $(echo *); do \

echo "Deleting : $i"; rm -f $i; \

The bash loop, worked like a charm in my case so I really warmly recommend this method, whenever you need to delete more than 500 000+ files in a directory.

3. Deleting multiple files with perl

Deleting multiple files with perl is not a bad idea at all.

Here is a perl one liner, to delete all files contained within a directory:

# perl -e 'for(<*>){((stat)[9]<(unlink))}'

If you prefer to use more human readable perl script to delete a multitide of files use delete_multple_files_in_dir_perl.pl

Using perl interpreter to delete thousand of files is quick, really, really quick.

I did not benchmark it on the server, how quick exactly is it, but I guess the delete rate should be similar to find command. Its possible even in some cases the perl loop is quicker …

4. Using PHP script to delete a multiple files

Using a short php script to delete files file by file in a loop similar to above bash script is another option.

To do deletion with PHP, use this little PHP script:

<?php

$dir = "/path/to/dir/with/files";

$dh = opendir( $dir);

$i = 0;

while (($file = readdir($dh)) !== false) {

$file = "$dir/$file";

if (is_file( $file)) {

unlink( $file);

if (!(++$i % 1000)) {

echo "$i files removed\n";

}

}

}

?>

As you see the script reads the $dir defined directory and loops through it, opening file by file and doing a delete for each of its loop elements.

You should already know PHP is slow, so this method is only useful if you have to delete many thousands of files on a shared hosting server with no (ssh) shell access.

This php script is taken from Steve Kamerman's blog . I would like also to express my big gratitude to Steve for writting such a wonderful post. His post actually become inspiration for this article to become reality.

You can also download the php delete million of files script sample here

To use it rename delete_millioon_of_files_in_a_dir.php.txt to delete_millioon_of_files_in_a_dir.php and run it through a browser .

Note that you might need to run it multiple times, cause many shared hosting servers are configured to exit a php script which keeps running for too long.

Alternatively the script can be run through shell with PHP cli:

php -l delete_millioon_of_files_in_a_dir.php.txt.

5. So What is the "best" way to delete million of files on Linux?

In order to find out which method is quicker in terms of execution time I did a home brew benchmarking on my thinkpad notebook.

a) Creating 509072 of sample files.

Again, I used bash loop to create many thousands of files in order to benchmark.

I didn't wanted to put this load on a productive server and hence I used my own notebook to conduct the benchmarks. As my notebook is not a server the benchmarks might be partially incorrect, however I believe still .they're pretty good indicator on which deletion method would be better.

hipo@noah:~$ mkdir /tmp/test

hipo@noah:~$ cd /tmp/test;

hiponoah:/tmp/test$ for i in $(seq 1 509072); do echo aaaa >> $i.txt; done

I had to wait few minutes until I have at hand 509072 of files created. Each of the files as you can read is containing the sample "aaaa" string.

b) Calculating the number of files in the directory

Once the command was completed to make sure all the 509072 were existing, I used a find + wc cmd to calculate the directory contained number of files:

hipo@noah:/tmp/test$ find . -maxdepth 1 -type f |wc -l

509072

real 0m1.886s

user 0m0.440s

sys 0m1.332s

Its intesrsting, using an ls command to calculate the files is less efficient than using find:

hipo@noah:/tmp/test$ time ls -1 |wc -l

509072

real 0m3.355s

user 0m2.696s

sys 0m0.528s

c) benchmarking the different file deleting methods with time

– Testing delete speed of find

hipo@noah:/tmp/test$ time find . -maxdepth 1 -type f -delete

real 15m40.853s

user 0m0.908s

sys 0m22.357s

You see, using find to delete the files is not either too slow nor light quick.

– How fast is perl loop in multitude file deletion ?

hipo@noah:/tmp/test$ time perl -e 'for(<*>){((stat)[9]<(unlink))}'real 6m24.669suser 0m2.980ssys 0m22.673s

Deleting my sample 509072 took 6 mins and 24 secs. This is about 3 times faster than find! GO-GO perl 🙂

As you can see from the results, perl is a great and time saving, way to delete 500 000 files.

– The approximate speed deletion rate of of for + rm bash loop

hipo@noah:/tmp/test$ time for i in *; do rm -f $i; done

real 206m15.081s

user 2m38.954s

sys 195m38.182s

You see the execution took 195m en 38 secs = 3 HOURS and 43 MINUTES!!!! This is extremely slow ! But works like a charm as the running of deletion didn't impacted my normal laptop browsing. While the script was running I was mostly browsing through few not so heavy (non flash) websites and doing some other stuff in gnome-terminal) 🙂

As you can imagine running a bash loop is a bit CPU intensive, but puts less stress on the hard disk read/write operations. Therefore its clear using it is always a good practice when deletion of many files on a dedi servers is required.

b) my production server file deleting experience

On a production server I only tested two of all the listed methods to delete my files. The production server, where I tested is running Debian GNU / Linux Squeeze 6.0.3. There I had a task to delete few million of files.

The tested methods tried on the server were:

– The find . type -f -delete method.

– for i in *; do rm -f $i; done

The results from using find -delete method was quite sad, as the server almost hanged under the heavy hard disk load the command produced.

With the for script all went smoothly. The files were deleted for a long long time (like few hours), but while it was running, the server continued with no interruptions..

While the bash loop was running, the server load avarage kept at steady 4

Taking my experience in mind, If you're running a production, server and you're still wondering which delete method to use to wipe some multitude of files, I would recommend you go the bash for loop + /bin/rm way. Yes, it is extremely slow, expect it run for some half an hour or so but puts not too much extra load on the server..

Using the PHP script will probably be slow and inefficient, if compared to both find and the a bash loop.. I didn't give it a try yet, but suppose it will be either equal in time or at least few times slower than bash.

If you have tried the php script and you have some observations, please drop some comment to tell me how it performs.

To sum it up;

Even though there are "hacks" to clean up some messy parsing directory full of few million of junk files, having such a directory should never exist on the first place.

Frankly, keeping millions of files within the same directory is very stupid idea.

Doing so will have a severe negative impact on a directory listing performance of your filesystem in the long term.

If you know better (more efficient) ways to delete a multitude of files in a dir please share in comments.

Fix Null error in WordPress comment reply with wordpress-threaded-comments plugin enabled

Friday, April 6th, 2012I'm running WordPress for already 3 years or so now. Since some very long time. The first wordpress install, I can hardly remember but it something like wordpress 2.5 or wordpress 2.4

Since quite a long time my wordpress blog is powered by a number of plugins, which I regularly update, whenever new plugins pops up …

I haven't noticed most of the time problems during major WordPress platform updates or the update of the installed extensions. However, today while I tried to reply back to one of my blog comments, I've been shocked that, I couldn't.

Pointing at the the Comment Reply box and typing inside was impossible and a null message was stayed filled in the form:

To catch what was causing this weird misbehaving with the reply comments functionality, I grepped through my /var/www/blog/wp-content/plugins/* for the movecfm(null,0,1,null):

# cd /var/www/blog/wp-content/plugins

# grep -rli 'movecfm(null,0,1,null)' */*.php

wordpress-thread-comment/wp-thread-comment.php

I've taken the string movecfm(null,0,1,null) from the browser page source in in my Firefox by pressing – Ctrl+U).

Once I knew of the problem, I first tried commenting the occurances of the null fields in wp-thread-comment.php, but as there, were other troubles in commenting this and I was lazy to read the whole code, checked online if some other fellows experienced the same shitty null void javascript error and already someone pointed at a solution. In the few minutes search I was unable to find anyone who reported for this bug, but what I found is some user threads on wordpress.org mentioning since WordPress 2.7+ the wordpress-threaded-comments is obsolete and the functionality provided by the plugin is already provided by default in newer WPinstalls.

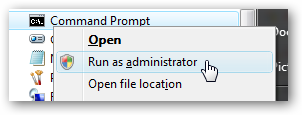

Hence in order to enable the threaded comments WordPress (embedded) reply functionality from within the wp-admin panel used:

Settings -> Discussions -> Enable Threaded (nested) comments (Tick)

You see there is also an option to define how many nested comments subcomments, can be placed per comment, the default was 5, but I thought 5 is a bit low so increased it to 10 comments reply possible per comment.

Finally, to prevent the default threaded comments to interfere with the WordPress Threaded Comments plugin, disabled the plugin through menus:

Plugins -> Active -> WordPress Thread Comments (Deactivate)This solved the weird javascript null "bug" caused by wordpress-threaded-comments once and for all.

Hopefully onwards, my blog readers will not have issues with threaded Reply Comments.

Tags: admin panel, Auto, code, Comment, Ctrl, Draft, fellows, few minutes, Firefox, form, grep, long time, misbehaving, movecfm, null fields, null message, number, occurances, option, page, page source, php, phpI, platform, plugin, Plugins, quot, reply comments, rli, someone, something, thread, threads, tick, time, time problems, Wordpress, wordpress blog, wp

Posted in Web and CMS, Wordpress | 1 Comment »