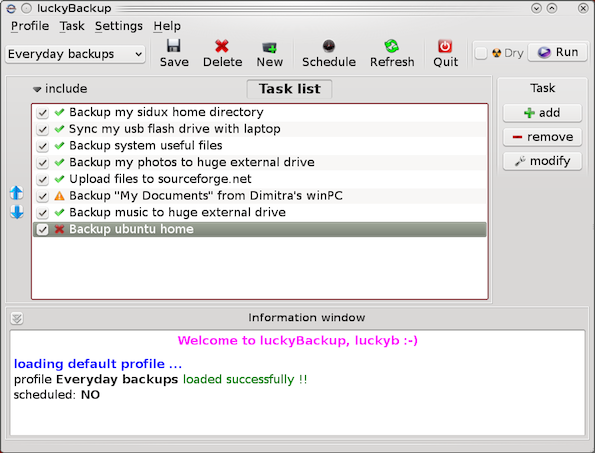

If you administer a large server environment, say a couple of hundred Linux machines running on physical hosts or as OpenXen / VMware guest virtual machines, you may sometimes hit an odd case where a mounted partition reports different disk usage when checked with the df command than when checked with du, for example:

root@sqlserver:~# df -hT /var/lib/mysql

Filesystem Type Size Used Avail Use% Mounted On

/dev/sdb5 ext4 19G 3,4G 14G 20% /var/lib/mysql

Here the '-T' argument is used to show the filesystem type.

root@sqlserver:~# du -hsc /var/lib/mysql

0K /var/lib/mysql/

0K total
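To put a number on the disagreement, a small helper can print both figures side by side. This is only a minimal sketch and not part of the original investigation: the function name df_du_gap is made up for illustration, and it assumes df and du accept the -P, -k, -s and -x options (GNU coreutils do).

```shell
# Hypothetical helper: print df "Used", du total and their difference (KiB)
# for a single mount point.
df_du_gap() {
    mnt=$1
    # -P forces one output line per filesystem; field 3 is the "Used" column.
    df_used=$(df -Pk "$mnt" | awk 'NR==2 {print $3}')
    # -s prints a single total, -x keeps du on this one filesystem.
    du_used=$(du -skx "$mnt" 2>/dev/null | awk '{print $1}')
    gap=$(( ${df_used:-0} - ${du_used:-0} ))
    echo "df_used_kb=${df_used:-0} du_used_kb=${du_used:-0} gap_kb=$gap"
}
```

Called as df_du_gap /var/lib/mysql, a large positive gap_kb would mean space that df counts but du cannot see. A small gap is normal, since df also counts filesystem metadata.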

1. Simple debugging of what might be the root cause of the df / du reporting inconsistency

Of course, the basic thing to do in that weird situation, after being totally shocked that it is possible at all, is to investigate which first-level sub-directories eat up the most space on the mounted location, with du:

# du -kx --max-depth=1 /var/lib/mysql/|uniq|sort -n

4 /var/lib/mysql/test

8 /var/lib/mysql/ezmlm

8 /var/lib/mysql/micropcfreak

8 /var/lib/mysql/performance_schema

12 /var/lib/mysql/mysqltmp

24 /var/lib/mysql/speedtest

64 /var/lib/mysql/yourls

144 /var/lib/mysql/narf

320 /var/lib/mysql/webchat_plus

424 /var/lib/mysql/goodfaithair

528 /var/lib/mysql/moonman

648 /var/lib/mysql/daniel

852 /var/lib/mysql/lessn

1292 /var/lib/mysql/gallery

The output is in kilobytes, which is a little hard to read; if you're used to megabytes instead, do

# du -mx --max-depth=1 /var/lib/mysql/|uniq|sort -n|less

…
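With a reasonably recent GNU coreutils there is also no need to pick a unit by hand, because sort can order the human-readable sizes that du -h prints. The sketch below assumes GNU du and GNU sort (for --max-depth and sort -h); the function name top_dirs and its optional second argument are made up for illustration.

```shell
# Hypothetical helper: show the N largest first-level entries of a directory
# (default 10), smallest first, with human-readable sizes.
top_dirs() {
    # -x stays on one filesystem, -h prints sizes such as 4.0K or 2.1M;
    # sort -h understands those K/M/G suffixes.
    du -xh --max-depth=1 "$1" 2>/dev/null | sort -h | tail -n "${2:-10}"
}
```

For example, top_dirs /var/lib/mysql 5 lists the five biggest entries, with the total for the directory itself on the last line.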

I also inspected the complete contents of the /var directory (running du from inside /var), sorted by size, with:

# du -akx ./ | sort -n

5152564 ./cache/rsnapshot/hourly.2/localhost

5255788 ./cache/rsnapshot/hourly.2

5287912 ./cache/rsnapshot

7192152 ./cache

…

Even after finding the biggest directories and clearing them up a bit, the two commands kept disagreeing. If you are as stunned as I was, you might try moving some files to a different filesystem to free up space or inodes, hoping that the inconsistency is caused by some kernel / FS caching and that this will eventually make the mounted FS refresh …

Unfortunately, if you try it you'll find that clearing up a couple of megabytes or gigabytes makes no difference in the command output.

Since the issue appeared both on a separately mounted local ext4 filesystem (/var/lib/mysql in my case) and on Network File System (NFS) mounts, my first thought, that it was caused by a network failure or an NFS bug, turned out to be wrong.

After a short further investigation of the inodes on the filesystem, it was clear that plenty of inodes were available:

# df -i /var/lib/mysql

Filesystem Inodes IUsed IFree IUse% Mounted on

/dev/sdb5 1221600 2562 1219038 1% /var/lib/mysql

So the assumption that the filesystem had run out of inodes was rejected as well.

P.S. If you're not familiar with inodes, read the manual, i.e. man 7 inode.
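If you have to repeat the inode check on many mount points, the percentage can be pulled out of df directly. A minimal sketch, assuming a df that supports -P together with -i (GNU df does); the function name inode_usage is hypothetical.

```shell
# Hypothetical helper: print the inode usage of a mount point as a bare number.
inode_usage() {
    # With -i the fifth field is IUse%; strip the trailing percent sign.
    # Some filesystems report "-" here because they have no fixed inode table.
    df -Pi "$1" | awk 'NR==2 { sub(/%$/, "", $5); print $5 }'
}
```

A value close to 100 would point at inode exhaustion. A low value, like the 1% above, rules it out.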

– Remounting the mounted filesystem

To make sure the inconsistency between du and df was not due to some hanging network mount or bug, the first logical thing I did was to remount the filesystem showing the different size; in my case this was done with:

# mount -o remount,rw -t ext4 /var/lib/mysql

For machines with remotely mounted NFS storage, I used:

# mount -o remount,rw -t nfs /var/www

The remount did not solve it, so I kept pondering the oddity. Of course I thought of a workaround: if the issue was caused by a kernel bug or an OS library issue, a reboot might be the solution. Unfortunately, restarting the VMs was neither wanted nor easy to do, so I continued investigating what was wrong …

The next check, of course, was to see what kind of network connections were open on the affected hosts with:

# netstat -tupanl

I did not find anything that might point to the disk usage discrepancy, so the next step was to check with lsof which files were currently held open by running processes on the systems with the weird df / du reports, and boom, there I observed an oddity, multiple open files marked as deleted:

# lsof -nP | grep '(deleted)'

COMMAND PID USER FD TYPE DEVICE SIZE NLINK NODE NAME

mysqld 2588 mysql 4u REG 253,17 52 0 1495 /var/lib/mysql/tmp/ibY0cXCd (deleted)

mysqld 2588 mysql 5u REG 253,17 1048 0 1496 /var/lib/mysql/tmp/ibOrELhG (deleted)

mysqld 2588 mysql 6u REG 253,17 777884290 0 1497 /var/lib/mysql/tmp/ibmDFAW8 (deleted)

mysqld 2588 mysql 7u REG 253,17 123667875 0 11387 /var/lib/mysql/tmp/ib2CSACB (deleted)

mysqld 2588 mysql 11u REG 253,17 123852406 0 11388 /var/lib/mysql/tmp/ibQpoZ94 (deleted)

…

Notice that there were plenty of files in the '(deleted)' state still held open, 438 overall:

# lsof -nP | grep '(deleted)' |wc -l

438

As I learned a bit more online about the problem, I found it is also possible to list only the deleted (unlinked) files without any grep, using lsof arguments alone:

# lsof +L1|less

The SIZE field (seventh column in the output above) shows that a number of these files are really large. They have been deleted from the directory tree but are still held open by a process, so the kernel cannot release their blocks: df keeps counting the space while du no longer sees the files. In my case these were temp files created by the mysqld daemon, but depending on the service the server provides it might be Apache running as www-data, some custom perl / bash script executed via a cron job, stalled rsync jobs etc.
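To see how much space each program is holding this way, the SIZE column can be summed per command. This is a rough sketch only: the function name sum_deleted is made up, it assumes the column layout shown above (size in the seventh field), and the result is an upper bound, because a file opened through several descriptors is counted once per descriptor.

```shell
# Hypothetical helper: read `lsof +L1` output on stdin and print, per command,
# the summed size in bytes of entries marked "(deleted)".
sum_deleted() {
    awk '/\(deleted\)$/ && $7 ~ /^[0-9]+$/ { total[$1] += $7 }
         END { for (cmd in total) printf "%s %.0f\n", cmd, total[cmd] }'
}
```

It is meant to be fed from lsof, for example lsof -nP +L1 | sum_deleted, run as root so that all processes are visible.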

2. Check the list of all open files for the mysql / root user as part of the server filesystem inconsistency debugging

– Grep opened files on server by user

# lsof |grep mysql

mysqld 1312 mysql cwd DIR 8,21 4096 2 /var/lib/mysql

mysqld 1312 mysql rtd DIR 8,1 4096 2 /

mysqld 1312 mysql txt REG 8,1 20336792 23805048 /usr/sbin/mysqld

mysqld 1312 mysql mem REG 8,21 24576 20 /var/lib/mysql/tc.log

mysqld 1312 mysql DEL REG 0,16 29467 /[aio]

mysqld 1312 mysql mem REG 8,1 55792 14886933 /lib/x86_64-linux-gnu/libnss_files-2.28.so

# lsof | grep root

COMMAND PID TID TASKCMD USER FD TYPE DEVICE SIZE/OFF NODE NAME

systemd 1 root cwd DIR 8,1 4096 2 /

systemd 1 root rtd DIR 8,1 4096 2 /

systemd 1 root txt REG 8,1 1489208 14928891 /lib/systemd/systemd

systemd 1 root mem REG 8,1 1579448 14886924 /lib/x86_64-linux-gnu/libm-2.28.so

…
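On hosts where lsof is not installed, roughly the same check can be done for a single process by reading /proc. A minimal sketch, assuming a Linux /proc filesystem; the function name deleted_fds is hypothetical, and it has to be run as root or as the owner of the process.

```shell
# Hypothetical helper: list the open descriptors of a PID that point at
# files which have already been deleted.
deleted_fds() {
    pid=$1
    for fd in /proc/"$pid"/fd/*; do
        # Skip descriptors that cannot be read or vanished in the meantime.
        target=$(readlink "$fd" 2>/dev/null) || continue
        case $target in
            # The kernel appends " (deleted)" to the link target of unlinked files.
            *' (deleted)') echo "${fd##*/} $target" ;;
        esac
    done
}
```

For the MySQL case above this would be called as deleted_fds 2588, printing one line per descriptor with its number and the old path.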

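Another command that helped to track down the discrepancy between df and du disk usage on the FS lists every file and directory on the root filesystem whose size du reports as a single-digit number of gigabytes: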

# du -hxa / | egrep '^[[:digit:]]{1,1}G[[:space:]]*'

3. Fixing the problem of large deleted files kept open on the filesystem

What is the real reason for ending up with these file handles held open by programs running in the background on the Linux OS?

There can be multiple reasons, but most likely it is excessive server / client interaction, a service that strains RAM or the disk by endlessly writing logs to the FS and keeping the space occupied, or bugs in a programming library used by a hung service that leave the file handle open on storage.
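Whatever the trigger, the mechanism itself is easy to reproduce in a scratch directory. The snippet below is only an illustrative sketch, assuming Linux with /proc mounted; the file name held.log and the variable names are made up.

```shell
# Reproduce a deleted-but-still-open file using the current shell as the holder.
demo_dir=$(mktemp -d)
exec 3>"$demo_dir/held.log"     # this shell now holds the file open on fd 3
echo "some data" >&3
rm "$demo_dir/held.log"         # the name is gone, the inode stays allocated
ls -A "$demo_dir"               # prints nothing, so du no longer counts the file
link_target=$(readlink "/proc/$$/fd/3")
echo "$link_target"             # old path followed by " (deleted)"
exec 3>&-                       # closing the last descriptor frees the blocks
rmdir "$demo_dir"
```

As long as descriptor 3 stays open, df keeps counting the file's blocks. That is exactly the state the mysqld temp files were in.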

What is the solution to the problem of deleted files left open?

The best solution is to first fix the custom script or hung service and then, if possible, simply restart the server so that the kernel and services reload; if that is not possible, just restart the processes that create the problem.

Once the process is identified, in my case MySQL, on newer systemd-enabled distros just do:

# systemctl restart mysqld.service

or on older System V init.d ones (replace service with the name of the init script):

# /etc/init.d/service restart

For custom hung scripts listed in ps axuwef, you can look up the PID and send it kill -HUP, provided the script is written to handle HUP and restart its sub-processes properly. BE EXTRA CAREFUL IF YOU'RE RESTARTING BROKEN SCRIPTS, as this might disrupt your running services …

# pgrep -l script.sh

7977 script.sh

# kill -HUP PID

This should finally either mitigate or, in the best case, completely solve the reported disagreement between df and du, after which the reported disk space should be back to normal and approximately the same in both commands (the size changes a bit between the two checks because the mysql service keeps writing data).

# df -k /var/lib/mysql; du -skc /var/lib/mysql

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sdb5 19097172 3472744 14631296 20% /var/lib/mysql

3427772 /var/lib/mysql

3427772 total

What did we learn?

In this article I've explained why and how 'zombie' files come to reside on a filesystem, appearing to eat disk space on a mounted local or network partition, giving strange inconsistent reports, leading to service disruptions and making it impossible to get correct information on the used disk space of a mounted drive.

I went through some standard logic for debugging service / filesystem / inode issues, which led me to the finding that deleted files were being kept on the filesystem, making it report a strange, incorrect size and appear full even after it had been extended with tune2fs and was supposed to have an extra 50 GB.

Finally, I briefly explained how to HUP / restart the hanging script / service to fix it.

A few good readings that helped to fix the issue:

What to do when du and df report different usage

df in linux not showing correct free space after file removal

Why do “df” and “du” commands show different disk usage?