Posts Tagged ‘freak’

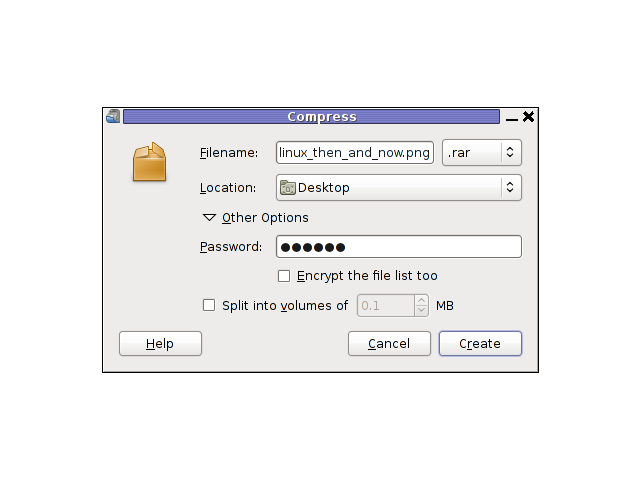

Friday, September 16th, 2011

By default, WordPress does not support changing the font. As a result, text copied from OpenOffice or MS Word and pasted into the Post or Page editor often keeps fonts that differ from the default one set for articles.

Because of this, some articles published on a WordPress blog show up in the wrong font. The only fix is to change the font in OpenOffice first and then paste the text into WordPress again, since the font cannot be changed from within the WordPress editor itself.

To get around this problem there is a nice plugin, fckeditor-for-wordpress-plugin, which gives WordPress awesome Word-like editing features.

The download location for FCKEditor for WordPress is here.

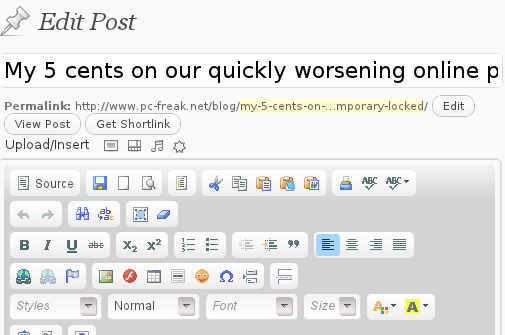

Here is a screenshot of the extra functionality FCKEditor for WordPress provides.

Installing the plugin works like installing any other WordPress plugin:

debian:/~# cd /var/www/blog/wp-content/plugins

debian:/plugins# wget http://downloads.wordpress.org/plugin/fckeditor-for-wordpress-plugin.3.3.1.zip

...

debian:/plugins# unzip fckeditor-for-wordpress-plugin.3.3.1.zip

...

debian:/plugins# cd fckeditor-for-wordpress-plugin/

Then enable the plugin from:

Plugins -> Inactive -> (Dean's FCKEditor For WordPress) Enable

FCKEditor for WordPress replaces the default WordPress editor, TinyMCE, as soon as it is enabled.

I’ve also made a mirror of the current version as of the time of writing; you can download the mirrored FCKEditor for WordPress here.

Now editing inside the WordPress admin panel is much easier and more convenient 😉 Cheers

Tags: admin panel, aids, blog, Cheers, current version, dean, download, exact font, FCKEdit, FCKEditor, freak, functionality, hereHere, Inactive, Installing, location, lt, Microsoft, microsoft word, mirror, ms word, Open, open office, page, panel, plugin, post, quot, screenshot, show, time, unzip, var, version, way, wget, Word, Wordpress, wp, writting, www

Posted in Everyday Life, Various, Web and CMS, Wordpress | 2 Comments »

Tuesday, December 13th, 2011

In just a few years, Facebook and YouTube have become de facto standard services for 80% of computer users in our age.

There seems to be a growing tendency for people to adopt new, easy-to-use services, along with a boom in social networks.

We’ve seen this with the fast adoption of Skype, a program hostile to human freedom, of privacy-breaching Facebook, and of YouTube, a service that tracks people's interests.

We saw similarly high adoption rates in earlier times as well: with the already dying (if not dead) MySpace, with the early Yahoo Mail boom, and even earlier with the AltaVista search engine.

This time, however, it appears YouTube and Facebook are here to stay and will remain standard online services for a long time …

Many people who work in an office staring at a computer screen all day, as well as teenagers and practically anyone in the developed and developing world, use these services heavily (between 5 and 10 hours a day or more). Users of these Software as a Service offerings spend approximately half of their time on the internet on YouTube and/or Facebook.

It's true that YouTube can be massively educational with its global database of videos on all kinds of topics, and in some cases Facebook can be helpful for keeping in touch with people or keeping a catalog of pictures accessible from everywhere. However, when a few services become more used and influential than all the other services on the internet, they become harmful to communities and destroy cultures. The concentration of most of the human population that uses high technology around a few online services creates a big electronic monopoly. In other words, the tendency toward amalgamation of businesses we see in the real world (the building of big malls and the destruction of small and middle-sized shops) is being observed in the Internet space as well.

Besides that, Facebook, YouTube and Twitter run highly contrary to the true hacker spirit and do great harm to intellectuals and to close-knit technical community culture by creating an illusory, casual disco culture without any depth of thought or spirit.

It's observable that most of the people who use those services heavily are turning (if I'm not exaggerating) into brainless consumer zombies, a crowd of aimless people who watch videos and pictures and write meaningless comments all day long.

As a result you get a “unified dumbness” (a dumbness that unifies people).

Even if we accept that grown people with fully formed characters are aware of the threats of using YouTube or Facebook, this is definitely not the case with young people who are still in the process of building their personality and personal tastes.

The harmful results of the so-called social networks can be seen almost everywhere. In most of the cafeterias I visit, the bartender uses Facebook or YouTube all day long, and most of the random people I see in a coffee shop, a university or any public institution with internet access are again on YouTube and Facebook. The result is that people barely use the Net as a whole and just hang around in those few services, wasting network bandwidth, loading networks and computer equipment, and spending energy for nothing. This wasteful use of computers and the Internet deepens ecological problems, as energy is spent not on goal-oriented tasks but on “empty”, false entertainment.

Hence, for many, the whole original idea of the internet has changed and comes down to a few words (Skype, YouTube, Facebook, etc.).

Besides that, instead of reading valuable classic books, youngsters stare at the computer screen for most of their cognitive time, at only these few “services”, and learn to become consumers rather than independent thinkers and inventors.

Lately I have not met a single young person who really thinks for himself. From my own experience, the IQ level of generations younger than mine (I’m 28) keeps getting lower and lower. I see the main cause in the constant interaction with technology built to restrict, a consumer technology, so to say.

Facebook and YouTube put into young people's minds the wrong idea that they should be people of limited choice, always praising whatever is newest and brightest (without considering any side effects). These services lead people to the idea that one should always follow the crowd and never hold a solid opinion or a firm position on life's matters. As I said, personal opinion is heavily suppressed, especially on Facebook, where young people try to appear not as what they really are but copy and paste trendy buzzwords and modern styles, or simply copy the heroes of the day. As one can imagine, this prevents a person from developing a strong, unique identity and personal preferences.

Many older or computer-illiterate people can hardly recognize these severe problems, as they are not aware of the technical side of things and do not realize how compromising for security, and how binding, constant exposure to those online hives is.

The purpose of this small post is just an attempt to raise some awareness of the potential problems we as a society might face very soon if we keep following the latest buzz trends instead of stopping for a moment to think deeply about the moral consequences of giving so much power to Internet media like Facebook and YouTube …

Tags: adoption rates, age, AltaVista, altavista search engine, amalgamation, computer screen, computer users, consumer, Culture, defacto, disco, downer, earlier times, Engine, facebook, freak, freedom, freedom program, global database, half, human freedom, impact, internet space, mail boom, malls, middle, monopoly, Myspace, negative impact, opinion, popuplation, quot, screen, Search, service users, Skype, social networks, software, technology, tendency, time, tru, yahoo mail, youtube

Posted in Everyday Life, Various | 7 Comments »

Thursday, December 8th, 2011

I’m realizing that the more I turn into a fully GUI user, the less coding or other interesting stuff I do…

I remembered the glorious old times when I was a full-time console user, and recalled a nifty trick I used a lot back in the day.

Back then I quite often wrote shell scripts that fetched (HTML) web pages and converted the HTML content into plain text (TXT) files.

To fetch a page back in those days I used lynx (a very simple UNIX text browser, which by the way lacks support for CSS and JavaScript) in combination with html2text (an advanced HTML-to-text converter).

Let’s say I wanted to fetch my personal home page https://www.pc-freak.net/; I did that with the command:

$ lynx -source https://www.pc-freak.net/ | html2text > pcfreak_page.txt

lynx prints the content of www.pc-freak.net as HTML source, which is piped to html2text; its plain-text output is redirected into the file pcfreak_page.txt.

The somewhat more advanced elinks (a lynx-like alternative character-mode WWW browser) has better support for HTML and even some CSS and JavaScript, so for many pages it is better to use it instead of lynx to save the content properly. Producing .txt files with elinks works the same way, e.g.:

$ elinks -source https://www.pc-freak.net/blog/ | html2text > pcfreak_blog_page.txt

By the way, back in the day I used links more than the superior elinks. Nowadays I have both text browsers installed, and in my test, fetching a page as in the example above and piping it to html2text produced garbled output.

It's worth saying that you don't even need a text browser installed to fetch a web page and convert it to plain text (TXT)! The wget download tool can dump a page's source as well; for anyone who has not tried it yet:

$ wget -qO- https://www.pc-freak.net | html2text

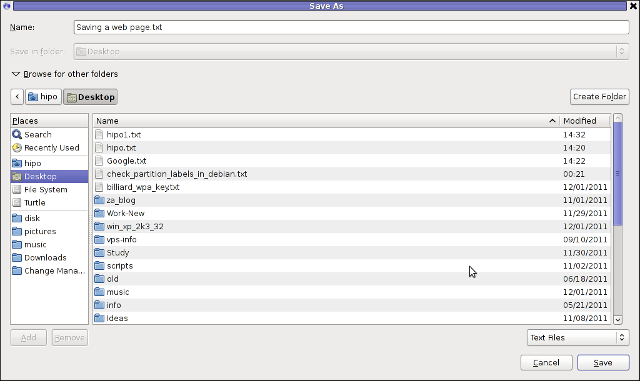

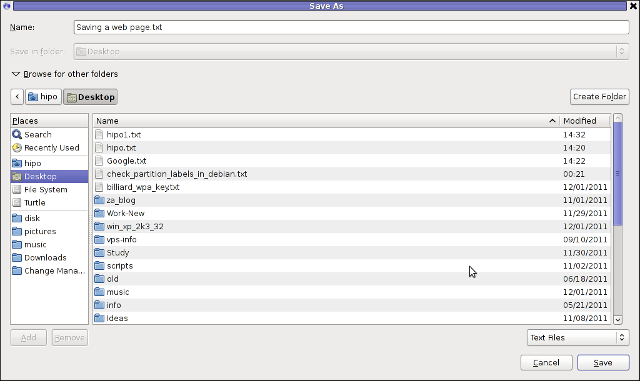

Of course, the text of some pages will not be saved properly by either lynx or elinks, because some of it may be embedded in CSS or JavaScript that these browsers do not support. In such cases a GUI browser is useful: you can use the built-in File -> Save As (Text Files) feature of any browser such as Firefox, Epiphany or Opera. The screenshot shows an HTML page I’m about to save as a plain text file in Mozilla Firefox.

Besides working well together with text browsers, html2text is also handy for converting .html pages already stored on the computer’s hard drive to plain text (.TXT).

One might wonder why anyone would ever want to do that. Well, I personally prefer reading plain text documents to HTML 😉

Converting an HTML file already on the hard drive with html2text is done with:

$ html2text index.html >index.txt

To convert a whole directory of .html (documentation) files to plain text .TXT, cd into the directory with the HTML files and run this one-line bash loop:

$ cd html/

html$ for i in *.html; do html2text "$i" > "${i%.html}.txt"; done
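The loop above handles a single directory. For a documentation tree with subdirectories, something along these lines should work. It is a sketch that assumes html2text takes the file name as an argument and writes to standard output, as in the examples above; the function name `convert_html_tree` and the `HTML2TEXT` override variable are hypothetical, added only so the converter can be swapped (for example when testing).

```bash
#!/usr/bin/env bash
# Convert every *.html file under a directory tree to a .txt file next to it.
# Assumes html2text prints the converted text to stdout, as in the examples above.
# HTML2TEXT is a hypothetical override for the converter; defaults to html2text.

convert_html_tree() {
    local root=${1:-.}
    local conv=${HTML2TEXT:-html2text}
    local f
    # -print0 together with read -d '' keeps names with spaces intact
    find "$root" -type f -name '*.html' -print0 |
        while IFS= read -r -d '' f; do
            # ${f%.html} strips only the trailing ".html" suffix;
            # </dev/null stops the converter from eating the file list on stdin
            "$conv" "$f" < /dev/null > "${f%.html}.txt"
        done
}

# Usage: convert_html_tree html/
```

Running `convert_html_tree html/` turns html/index.html into html/index.txt and does the same in every subdirectory. Using `${f%.html}` instead of sed means only the final .html suffix is replaced, so a name like `my.htmlpage.html` is handled correctly.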

Now lie back and enjoy reading the docs like in the good old hacker days when .TXT files were fashionable 😉

Tags: advanced html, character mode, command lynx, content, convertion, course, CSS, drive, file, freak, full time, glorious times, gnu linux, html pages, html source, HTML-to-text, html2text, index, interesting stuff, javascipt, Javascript, Lynx, necessery, nifty trick, page, page txt, pcfreak, PDF, personal home page, Shell, shell scripts, spit, support, terminal, text, text browser, text converter, time, trick, TXT, unix text, wget

Posted in Everyday Life, FreeBSD, Linux, Linux and FreeBSD Desktop, Various | 1 Comment »

Friday, October 7th, 2011

RarCrack can crack password-protected rar and 7z archive files on Linux.

The program is currently at release version 0.2, so it's far from perfect, but at least it can break rars.

On most Linux distributions RarCrack can currently be installed only from source: download it, then run make && make install. RarCrack’s official site is here, and I’ve mirrored the current version of RarCrack for download here. To install rarcrack from source using the mirrored version:

linux:~# wget https://www.pc-freak.net/files/rarcrack-0.2.tar.bz2

...

linux:~# tar -jxvvf rarcrack-0.2.tar.bz2

linux:~# cd rarcrack-0.2

linux:~/rarcrack-0.2# make

...

linux:~/rarcrack-0.2# make install

...

On FreeBSD, rarcrack is available in the ports tree; to install it:

freebsd# cd /usr/ports/security/rarcrack

freebsd# make && make install

...

To crack a rar, zip or 7z archive with RarCrack:

freebsd% rarcrack rar_file_protected_with_password.rar --type rar

The --type rar argument is optional; for most archives RarCrack should detect the archive type automatically. The --type option also accepts 7z and zip.

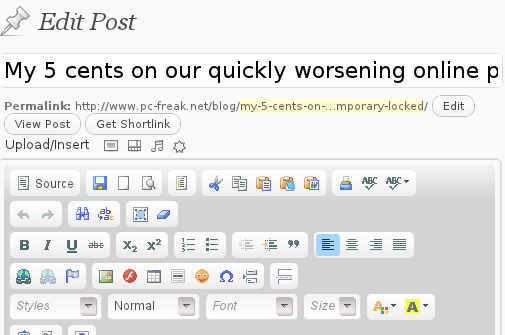

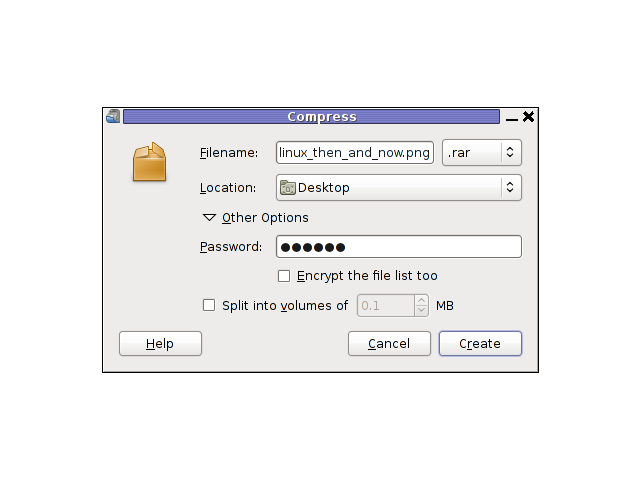

I’ve created a sample password-protected rar file, linux_then_and_now.png.rar. It contains linux_then_and_now.png, a graphic illustrating the growth of Linux use in computers, mobiles and servers. linux_then_and_now.png.rar is protected with the sample password parola.

RarCrack also supports threads (several workers trying passwords simultaneously). Using threads speeds up cracking, so passing --threads is generally a good idea, for example:

freebsd% rarcrack linux_then_and_now.png.rar --threads 8 --type rar

RarCrack! 0.2 by David Zoltan Kedves (kedazo@gmail.com)

INFO: the specified archive type: rar

INFO: cracking linux_then_and_now.png.rar, status file: linux_then_and_now.png.rar.xml

Probing: '0i' [24 pwds/sec]

Probing: '1v' [25 pwds/sec]

RarCrack's source archive also comes with three sample archive files (rar, 7z and zip) protected with passwords, for testing the tool.

One downside of RarCrack is that it is extremely slow at breaking passwords: on my Lenovo notebook (dual-core 1.8 GHz, 2 GB RAM) it brute-forced only 20-25 passwords per second.

This means that cracking even a 6-symbol password made only of digits can take hours (up to about 11 at 25 passwords per second), and a password with letters takes far longer.
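The estimate is simply the number of possible passwords divided by the cracking rate. Here is a worst-case calculation in shell arithmetic, assuming the 25 passwords per second measured above and that every combination may have to be tried (on average about half of them). The character-set sizes (digits, lowercase letters, mixed-case letters plus digits) are illustrative and not necessarily the set RarCrack tries by default.

```bash
#!/usr/bin/env bash
# Worst-case brute-force time in seconds = charset_size ^ length / rate.
# 25 pwds/sec is the rate measured above; the charset sizes are examples.

brute_force_seconds() {
    local charset=$1 length=$2 rate=$3
    echo $(( charset ** length / rate ))
}

rate=25
for charset in 10 26 62; do
    secs=$(brute_force_seconds "$charset" 6 "$rate")
    printf '%2d symbols, 6 chars: %d s (~%d hours)\n' \
        "$charset" "$secs" $(( secs / 3600 ))
done
```

This prints about 11 hours for digits only, 3432 hours (roughly 143 days) for lowercase letters, and 631113 hours (roughly 72 years) for mixed-case letters plus digits, which is why brute force at this speed is only practical for short or purely numeric passwords.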

RarCrack is also said to support cracking zip passwords, but my tests on password-protected zip files did not bring good results, and one of them even ended with a segmentation fault.

To test how rarcrack performs with password-protected zip files, and so compare whether it is better or worse than fcrackzip, I used fcrackzip’s sample password-protected zip, noradi.zip:

hipo@noah:~$ rarcrack --threads 8 noradi.zip --type zip

RarCrack! 0.2 by David Zoltan Kedves (kedazo@gmail.com)

INFO: the specified archive type: zip

INFO: cracking noradi.zip, status file: noradi.zip.xml

Probing: 'hP' [386 pwds/sec]

Probing: 'At' [385 pwds/sec]

Probing: 'ST' [380 pwds/sec]

As the output above shows, zip cracking runs at approximately 380 passwords per second, which is quicker than with rar but still slower than fcrackzip.

RarCrack segfaults when asked to crack a password-protected zip without the --type zip argument:

linux:~$ rarcrack --threads 8 noradi.zip

RarCrack! 0.2 by David Zoltan Kedves (kedazo@gmail.com)

Segmentation fault

While talking about cracking password-protected rar and zip archives, it's worth mentioning that creating a password-protected archive with the Gnome desktop on Linux and FreeBSD is very easy.

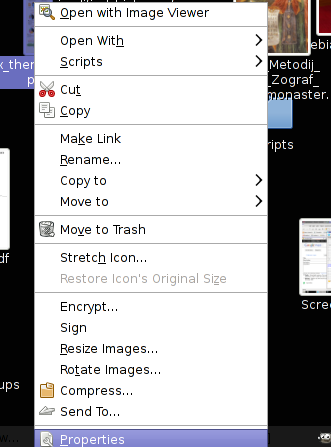

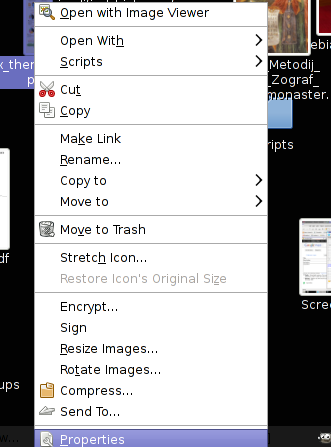

To create a password-protected archive in the Gnome graphical environment:

a. Point the cursor at the file you want to archive with a password

b. Click Other Options and fill in the password in the password field

As of the time of writing, I think no GUI frontend is available for either RarCrack or fcrackzip. Let's hope some good guy from the community will take the time to write a Gnome extension that lets us crack rar and zip files from a nice GUI.

Tags: amp, archive type, argument, argument type, bz2 linux, com, current version, download, fcrackzip, file, freak, Gmail, Gnome, gnu linux, instance, Linux, linux cd, linux growth, linux tar, most linux distributions, option, password, png, ports, random linux, rar, rarcrackfreebsd, rarINFO, second, segmentation, status, tar bz2, time, tool, tree, type, type option, usr, version linux, wget, xml, zoltan

Posted in Computer Security, System Administration, Various | 3 Comments »

Friday, September 30th, 2011

If you're looking for a command-line utility to generate a PDF file from any web page online, wkhtmltopdf is what you are looking for.

The tool converts web pages to PDF using Apple's open source WebKit rendering engine.

wkhtmltopdf is very useful for web developers, as some websites need to generate PDFs dynamically from remote web pages.

wkhtmltopdf ships with Debian 6 (Squeeze) and the latest Ubuntu versions, but it has not yet entered the Fedora and CentOS repositories.

To use wkhtmltopdf on Debian / Ubuntu, install it via apt:

linux:~# apt-get install wkhtmltopdf

...

Next, to convert a web page of your choice, run:

linux:~$ wkhtmltopdf www.pc-freak.net www.pc-freak.net_website.pdf

Loading page (1/2)

Printing pages (2/2)

Done

If the web page is long, wkhtmltopdf generates a PDF of several pages.

wkhtmltopdf can also create the snapshot with a specified orientation, Landscape or Portrait, by passing the -O option, like so:

linux:~$ wkhtmltopdf -O Portrait www.pc-freak.net www.pc-freak.net_website.pdf

wkhtmltopdf has many useful options, here are some of them:

- Javascript disabling – Disable support for javascript for a website

- Grayscale PDF generation – Generates the PDF in grayscale

- Low quality PDF generation – Useful to shrink the size of the generated PDF

- Set PDF page size – (A4, Letter etc.)

- Add zoom to the generated pdf content

- Support for password HTTP authentication

- Support to use the tool over a proxy

- Generation of a Table of Contents based on titles (only in static version)

- Adding of Header and Footers (only in static version)

To generate an A4 page with wkhtmltopdf:

wkhtmltopdf -s A4 www.pc-freak.net/blog/ www.pc-freak.net_blog.pdf
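To snapshot several pages in one run, a small loop can derive each output file name from the URL, in the same style as the examples above (www.pc-freak.net/blog/ becomes www.pc-freak.net_blog.pdf). This is a sketch: the naming rule, the function names and the `WKHTMLTOPDF` override variable are hypothetical choices of mine; only the `-s A4` option comes from the command above.

```bash
#!/usr/bin/env bash
# Render each URL given as an argument to an A4 PDF with wkhtmltopdf.
# WKHTMLTOPDF is a hypothetical override for the converter command.

pdf_name_for() {
    local url=$1
    url=${url#*://}              # drop http:// or https:// if present
    url=${url%/}                 # drop a trailing slash
    printf '%s.pdf\n' "${url//\//_}"   # remaining slashes become underscores
}

urls_to_pdf() {
    local conv=${WKHTMLTOPDF:-wkhtmltopdf}
    local url
    for url in "$@"; do
        "$conv" -s A4 "$url" "$(pdf_name_for "$url")"
    done
}

# Usage: urls_to_pdf www.pc-freak.net www.pc-freak.net/blog/
```

With the usage line above, the two pages end up in www.pc-freak.net.pdf and www.pc-freak.net_blog.pdf in the current directory.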

wkhtmltopdf looks promising but still seems a bit buggy; here is what happened when I tried to create a PDF without setting the A4 page size:

linux:$ wkhtmltopdf www.pc-freak.net/blog/ www.pc-freak.net_blog.pdf

Loading page (1/2)

OpenOffice path before fixup is '/usr/lib/openoffice' ] 71%

OpenOffice path is '/usr/lib/openoffice'

OpenOffice path before fixup is '/usr/lib/openoffice'

OpenOffice path is '/usr/lib/openoffice'

** (:12057): DEBUG: NP_Initialize

** (:12057): DEBUG: NP_Initialize succeeded

** (:12057): DEBUG: NP_Initialize

** (:12057): DEBUG: NP_Initialize succeeded

** (:12057): DEBUG: NP_Initialize

** (:12057): DEBUG: NP_Initialize succeeded

** (:12057): DEBUG: NP_Initialize

** (:12057): DEBUG: NP_Initialize succeeded

Printing pages (2/2)

Done

Printing pages (2/2)

Segmentation fault

The Debian and Ubuntu versions of wkhtmltopdf do not support TOC generation or adding headers and footers; for that you have to download and install the static version of wkhtmltopdf.

Using the static version of the tool is also the only option for anyone on Fedora or any other RPM based Linux distro.

Tags: apple, authentication support, CentOS, choice, command, command line utility, content support, conversion, DEBUG, DoneIf, fedora, freak, generation, gnu linux, Grayscale, Initialize, Javascript, Landscape, landscape portrait, line, Linux, linux versions, loading page, low quality, nbsp, online, Open, open source, OpenOffice, orientation, page, password, PDF, pdf content, pdf size, portrait options, printing, quality pdf, repositories, requirement, Set, size a4, snapshot, something, squeeze, static version, support, table of content, tool, Ubuntu, use, Useful, web developers, web page, Webkit, webpage, www

Posted in Linux Audio & Video, System Administration, Various, Web and CMS | 2 Comments »

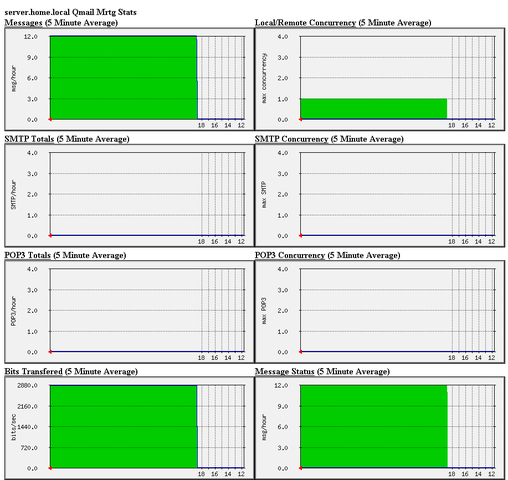

Monday, April 19th, 2010

1. First, the mrtg Debian package has to be installed.

If it’s not installed then we have to install it:

debian-server:~# apt-get install mrtg

2. Second, download the qmailmrtg source archive

To download the latest current source release of qmailmrtg execute:

debian-server:~# wget http://www.inter7.com/qmailmrtg7/qmailmrtg7-4.2.tar.gz

It’s a pity qmailmrtg is not available for download via debian repositories.

3. Third, download the qmail.mrtg.cfg configuration file

debian-server~# wget https://www.pc-freak.net/files/qmail.mrtg.cfg

Now you have to put the file somewhere; usually it's best to put it in the /etc/ directory.

Make sure the file exists as /etc/qmail.mrtg.cfg

4. Untar, compile and install the qmailmrtg binary

debian-server:~# tar -xzvvf qmailmrtg7-4.2.tar.gz

...

debian-server:~# cd qmailmrtg7-4.2

debian-server:~/qmailmrtg7-4.2# make && make install

strip qmailmrtg7

cp qmailmrtg7 /usr/local/bin

rm -rf *.o qmailmrtg7 checkq core

cc checkq.c -o checkq

./checkq

cc -s -O qmailmrtg7.c -o qmailmrtg7

qmailmrtg7.c: In function ‘main’:

qmailmrtg7.c:69: warning: incompatible implicit declaration of built-in function ‘exit’

qmailmrtg7.c:93: warning: incompatible implicit declaration of built-in function ‘exit’

qmailmrtg7.c:131: warning: incompatible implicit declaration of built-in function ‘exit’

qmailmrtg7.c:137: warning: incompatible implicit declaration of built-in function ‘exit’

5. Set proper file permissions for the user you intend to execute qmailmrtg as

I personally execute it as the root user; if you intend to do so as well, set the permissions of /etc/qmail.mrtg.cfg to 700.

In order to do that issue the command:

debian-server:~# chmod 700 /etc/qmail.mrtg.cfg

6. You will now need to modify the qmail.mrtg.cfg according to your needs

There you have to set the location where qmailmrtg should generate its HTML data files.

I use /var/www/qmailmrtg as the qmailmrtg output location. If you do the same, you have to create the directory.

7. Create qmailmrtg html log files directory

debian-server:~# mkdir /var/www/qmailmrtg

8. Now all that's left is to add a cron line that periodically invokes qmailmrtg to generate qmail activity statistics.

To add it, open root’s crontab for editing with:

debian-server:~# crontab -u root -e

I personally use and recommend adding the following line to root’s crontab (it runs mrtg every 5 minutes):

0-55/5 * * * * env LANG=C /usr/bin/mrtg /etc/qmail.mrtg.cfg > /dev/null

9. Copy index.html from the qmailmrtg source directory to /var/www/qmailmrtg

debian-server:/usr/local/src/qmailmrtg7-4.2# cp -rpf index.html /var/www/qmailmrtg
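Because the directory created in step 7 and filled in step 9 has to be the same one qmail.mrtg.cfg writes to (and the one the Apache Alias in step 10 points at), it can be less error-prone to read it from the config itself. This is a sketch that assumes the config sets MRTG's global `WorkDir:` keyword; if your copy uses `HtmlDir:` instead, adjust the awk pattern. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Read the output directory from an MRTG config and prepare it for qmailmrtg.
# Assumes the config uses MRTG's global "WorkDir:" keyword.

mrtg_workdir() {
    local cfg=${1:-/etc/qmail.mrtg.cfg}
    # Split on ":", match the keyword case-insensitively, trim the value,
    # and print only the first WorkDir found
    awk -F: 'tolower($1) == "workdir" {
                 sub(/^[ \t]+/, "", $2); sub(/[ \t\r]+$/, "", $2)
                 print $2; exit
             }' "$cfg"
}

prepare_workdir() {
    local cfg=$1 src=$2 dir
    dir=$(mrtg_workdir "$cfg")
    [ -n "$dir" ] || { echo "no WorkDir in $cfg" >&2; return 1; }
    mkdir -p "$dir"                    # step 7
    cp -p "$src/index.html" "$dir/"    # step 9
}

# Usage: prepare_workdir /etc/qmail.mrtg.cfg /usr/local/src/qmailmrtg7-4.2
```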

10. The last step is to make sure Apache’s configuration contains lines that let you access the qmail activity statistics.

The quickest way to do that on Debian running Apache 2.2 is to edit /etc/apache2/apache2.conf and add a directory Alias as follows:

Alias /qmailmrtg/ "/var/www/qmailmrtg/"

After restarting Apache with:

debian-server:~# /etc/init.d/apache2 restart

you should be able to access the qmail MRTG statistics through Apache’s default configured host.

For instance, assuming your default Apache host is domain.com, you’ll be able to reach the qmailmrtg statistics at a URL like:

http://domain.com/qmailmrtg/

After verifying that qmail MRTG worked correctly following all the steps above, I wasn’t happy with some of the headlines and the HTML title in qmailmrtg's index.html, so as a final step I manually edited /var/www/qmailmrtg/index.html to my liking.

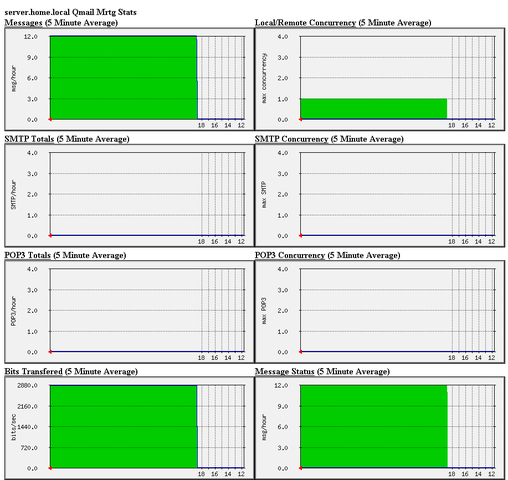

Here is a screenshot of the qmailmrtg web statistics in action.

Tags: amp, checkq, configuration file, cron, current source, debian package, debian repositories, declaration, default, download, exit, file, file permissions, freak, function, graph, host, html data, implicit declaration, indent, index, Installing qmailmrtg (qmail graph statistics on qmail activity) on Debian Lenny, loc, location, log, mrtg, necessery, package, proper location, rf, root, root user, Set, source release, statistics, strip, untar, wget

Posted in System Administration | 6 Comments »

Tuesday, August 2nd, 2011

Today I learned a handy tip from an admin colleague.

I administer some Linux servers that are deliberately configured not to run ssh on the default port (22), so each time I connect to one of them I have to invoke ssh with the -p PORT_NUMBER option.

This is not a big problem in itself, but when you administer a dozen servers, each listening for ssh connections on a different port, every now and then you have to check your notes for the correct port to connect to.

To get around this silly annoyance, the ssh client lets you preconfigure any number of hosts in ~/.ssh/config, so that it automatically picks the right port whenever you run ssh user@somehost without a -p argument.

To enable this “auto detection” of the ssh port number, ~/.ssh/config should look something like:

hipo@noah:~$ cat ~/.ssh/config

Host home.*.www.pc-freak.net

User root

Port 2020

Host www.remotesystemadministration.com

User root

Port 1212

Host sub.www.pc-freak.net

User root

Port 2222

Host www.example-server-host.com

User root

Port 1234

The * in home.*.www.pc-freak.net is a wildcard, so every host whose name matches that pattern (any home.<something>.www.pc-freak.net subdomain of my domain) is sshed to port 2020 by default.
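To double-check which port and user ssh will actually use for a host, OpenSSH 6.8 and later can print its fully evaluated configuration with `ssh -G`, without connecting. A small wrapper as a sketch; the function name `ssh_effective` is hypothetical.

```bash
#!/usr/bin/env bash
# Show the port and user ssh would use for a host, without connecting.
# Requires OpenSSH 6.8+ for "ssh -G". The optional second argument is a
# config file passed with -F; without it ssh reads ~/.ssh/config as usual.

ssh_effective() {
    local host=$1 cfg=$2
    local -a opts=()
    if [ -n "$cfg" ]; then
        opts=(-F "$cfg")
    fi
    # ssh -G prints lowercase "keyword value" lines; keep port and user
    ssh "${opts[@]}" -G "$host" | awk '$1 == "port" || $1 == "user"'
}

# Usage: ssh_effective sub.www.pc-freak.net
```

With the config above, `ssh_effective www.example-server-host.com` should report `port 1234` and `user root`. Keep in mind that ssh uses the first value it obtains for each option, so more specific Host entries belong above broader wildcard ones.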

Now I can simply use:

hipo@noah:~$ ssh root@www.example-server-host.com

and connect without bothering to remember port numbers or dig through old notes.

Hope this ssh tip is helpful.

Tags: administrate, annoyance, auto detection, cat, client, com, configHost, domain pc, example server, file, freak, handy tip, home, linux servers, net, noah, number 22, number option, order, port, port 22, port numbers, purpose, remotesystemadministration, root, root port, server host, server hosts, somehost, ssh, ssh client, ssh command, ssh connections, ssh port number, sub, subdomains, time, tip, www

Posted in Linux, System Administration | No Comments »

Monday, July 25th, 2011

If you use Skype to call your employers and hold important work-related talks over it, it might be a good idea to keep voice logs of Skype peer-to-peer or conference calls.

On Windows it’s pretty easy to record Skype voice calls, as there is plenty of software for it. On Linux, however, I could find only one application, called skype-call-recorder.

As I’m running Debian Squeeze/Wheezy (testing/unstable) on my notebook, I decided to give skype-call-recorder a try:

I’m using a 64-bit release of Debian, so first I tried installing the only version available for Debian, which is built for i386 Debian. I hoped it would run anyway, as I have emulation support for i386 applications.

So I downloaded skype-call-recorder-debian_0.8_i386.deb and installed it with dpkg's --force-all option:

root@noah:~# wget https://www.pc-freak.net/files/skype-call-recorder-debian_0.8_i386.deb

root@noah:~# dpkg -i --force-all skype-call-recorder-debian_0.8_i386.deb

...

However, the installed Debian i386 version of skype-call-recorder would not start because of the missing /usr/lib/libmp3lame.so.0 and /usr/lib/libaudid3tag.so libraries.

So I decided to try the skype-call-recorder amd64 version, which is built for Ubuntu releases 8/9.

root@noah:~# wget https://www.pc-freak.net/files/skype-call-recorder-ubuntu_0.8_amd64.deb

root@noah:~# dpkg -i skype-call-recorder-ubuntu_0.8_amd64.deb

Installing skype-call-recorder from this package went smoothly on Debian; the only issue was that I couldn’t easily find or launch the program from the Gnome Applications menu.

To work around this, I edited /usr/local/share/applications/skype-call-recorder.desktop:

root@noah:~# vim /usr/local/share/applications/skype-call-recorder.desktop

In skype-call-recorder.desktop I substituted the line:

Categories=Utility;TelephonyTools;Recorder;InstantMessaging;Qt;

with

Categories=Application;AudioVideo;Audio;
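The same substitution can be made without opening vim. A sketch using sed, assuming the .desktop path above; the function name is hypothetical, and `-i.bak` keeps a backup copy of the original file.

```bash
#!/usr/bin/env bash
# Replace the Categories= line of a .desktop file so that Gnome lists the
# program under a different menu. Keeps a .bak backup of the original.

set_desktop_categories() {
    local file=$1 categories=$2
    # The new value must not contain "/" or "&" (special in the sed replacement)
    sed -i.bak "s/^Categories=.*/Categories=${categories}/" "$file"
}

# Usage (as root):
# set_desktop_categories /usr/local/share/applications/skype-call-recorder.desktop \
#     'Application;AudioVideo;Audio;'
```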

After a quick Gnome logout and login, the program can be launched from the menu:

Application -> Sound and Video -> Skype Call Recorder

The only thing I dislike about Skype Call Recorder is that its interface is built on the Qt/KDE libraries, so when I launch it, it starts a number of KDE-related daemons like DCOP that eat extra memory on my system. Still, despite the somewhat high load, I'm happy I can record Skype voice sessions on my Debian GNU/Linux.

Tags: amd65, application menu, AudioVideo, call recorder, deb, debInstallation, Desktop, dpkg, freak, Gnome, gnome application, Install, InstantMessaging, lib, libaudid, libraries, Linux, login, logs, noah, notebook, package, recording, root, share applications, Skype, software, Sound, squeeze, tag, Ubuntu, vim, wget, work

Posted in Linux, Linux and FreeBSD Desktop, Linux Audio & Video, Skype on Linux | No Comments »

Monday, June 13th, 2011

I needed to check my mail over an ssh connection, as my SquirrelMail installation is currently broken and I’m away from my own personal computer.

I did some online research on how to do this, and thankfully I finally found a way to check my POP3 and IMAP mailbox with a console client called alpine, the successor of the client better known in the UNIX community as pine.

I installed pine on my Debian with apt:

debian:~# apt-get install alpine

Here is my pine configuration file .pinerc used to fetch my mail with pine:

a .pinerc conf file to check my pop3 mail

To use that file I placed it in my home directory ~/ , e.g.:

debian:~# wget https://www.pc-freak.net/files/.pinerc

...

To adapt the POP3 server configuration in the sample .pinerc above, change the value of:

inbox-path=

For example, to configure pine to read the INBOX mailbox on the POP3 server mail.www.pc-freak.net,

I have configured the inbox-path .pinerc variable to look like so:

inbox-path={mail.www.pc-freak.net/pop3/user=hipo@www.pc-freak.net}INBOX

In the inbox-path value above, /pop3/ specifies that mail is fetched via the POP3 protocol; to use IMAP instead, replace it with /imap/.

The value user=hipo@www.pc-freak.net specifies my vpopmail user, which in my case is hipo@www.pc-freak.net.
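The inbox-path value always has the shape {server/protocol/user=login}INBOX, so it can be generated and written into ~/.pinerc from the shell. A sketch with hypothetical helper names; it uses GNU sed's `-i` and only touches the variable it is given.

```bash
#!/usr/bin/env bash
# Build an Alpine/Pine inbox-path value and set variables in a .pinerc file.
# pine_inbox_path and set_pinerc_var are hypothetical helper names.

pine_inbox_path() {
    local server=$1 proto=$2 login=$3   # proto: pop3 or imap
    printf '{%s/%s/user=%s}INBOX\n' "$server" "$proto" "$login"
}

set_pinerc_var() {
    local file=$1 name=$2 value=$3
    if grep -q "^${name}=" "$file"; then
        # Replace the existing line; "|" is the sed delimiter, so the
        # value must not contain "|", "&" or a backslash (GNU sed -i)
        sed -i "s|^${name}=.*|${name}=${value}|" "$file"
    else
        printf '%s=%s\n' "$name" "$value" >> "$file"
    fi
}

# Usage:
# set_pinerc_var ~/.pinerc inbox-path \
#     "$(pine_inbox_path mail.www.pc-freak.net pop3 hipo@www.pc-freak.net)"
# set_pinerc_var ~/.pinerc user-domain www.pc-freak.net
```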

Other variables worth changing in .pinerc are:

personal-name=

This variable sets the sender name used when pine sends email.

I also changed the user-domain variable, which sets the domain name the pine client sends emails from:

As my domain is www.pc-freak.net I’ve set the domain name variable to be:

user-domain=www.pc-freak.net

After launching, pine prompted me for my email password; entering it fetched all my new unread mail via the POP3 protocol.

The only annoying thing is that each time I quit pine and start it again, I’m asked to enter the email password.

This behaviour is really shitty, but thankfully it's easy to work around by keeping pine running detached in a GNU screen session.

Tags: alpine, Auto, case, client, community, Computer, conf, config, configuration file, configure, connection, domain pc, Draft, email, email password, email sender, example, file, finallyfound, freak, hipo, home directory, imap, inbox, mail, mailbox, name, online, own personal computer, password, personal name, pine configuration, pinerc, pop, pop3 mail, pop3 protocol, pop3 server, Protocol, screen, server configuration, server mail, session, squirrelmail, ssh, time, unix, unix community, value, variables, vpopmail, way, wget

Posted in Linux, Various | No Comments »

Tuesday, April 26th, 2011

Tags: apple, assignment, autorewind, black, check, christian messages, cinema, clicktarget, clickurl, description, download, Festival, Film, film arts, FLOWPLAYER, flvmovie, folklore, folklore music, freak, funny name, Graduation, graduation assignment, having fun, height, jury, loop, modern day music, moscow, movie, music, Myspace, name, NATFA, National, national academy, national folklore, NATVIS, nbsp, Orthodox, player, spiritual, splashimage, story, StoryDaniela, swf, uniqueness, White, work

Posted in Entertainment, Everyday Life, Various | No Comments »